In the colocation project last year, one of the biggest reasons for changing from a previous Amazon EC2 cloud infrastructure to a colocation platform was the cost of bandwidth. Since writing that piece we have received weekly messages from individuals and companies that have found the same thing: bandwidth is a driver for moving to dedicated hosting or colocation. For many shared hosts, “unlimited” hosting is the norm, but the bandwidth discussion comes into play even when evaluating virtual private server (VPS) options.

Leaving the colocation, dedicated hosting and VPS distinction aside for this article, we are going to focus on one very important piece of the equation: the network connection between your hosting platform and the outside world. Here are a few key terms that we will use in this guide:

- Data Transfer – the total amount of data passed between your hosting environment and the outside world

- Bandwidth – the maximum speed at which your connection can transmit data

- Throughput – the actual rate at which data can be transferred through a connection

- Unmetered – transfer up to a given bandwidth over a period of time without overages

- Unlimited – often refers to there being no formal cap on data transfer

- 95th Percentile Billing – a billing method whereby the top 5% of bandwidth values are discarded for usage calculations

- Burstable bandwidth – ability for a connection’s bandwidth to exceed a normal reserved bandwidth amount

- PPS – packets per second, the number of packets that can actually be processed by the connection

- Oversubscription – almost all providers “oversubscribe” their networks, reselling a total amount of bandwidth to end users that is greater than the network could support if all end users were utilizing 100% of their service. We will have more on this in a future article

Data Transfer

Data transfer generally refers to the volume of data passed between your colocation/ dedicated server/ switch and the hosting provider’s infrastructure in a given period, most commonly on a monthly basis. Sometimes this is called traffic, but since traffic has many other meanings when hosting web applications, such as visitors and page views, this is less common. Data transfer is usually measured in multiples of bytes (one byte is 8 bits). Years ago using gigabytes was common and one would see “600GB/ mo of data transfer” in hosting offers. These days, connectivity is at the point where most offers express included data transfer in terms of terabytes. An example of this would be 3.24TB/ month. These limits specify the total amount of data that can be passed through a connection in a given month. In that example, 3.24TB is roughly equivalent to 3.56 x 10^12 bytes transferred when a terabyte is counted as 1024^4 bytes. If one goes above a data transfer limit there is usually an additional charge that is specified in the agreement. That charge is generally at a higher cost per TB than the original package. It is important to note here that cloud providers such as Amazon categorize data transfer in a few ways.

First, inbound data transfer (transfer to the cloud) on the AWS cloud is currently free. There are then small monthly data allowances for outbound data transfer (transfer from AWS instances to elsewhere). Amazon and other cloud hosting providers sometimes distinguish destinations. For example, data transfer within an AWS region may be free. Data transfer between regions may be discounted. Data transfer externally may cost significantly more. This is the case because Amazon can differentiate pricing between its infrastructure endpoints and the rest of the world.

For VPS and dedicated server offerings, data transfer is usually as easy as measuring the amount of data passed from the server to the external network. For colocation options (with multiple servers), so long as the traffic is passed through a customer switch on the customer’s own network, that data transfer is free. Any data passed from the customer’s network to the hosting provider’s network is generally considered part of the data transfer limit. If a hosting plan has a quantity of data (e.g. TB) expressed in a time period (e.g. month) it is usually billed on a data transfer basis. Data transfer billing is one of the key reasons web applications leave the cloud over time, as their usage patterns become heavily outbound, for example with 1:20 inbound: outbound data transfer ratios.

Bandwidth

Bandwidth is a measure of the rate at which data can be transferred between the client’s server or network and the hosting provider’s network/ the external Internet. An example of this would be 20mbps or 1gbps of bandwidth. Since the most common connection type in datacenters is Ethernet, which commonly comes in 100mbps, 1gbps or 10gbps link speeds, hosting providers will sometimes simply quote a port speed such as a 100mbps port. Port speeds do not always accurately determine the amount of potential data transfer since bandwidth allowances often do not match Ethernet speeds. A big note here: bandwidth is almost always denominated in bits, not bytes like data transfer. Remember that 8 bits equal 1 byte. In our previous example we used 3.24TB/mo of data transfer. Another way to express this is a sustained 10mbps. One can do some math and figure: 10mbps / 8 bits/byte * 60s/ min * 60 min/ hour * 24 hour/day * 30 days/ month and get 3,240,000MB transferred in a month. Using hard drive manufacturer standards (1000MB = 1GB) that would be 3.24TB/ month. Alternatively, using normal base 2 math (1024MB = 1GB), it works out to roughly 3.09TB/ month. Either way, that gives the theoretical ceiling on data transfer for a server or private network at that bandwidth.
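The conversion from a sustained bandwidth figure to monthly data transfer can be sketched in a few lines of Python. This is only an illustration, assuming a 30-day month and a link held at its full rate the entire time; the function name `monthly_transfer_mb` is our own and not part of any provider's tooling.

```python
SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # assumption: a 30-day month


def monthly_transfer_mb(bandwidth_mbps: float) -> float:
    """Megabytes moved in a month at a sustained rate given in megabits/s."""
    megabytes_per_second = bandwidth_mbps / 8  # 8 bits per byte
    return megabytes_per_second * SECONDS_PER_MONTH


mb = monthly_transfer_mb(10)
print(f"{mb:,.0f} MB per month")               # 3,240,000 MB per month
print(f"{mb / 1000 / 1000:.2f} TB (base 10)")  # 3.24 TB (base 10)
print(f"{mb / 1024 / 1024:.2f} TB (base 2)")   # 3.09 TB (base 2)
```

The division by 8 is the step that is easiest to get wrong: bandwidth is quoted in bits while data transfer is quoted in bytes.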

Another major note here is that one needs a compatible NIC. For example, if one has a colocation with a 1gbps port and is using a 10gbps Intel X540-T2 NIC in the server, the link will likely run at 1gbps not 10gbps.

Throughput

Throughput is usually a measure of what one actually can achieve. For example, a 1 gigabit Ethernet port in theory can transfer 125MB/s. In reality, getting 115MB/s to 120MB/s is a good result. Realistically, there are often other limitations so getting around 100MB/s is generally good.

There are a number of factors that impact throughput. Common impacts are server speed, network link speed, protocol overhead, packet size and packet processing speed to name a few. One of the more notorious culprits can be oversubscribed bandwidth at the hosting provider. One way hosting providers are able to keep costs down is by oversubscribing the bandwidth they have. This is the exact same principle as one sees with virtual machines (and therefore the compute part of VPS solutions.) Here is a quick example: The hosting provider has 100mbps of bandwidth to the outside world. Customer A uses 90mbps between 12AM and 12PM but 10mbps between 12PM and 12AM. Customers B and C each use 5mbps between 12AM and 12PM and 45mbps between 12PM and 12AM. With these three customers, all three can run at full speeds for their entire day cycle and utilize a constant 100mbps of bandwidth. Customer A can be given 90mbps and customers B and C 45mbps each and they will not experience a slowdown. The hosting provider has 180mbps of bandwidth promised but only a 100mbps connection to the outside world. That was a simple example but when the numbers get into the hundreds, thousands or millions, traffic patterns become relatively known and easier to manage and bandwidth pools become bigger. The bottom line is that if you need 50mbps of throughput, you need more bandwidth to be available from your hosting provider because there are a number of factors that will reduce throughput from a given bandwidth allocation.
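The three-customer oversubscription example above can be checked with a short Python sketch. The numbers come straight from the example; the variable names are ours, and a real provider would work from measured traffic rather than two fixed half-day windows.

```python
# Usage in mbps per customer as (12AM-12PM, 12PM-12AM), from the example
usage = {"A": (90, 10), "B": (5, 45), "C": (5, 45)}
UPLINK_MBPS = 100  # the provider's connection to the outside world

# Bandwidth promised is the sum of each customer's own peak
promised_mbps = sum(max(windows) for windows in usage.values())

# Actual load on the uplink in each of the two windows
load_per_window = [sum(w[i] for w in usage.values()) for i in range(2)]

oversubscription = promised_mbps / UPLINK_MBPS

print(promised_mbps)     # 180
print(load_per_window)   # [100, 100]
print(oversubscription)  # 1.8
```

Because the customers peak at different times, the uplink never sees more than 100mbps even though 180mbps has been sold.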

Unmetered Connections

Many hosting offers have “unmetered” connections. These will be noted as such and will typically be an amount of bandwidth over time. If you have a 100mbps unmetered port, that means you can transfer at up to 100mbps at any given time and not be charged for overages. The benefit of unmetered hosting is that there is a relatively constant bandwidth cost since you are not charged for incremental data transfer. The disadvantage is that the throughput achieved tends to be significantly lower than the unmetered limit. There is a simple reason for this. Web traffic tends to pick up when users are awake and drop off as users sleep. It is not uncommon for many sites to see 10x or more traffic at the peak times in a day compared to the slowest times. If this were a completely linear usage model, average usage would then be approximately ( 10 + 1 ) / 2 = 5.5x the slowest times or 55% of the peak times. If your unmetered connection perfectly handles peak usage at 100mbps, it means your average usage is only 55mbps given that scenario. Here is an example recently shared by Backblaze regarding a new 5gbps connection they installed:

One can see traffic hitting the 5gbps peak on Thursday but also that the majority of the network graph shows bandwidth under 5gbps on Friday. One other aspect is important when buying unmetered hosting: service provider oversubscription. For example, if a provider gives a 100mbps “unmetered” connection but has 42x 100mbps “unmetered” connections in a top of rack switch with only a single 1gbps uplink port, the total bandwidth available for all customers in that rack may only be 1gbps, or around 1/4 of the bandwidth all users would utilize if they had their connections at 100%. Further, if the provider only has one 10gbps uplink to the Internet but 20 racks with 1gbps uplinks, there is another potential bottleneck. Unmetered connections are usually not immune to providers oversubscribing their networks. There are more than a few examples of budget providers that heavily oversubscribe their networks. Time of day peaks and valleys in visitor traffic are common in almost every application, so they are key to determining the value one will achieve out of a given unmetered connection.
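The two calculations in this section, average usage under a linear daily pattern and the oversubscription of a rack, can be sketched as follows. This is an illustration only: both helper names are hypothetical, and the linear model is the same simplification used in the text.

```python
def average_fraction_of_peak(peak_to_trough: float) -> float:
    """Average load as a fraction of peak, assuming load varies linearly
    between the slowest and the busiest times of day."""
    average_multiple = (peak_to_trough + 1) / 2  # in units of the slowest load
    return average_multiple / peak_to_trough


def oversubscription_ratio(sold_mbps: float, uplink_mbps: float) -> float:
    """How many times over the uplink capacity has been sold."""
    return sold_mbps / uplink_mbps


# 10x peak-to-trough traffic on a 100mbps unmetered port
print(f"{average_fraction_of_peak(10) * 100:.0f}mbps average")  # 55mbps average

# 42 x 100mbps customer ports behind a single 1gbps rack uplink
print(f"{oversubscription_ratio(42 * 100, 1000):.1f}:1 at the rack")  # 4.2:1

# 20 racks with 1gbps uplinks behind a single 10gbps Internet uplink
print(f"{oversubscription_ratio(20 * 1000, 10_000):.1f}:1 upstream")  # 2.0:1
```

A rack sold at 4.2:1 leaves each customer roughly 1/4 of their port speed if everyone runs flat out at once, which matches the figure above.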

Burstable Connections

Burstable connections are perhaps the most interesting. If a hosting offer says 10mbps on a 100mbps burstable port, we can break down the offer into components we already introduced. 10mbps is the amount of bandwidth you have available under contract. 100mbps generally means that you can achieve bandwidth and throughput of up to 100mbps, which is 90mbps beyond your contracted bandwidth. This is important to many users simply because traffic is not constant. At the end of a year or quarter, sales and ERP systems see large spikes in traffic. Mentions on Reddit or Twitter can generate huge bursts of traffic to a website. The bottom line, as we mentioned earlier, is that traffic is almost never 100% constant. Having a burstable connection allows for additional traffic (up to the burstable limit) to be handled without any configuration change. Of course, if you reserve 10mbps of bandwidth under contract, but then burst up to 100mbps, you are going over your contract value. This may result in an overage, especially if one is consistently using more than the 10mbps limit in our example. If you wanted to be extremely conservative on a burstable connection, you could limit your inbound and outbound traffic to 10mbps, which would more or less ensure you do not go over bandwidth limits. Here is an example of a colocation facility that has a few occasional spikes beyond 10mbps:

If maximum bandwidth were limited to 10mbps, for example because the switch port was configured as 10mbps Ethernet, then there are a few spikes in this colocation example where speed would have been limited by the total bandwidth available. Spikes happen, and the hosting industry has long had a solution for this: 95th percentile (or 95th for short) billing.

95th Percentile Billing

The premise here is simple, and 95th percentile billing is probably most prevalent on burstable ports since they generally leave a lot of room for spikes to cause overages. 95th billing generally has a few key components:

- Billing periods (usually months) are broken into smaller increments (usually 5 minute increments)

- Average bandwidth utilized is measured at each increment

- The top 5% of these bandwidth measurements are then discarded for calculations

- The next highest increment is used for billing purposes

- Generally hosting providers calculate both inbound and outbound 95ths and then bill based on the higher of the two
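The steps above can be sketched in Python. This is a minimal illustration, not any provider's actual billing code: the function names are ours, and the choice to round the number of discarded samples down (integer division by 20) is an assumption, since providers differ on such details.

```python
def percentile_95(samples_mbps):
    """Discard the top 5% of samples and return the highest one remaining."""
    if not samples_mbps:
        raise ValueError("need at least one bandwidth sample")
    ordered = sorted(samples_mbps)
    discard = len(ordered) // 20  # top 5% of samples, rounded down (assumption)
    return ordered[len(ordered) - discard - 1]


def billable_mbps(inbound_mbps, outbound_mbps):
    """Bill on the higher of the inbound and outbound 95th percentiles."""
    return max(percentile_95(inbound_mbps), percentile_95(outbound_mbps))


# Hypothetical data: 100 five-minute averages per direction
outbound = [4.0] * 95 + [80.0] * 5  # five short spikes, all discarded
inbound = [1.0] * 100
print(billable_mbps(inbound, outbound))  # 4.0

# One more spike pushes a spike sample into the billed position
outbound_spikier = [4.0] * 94 + [80.0] * 6
print(billable_mbps(inbound, outbound_spikier))  # 80.0
```

With 5 minute increments, a 30 day month has 8,640 samples per direction, so the top 432 are discarded. That is 36 hours of bursting that does not affect the bill.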

If that next highest increment is within the sustained bandwidth limits on a burstable port there is no overage. As an example, ServeTheHome is a fairly static website with sub 2MB page loads. Spikes from time to time may reach in excess of 50mbps but 95th billing usually means the site uses single digit mbps in a given month.

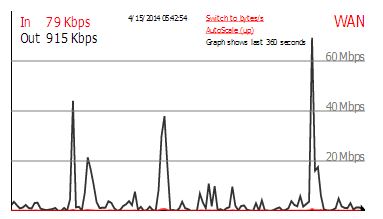

The above picture shows a colocation site where there are spikes up to 28.6mbps out from and 12.2mbps into the colocation. The average transfer is 2.3mbps out and just under 0.5mbps in. 95th billing over this week long period is at 5.75mbps out and 1.23mbps in, so a 6mbps burstable port on 95th billing would easily cover this. Although this does not look perfectly even, it is not an uncommon pattern to see for a smaller site that gets a burst of traffic. This can have an extreme impact on the amount of data transferred, but also the costs. For example, with a 10mbps 95th on a 100mbps port, the 10mbps commitment corresponds to the 3.24TB of data transfer per month used in the above examples. If we had a perfect usage pattern for that billing arrangement, and bandwidth equaled throughput, the maximum amount of data on our burstable 95th port that could be transferred each month would be: 3.24TB * 95% of the time + 32.4TB (monthly data transfer on a 100mbps port) * 5% of the time = a maximum of 4.698TB transferred per month. So in our example, perfect utilization of bursting added about 1.458TB of data transfer in a month, or about 45% more data transfer than on a limited 10mbps connection. On the other hand, in practice, there is seldom a steady state, all consuming 95th workload. Instead, extreme spikes can be the norm, such as when one finds content featured on a subreddit:

In the above example, there is a spike to over 96Mbps. The average is only 3.33Mbps but the 95th billing (red line) would be done at 36.55Mbps. If one had a 10Mbps 95th connection there would be significant overages. The bottom line is that 95th billing can be great, but one needs to be careful with matching a workload.
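The perfect-utilization figure from earlier in this section, and the size of the overage in the Reddit spike example, can be reproduced with a short sketch. It assumes a 30-day month, base 10 terabytes and bandwidth equal to throughput, as in the text; the helper names are hypothetical.

```python
SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # assumption: a 30-day month


def monthly_tb(mbps: float) -> float:
    """Base 10 terabytes moved in a month at a sustained rate in megabits/s."""
    return mbps / 8 * SECONDS_PER_MONTH / 1_000_000


def max_burstable_tb(committed_mbps, port_mbps, burst_share=0.05):
    """Most data a 95th-billed port can move without exceeding the commitment:
    port speed for the discarded 5% of the time, committed rate otherwise."""
    return (monthly_tb(committed_mbps) * (1 - burst_share)
            + monthly_tb(port_mbps) * burst_share)


committed = monthly_tb(10)
maximum = max_burstable_tb(10, 100)
extra = maximum - committed
print(f"{maximum:.3f}TB maximum")                             # 4.698TB maximum
print(f"{extra:.3f}TB extra, {extra / committed:.0%} more")   # 1.458TB extra, 45% more

# The spike example: billed at 36.55Mbps against a 10Mbps commitment
overage_mbps = max(0.0, 36.55 - 10)
print(f"{overage_mbps:.2f}Mbps over the commitment")          # 26.55Mbps over
```

How that overage is priced depends entirely on the hosting agreement, so the sketch stops at the bandwidth figure.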

Putting it all together

At the end of the day, picking the right data transfer model can have major impacts on cost. Overages can cost a significant amount of money, as can data transfer, especially at more expensive cloud providers. One should certainly profile their application and usage before entering into a hosting agreement and ensure the billing model matches their usage model. Another good practice is to leave at least some buffer in terms of bandwidth limits so that overages do not occur, or at least do not occur too frequently. The amount of data transferred is only one piece of the puzzle though. Another key aspect in many applications is network quality, but that is a topic that will be covered in a subsequent piece. Hopefully this helps in terms of understanding common hosting billing models for bandwidth/ data transfer. Feel free to share this article or discuss it either below or in the forums. If clarifications are needed, we are happy to make updates.