Recently I have been working on a new mini cluster project. With the release of Intel’s Avoton platform, there is finally a solution that makes sense for building small points of presence. Industry standards allow for roughly 110-120w of power per rack unit at just about any data center. While no company has made a perfect solution yet, the goal has been to start playing with small clusters in a box to push density using easy-to-find, off-the-shelf parts.

For the build we utilized the BitFenix Prodigy mini-ITX chassis. As far as mini-ITX chassis go, this is certainly not a small case. It is meant to be an excellent platform for the higher-end mITX systems with many drives and even dual-slot GPU coolers. The chassis is still very sub-optimal for what we are doing, but it did work which is good enough for this proof of concept.

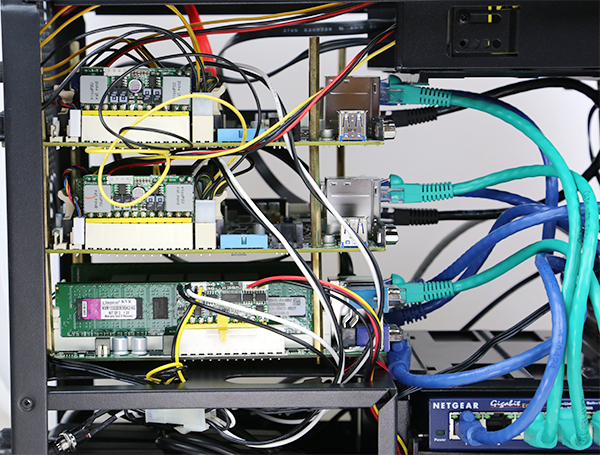

Inside the chassis is the cluster. Motherboards are stacked inside the chassis. Power remains in the same compartment as a standard power supply, yet consumes much less space. Networking is handled via two switches, one for IPMI and one for data. The mass of short CAT-6 Ethernet cables can be found where the BitFenix removable 3.5″ hard drive rack would otherwise go. We also moved the 120mm fan to a higher position. The Intel Atom C2750 is a very cool running chip, but components do need at least some airflow.

Here is the stack of three Intel Atom C2750 platforms. The top two are Supermicro A1SAi-2750F platforms with the bottom being an ASRock C2750D4I. Notably, these are also the platforms currently available for this type of project. The Supermicro platforms offer four network ports, so we were able to connect them both to the switch and to the other units directly. All three motherboards had at least two data network ports connected as well as the IPMI NICs.

Another change here was that we used readily available PicoPSUs to power the machines. This was the simplest way to get everything running given our space constraints. Certainly an enterprising company could wire up one PSU to all three motherboards, since a single PicoPSU 150XT unit can easily cover the cluster’s total power consumption.

In terms of networking, this required a bit of creativity. 16-port switches were physically too large, so a compromise was made. A 5-port Netgear GS105 v4 fit neatly in a 3.5″ drive bay and provides IPMI networking for the existing nodes plus a potential future node. An 8-port TP-LINK gigabit switch fit between the hard drive cage and the front chassis mesh with a little bit of maneuvering. One of the switch ports is routed to the rear of the chassis to provide connectivity to the external network.

On the opposite side of the chassis one can see the expansion slots for each platform and some additional cable routing (not pretty, I know, but this is still a cluster being added to).

One major callout is that under the bottom motherboard there is a piece of red electrical tape. This is because the motherboards are rotated 180 degrees so that the rear of the motherboards faces the front of the chassis. That change means the mITX mounting holes align with the motherboards at only two of the mounting points instead of all four. Electrical tape was used on the two unused mounting points to provide insulation.

In the 5.25″ expansion bay we added an Icy Dock 6-in-1 2.5″ hot swap chassis. This can easily provide six drives to be split among the three nodes. Further, the chassis does have on-door mounting and the additional unused 3.5″ mounting spot for additional storage capacity.

Power is a mess. We are using three small power bricks in the standard PSU cutout. Along with these three items we also have the TP-Link and Netgear switch power adapters.

We are still looking for a clean power solution such as a power strip so that we can simply have one power plug and one Ethernet plug, making the Mini Cluster in a Box V2 simple to set up.

Conclusion

This build was significantly more practical than the initial build, which utilized an Intel Atom S1260 as well as two Raspberry Pi nodes. In terms of power consumption, we are drawing around 55-60w during normal idling. Under full load, and after time for some heat soak, we generally hit around 112w with only three SSDs for storage. That is certainly in line with the design goals.

In the end, we crammed three nodes (in some applications more important than individual performance), up to 96GB of RAM (we used only 64GB for POC purposes), 10 data NICs, just about 1.5TB of storage capacity with 24 onboard SATA ports, an IPMI network and switches into a relatively small and portable form factor. The best part is, adding a fourth mITX platform is not out of the question (and is, in fact, a project.)
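The totals above can be sanity-checked with a short tally. This is only a sketch: the article states the cluster-wide totals and that the Supermicro boards have four network ports, but the remaining per-board figures below (two data NICs on the ASRock, 6/6/12 SATA ports, 32GB maximum RAM per board) are assumptions chosen to be consistent with those totals, and the `Node` type is purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Illustrative per-board resource record (not from the article)."""
    name: str
    data_nics: int
    sata_ports: int
    max_ram_gb: int


# Assumed per-board figures; only the cluster totals and the four NICs
# on the Supermicro boards come from the article itself.
NODES = [
    Node("Supermicro A1SAi-2750F #1", data_nics=4, sata_ports=6, max_ram_gb=32),
    Node("Supermicro A1SAi-2750F #2", data_nics=4, sata_ports=6, max_ram_gb=32),
    Node("ASRock C2750D4I", data_nics=2, sata_ports=12, max_ram_gb=32),
]


def totals(nodes):
    """Sum each resource across all nodes in the cluster."""
    return {
        "nodes": len(nodes),
        "data_nics": sum(n.data_nics for n in nodes),
        "sata_ports": sum(n.sata_ports for n in nodes),
        "max_ram_gb": sum(n.max_ram_gb for n in nodes),
    }


if __name__ == "__main__":
    for key, value in totals(NODES).items():
        print(f"{key}: {value}")
```

Adding a fourth entry to `NODES` is a quick way to see what a fourth mITX platform would contribute.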

The major thing this proof of concept showed is that 1A at 120v per 1U is now the realm of a small cluster rather than a single system. In fact, given the Intel Atom C2750 performance, we can now easily fit a cluster of servers, including networking, into a small form factor with low power consumption thresholds.
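As a rough check of that claim, the sketch below compares the measured draw against a 1A at 120v allotment. It assumes watts can be treated as volts times amps (power factor is ignored), and the function names are illustrative rather than anything used in the build.

```python
# Measured figures quoted in the article.
IDLE_WATTS_RANGE = (55.0, 60.0)
FULL_LOAD_WATTS = 112.0


def rack_unit_budget_watts(amps=1.0, volts=120.0):
    """Power allotment per rack unit, assuming W = V * A (power factor ignored)."""
    return amps * volts


def headroom_watts(load_watts, budget_watts):
    """Watts left in the budget at the given load."""
    return budget_watts - load_watts


def utilization(load_watts, budget_watts):
    """Fraction of the budget consumed at the given load."""
    return load_watts / budget_watts


if __name__ == "__main__":
    budget = rack_unit_budget_watts()
    print(f"budget: {budget:.0f} W")
    print(f"full-load headroom: {headroom_watts(FULL_LOAD_WATTS, budget):.0f} W")
    print(f"full-load utilization: {utilization(FULL_LOAD_WATTS, budget):.1%}")
    for idle in IDLE_WATTS_RANGE:
        print(f"idle at {idle:.0f} W: {utilization(idle, budget):.1%} of budget")
```

At full load the three-node box sits just under the allotment, which leaves little room for a fourth node without revisiting the budget.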

Certainly there are things that can be improved upon. The networking situation is sub-optimal, and realistically an Avoton node with a pfSense VM could be used instead of an additional network switch, which would save some power and provide better management capabilities. On the power side, a right-sized power strip is desperately needed. Even better still if a small battery back-up unit could be utilized. The chassis is significantly too large, but was easy to work with. Still, as far as proofs of concept go, this is just about what we wanted to see.

Head over to the forum post on this cluster if you have ideas and to see more information about the build.

Do all of the power supplies output 12v? If so, there are many compact 12v PSUs that can be had, even ones that are designed to fit in a standard 5.25″ drive bay. You would simply trim the power cables and attach them to the screw terminals on the PSU. (Or you could get something like this: http://www.newegg.com/Product/Product.aspx?Item=N82E16817104054 )

Point is, if they actually are all a standardized voltage, your power supply options really open up.

You need to get this into a 1U. Easily enough space. Wonder why HP doesn’t just build this or a 2U 2A (1A EU) version???

I am really interested in a 1U build too as it is more practical in a server environment.

Can you do a build and blog on that? Would be great!

There should be a cheaper and quieter way than going for a Casepro B9ITX (http://www.casepro.cn/datasheet/B9ITX-datasheet.pdf). That is 16 mini-ITX boards in a 5U case, with 40mm fans, which are never silent in my experience.

I would rather go for a cheap and silent way (80mm fans) of 4x mini-ITX in a 2U case with 1 power supply, all connections on the back (so a normal switch in the rack can be used).

Anyone?

Great project. That 5U linked in the comment above is way too big. May as well get a 3U micro cloud that has higher density at that point and simplify power supplies.

Love the project. Also want to see a 1U or 2U rackmount.

For those who stay with a desktop case, see here a tower with 14 mini itx, power and switch in 1 case: http://www.meisterkuehler.de/wiki/index.php

A 1U case for 2 mini-ITX blades is already on the market (http://www.casepro.cn/datasheet/M550-datasheet.pdf), but these still use their own PSUs and annoying 40mm fans, so there should be a cheaper (less PSU-redundant) solution.

Besides 2U cases are cheaper most of the time vs 1U cases, compared to the space you get.

The SuperMicro boards have a separate 12v input that you can use instead of an ATX power supply. I don’t know if the ASRock does too – but assuming it does, you could always use something like this instead of the three separate bricks: http://www.ebay.com/itm/LED-Universal-Regulated-Switching-Power-Supply-AC-to-DC-/250876072121. With a little searching around you could probably find one that delivers both 12v and 5v so that you can power the SSDs too. Then you just have to make sure your internal switches run off either 12v or 5v supplies (unfortunately, a lot of them use 9v or 19v inputs…).

Try this instead of 8 PSUs:

8CH Power Supply CCTV Cameras 8 Port 12V 6A DC+Pigtail

Fire hazard looking AC adapter from China

Only if you don’t understand electricity. Don’t forget your entire rack’s power uses only 2 copper wires from the socket.

If you want extra safety, assemble your own fuse box in your setup:

18-Port Fused Outputs 12V DC 12Amp UL list CCTV Power Supply Box Security Camera

16 Channel CCTV Security Camera Power Supply Box 18 Port 10A 12V DC

By the way, all your AC adapters are made in China. Your $100,000 Cisco is also made in China.

This one offers more emotional comfort for those unfamiliar with AC/DC:

9 Port PTC Protected Wall Mount Power Supply, 12V DC,7Amp

Easily fitted inside any rack/chassis, plug in your own pigtail DC jacks on the back and you’re good to go.

Drill a few holes and place it next to a fan, and you end up with something much safer and more space/heat efficient than a bunch of adapters tied together.

These HDD-size AC/DC power supplies also work; pick your own based on load:

240w:

http://www.amazon.com/Switch-Power-Supply-Driver-Display/dp/B00GMCL9EQ

120w:

http://www.amazon.com/Generic-selling-Switching-Power-Supply/dp/B007C055BK

60w:

http://www.amazon.com/Switching-Switch-Power-Supply-Driver/dp/B00FGQ0C7O

1. Efficient work and low temperature

2. Comes with the functions of overvoltage protection, short circuit protection and overload protection

3. Constant voltage output to ensure the stability of the power supply

By much safer I mean this AC/DC conversion setup is actually earthed, as opposed to the usual AC/DC adapter that doesn’t even have an earth wire.
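To pick among the supplies listed in this comment, one rough approach is to convert each rating to watts and compare it with the article's 112w full-load figure plus some headroom. The sketch below is only that: the 20% margin is an assumption, the 112w figure was measured for the whole box rather than on the 12v side alone, and the short labels are shorthand for the listings above.

```python
MEASURED_LOAD_WATTS = 112.0  # full-load figure reported in the article
MARGIN = 0.20                # assumed headroom; adjust to taste

# 12v supplies rated in amps, as quoted in the listings above.
AMP_RATED_12V = {
    "8-port CCTV supply": 6.0,
    "18-port fused box": 12.0,
    "16-channel box": 10.0,
    "9-port PTC supply": 7.0,
}

# HDD-size switching supplies rated directly in watts.
WATT_RATED = {
    "240w switching supply": 240.0,
    "120w switching supply": 120.0,
    "60w switching supply": 60.0,
}


def required_watts(load_watts=MEASURED_LOAD_WATTS, margin=MARGIN):
    """Load plus a fractional safety margin."""
    return load_watts * (1.0 + margin)


def capacities_watts():
    """Rated output of every listed supply, in watts."""
    caps = {name: 12.0 * amps for name, amps in AMP_RATED_12V.items()}
    caps.update(WATT_RATED)
    return caps


def adequate_supplies(load_watts=MEASURED_LOAD_WATTS, margin=MARGIN):
    """Names of supplies whose rating covers the load plus margin, sorted."""
    need = required_watts(load_watts, margin)
    return sorted(name for name, watts in capacities_watts().items() if watts >= need)


if __name__ == "__main__":
    print(f"need at least {required_watts():.1f} W")
    for name in adequate_supplies():
        print("ok:", name)
```

With the assumed margin, only the 12A box and the 240w unit clear the bar; the 10A and 120w options cover the measured load but leave almost no headroom.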

This is a stupid article. I’m running 96 GB DDR3 1600 overclocked to 1640 on a micro ATX Rampage III Gene with an X5690 @ 3.90 GHz and it destroys this setup in everything. What a ridiculous article!

If you find you don’t need one of the boards + RAM anymore, you’re welcome to contact me.

Cheers

You obviously don’t understand the concept. Doing a serious setup and then putting an OC on it speaks volumes. You should go troll somewhere else, which I can see you also do.

Hey David, I’m very curious to find out the exact spec you have running, i.e. MB and RAM part numbers. I would like to price out how much it would cost on, say, the used parts market.

At the present time I am using an i7-2600 Shuttle box as my VMware ESX lab, but being limited to 32GB is causing a bottleneck, and I have been looking at the Supermicro and ASRock based Avoton Mini-ITX solutions out there purely for their small size.

Patrick:

Thank you so much for sharing this mini-ITX cluster configuration. I am really very keen to go down this route purely for the potential of being able to pack so much processing and possibly storage capability into a small package.

I would greatly appreciate your thoughts on how these Avoton based systems perform when running multiple virtual machines for lab use, where the priority is being able to power on, in my case, between 10-15 VMs with different memory and vCPU configurations, ranging from 1GB to 4GB/8GB RAM and 2-4 vCPUs per VM.
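For a first-pass feel of whether a lab like that fits, a back-of-the-envelope sketch can compare VM demand with the cluster figures from the article (three 8-core C2750 nodes, 64GB installed, up to 96GB). It says nothing about responsiveness, ignores hypervisor overhead, and treats the cluster as one pool even though a single VM cannot span nodes; the helper names are illustrative.

```python
NODES = 3
CORES_PER_NODE = 8        # Atom C2750 is an 8-core part
RAM_INSTALLED_GB = 64     # used for the proof of concept
RAM_MAX_GB = 96           # maximum quoted in the article


def demand(vm_count, ram_gb_each, vcpus_each):
    """Total (RAM in GB, vCPUs) for a set of identically sized VMs."""
    return vm_count * ram_gb_each, vm_count * vcpus_each


def fits_in_ram(ram_demand_gb, ram_available_gb):
    """True if demand fits without memory overcommit (overhead ignored)."""
    return ram_demand_gb <= ram_available_gb


def vcpu_ratio(vcpu_demand, nodes=NODES, cores_per_node=CORES_PER_NODE):
    """vCPUs requested per physical core across the whole cluster."""
    return vcpu_demand / (nodes * cores_per_node)


if __name__ == "__main__":
    scenarios = {
        "light (10 VMs, 1GB, 2 vCPU)": demand(10, 1, 2),
        "heavy (15 VMs, 8GB, 4 vCPU)": demand(15, 8, 4),
    }
    for label, (ram, vcpus) in scenarios.items():
        print(label)
        print(f"  RAM: {ram}GB, fits in 64GB: {fits_in_ram(ram, RAM_INSTALLED_GB)}, "
              f"fits in 96GB: {fits_in_ram(ram, RAM_MAX_GB)}")
        print(f"  vCPU:core ratio: {vcpu_ratio(vcpus):.2f}")
```

The two scenarios bracket the range described: the light end fits comfortably, while fifteen 8GB VMs would exceed even the 96GB maximum without memory overcommit.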

What I am very keen to find out is, with this many VMs running at the same time, how responsive the system is once the VMs have been powered on and booted up. The Atom processors are 8-core, but in terms of horsepower this processor is well below my 2nd Gen i7-2600.

Any light you can shed on the above will be greatly appreciated.

Thanks

M

Hi

This is a cool setup, something that I too had in mind but with Intel NUC.

A side project just to setup a low power datacenter inspired by

http://www.tranquilpcshop.co.uk/ubuntu-orange-box/

Was planning on using 4x i5 or i3 Intel NUCs stacked in a case

Case : Fractal Design Node 304 + 450W PSU

To power the NUCs I would use Molex or PCI-E to pigtail converters,

http://www.ebay.com/itm/Molex-4-Pin-to-2-Gridseed-Power-Plug-2-1mm-DC-Barrel-Plug/271565450797

so all the cabling happens inside without wires coming out of it. Is this a good idea?

And of course a Netgear 4-port router inside the case.

Software : Ubuntu MAAS and Ubuntu Juju

You could also wire all 3 boards to an HDPlex 300w DC-ATX PSU. You would only need a single 19v+ laptop power brick. So in essence you would have 2-3 cords running out of the back instead of what you have now. Or, like you said, run a small power strip and run a single cord out. Either way, I am looking at building something similar for my homelab, maybe even with the Avoton C2758.

BTW, I know this article is a little out of date now but it could still be viable. Any consideration to revamping this project?