At Intel Data Center Day 2013, Intel debuted its vision of the future. The San Francisco event was called Re-imagine the Data Center and was, in a way, a business unit update. Of course, Intel took the opportunity to highlight some cool technology, from its low-end next-generation Atom, code-named Avoton, to higher-end parts such as Xeon Phi and the E3 and E5 series, as well as interconnects. Bottom line: an exciting day of announcements. The overall vision is interesting, and it is something we saw a glimpse of with the Centerton launch months ago.

Avoton is the New Atom – x86 SoCs Are Coming

A major component of today’s event was x86 architectures going low power. The basic Intel Atom architecture has remained largely the same since 2008/2009, when Atom was first introduced: low power, but a process node behind. Intel is moving to a more modern process with Avoton and improving performance significantly. As we saw in our Intel Atom S1260 Centerton v. the Cloud review, the current-generation Atom did a lot for power consumption, but its performance was not significantly better.

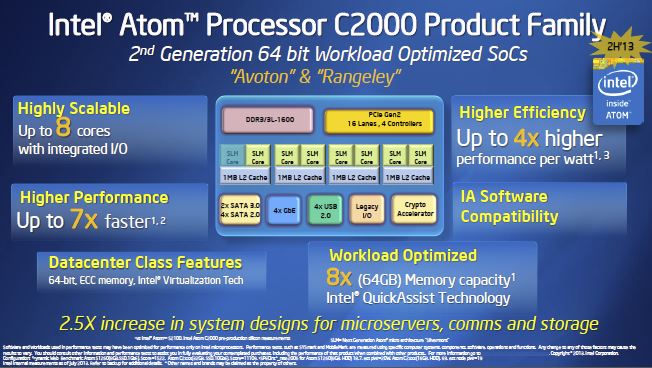

Intel provided its Avoton and Rangeley update, and it looks like there are some major performance gains in the next generation.

Intel is working to change this. Avoton is going to have up to eight cores (the current maximum is 2C/4T) and will further integrate components. We already saw the Atom S1260 move in this direction, in line with what many ARM designs are doing, and Avoton and its successors are going to continue that way. It makes a lot of sense. For example, some tasks are much faster on dedicated hardware, as we saw previously with QuickSync and AES-NI. In the lower power market, a lower power x86 core with dedicated accelerators can make a significant performance/watt difference.

Another major advantage is cost. Integrating components on chip lowers the cost of platforms by removing the need for additional ICs. For example, with Haswell we saw much of the power delivery hardware move on-die, leading to much cleaner CPU areas.

Intel Custom Chips

One item picked up by the news is that Intel designs custom chips for Facebook and eBay. This should not be a surprise. We know Intel has been dabbling in this: the Intel Xeon L5639 chips (previous-generation parts) that are hitting eBay these days are from a former Facebook data center, and even in the consumer space Intel made chips available to Apple that other manufacturers did not get. Custom designs are a natural part of that process.

I would have been very interested to see the Haswell-EP results. Major cloud players such as Google have already been testing A0 rev silicon in data centers for some time now, even though Haswell-EP will be next year’s server release.

One very important factor to remember when we hear about the ARM players entering the market is that they are NOT competing with Intel’s current generation of public silicon. Intel has been shipping Ivy Bridge-EP chips to select customers for many months now. The big players are also, coincidentally, the players looking at ARM. Giving these players advance access to volume shipments allows Intel to keep these big cloud providers one step ahead of the rest of the industry.

Intel’s Bet to Beat ARM Servers

Intel is betting on the cloud completely obviating the need for low-end servers. That is an important step for the company because it is trying to find a way to use big-iron processors to combat ARM at the low end.

If Intel can produce an equal or better x86 competitor and have that same infrastructure span multiple types of workloads, then Intel may win the cloud. If low-end dedicated servers find fewer applications due to the cloud alternative, and vendors pick the next-gen Intel Atom processors, then ARM’s target market shrinks considerably. It is an interesting strategy to combat the phenomenon described in The Innovator’s Dilemma.

Software Defined Everything

The other key takeaway from today’s event is software defined infrastructure. As someone who covers the storage industry quite often, I do not find the idea of x86-based software defined storage foreign. I would argue that the fact that one can look inside EMC, NetApp, IBM, Hitachi and Dell storage systems and see Intel “inside” means x86 has already won the storage side of things. Now the question is simply how redundancy can be provided, how provisioning can be made easier and how hot data can move to fast tiers.

On this topic, software defined storage is already here. EMC’s ViPR and NetApp’s OnCommand are two good examples of even traditional pure storage players moving in this direction and reclassifying dollars into the SDS category. At the end of the day, x86 has the performance to continue driving this type of workload.

With regard to computing, I think we are getting there. One area where this can be, and likely will be, improved upon is instance scaling. ServeTheHome was regularly outgrowing its Amazon EC2 instances prior to moving to our colocation, and growing an instance was not as simple as it should have been. Still, with many public and private clouds in existence today and everyone from the OpenStack consortium to VMware working on raising machine utilization and instance performance, this is happening.

On the software defined networking side, ServeTheHome’s colocation utilizes a pair of Intel Xeon-based pfSense firewalls. Many intelligent network appliances incorporate x86, although this is an area where low latency does drive custom ASIC design.