About two and a half months ago, we covered the Xilinx Versal AI Edge launch. At Hot Chips 33, the company is discussing the AI inference capabilities of these chips. We are covering this talk live, so please excuse any typos.

7nm AI Edge Inference Chips at Hot Chips 33

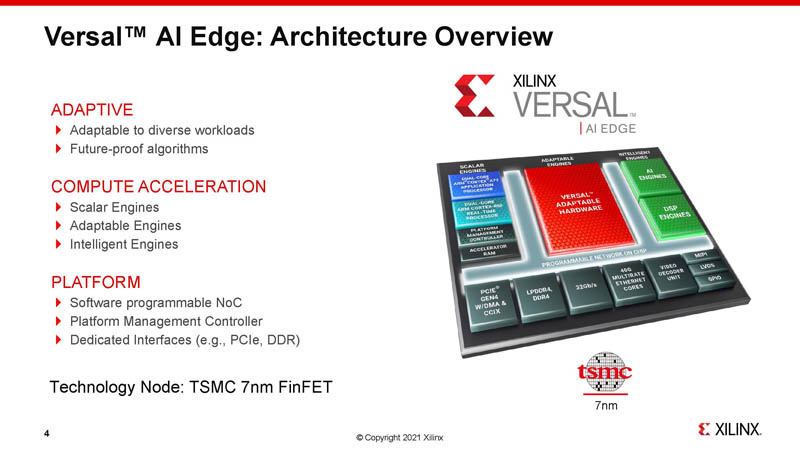

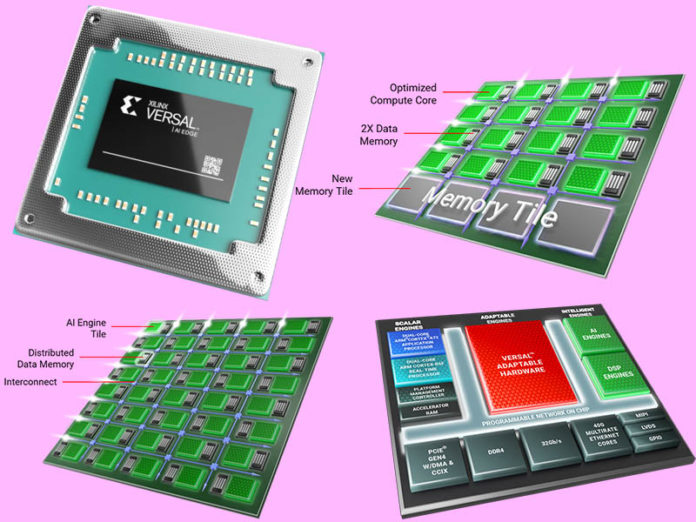

Here is the new 7nm Xilinx Versal AI Edge slide that shows some of the key features. The talk is mostly focused on the AIE-ML for inference. As a result, instead of

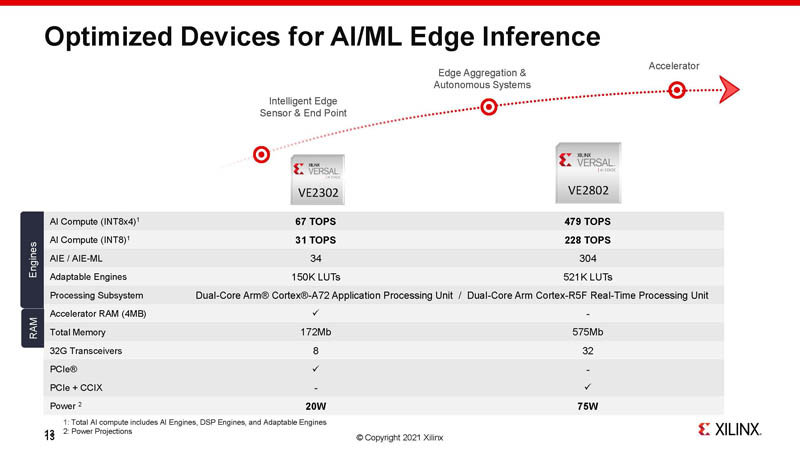

Xilinx said it is launching two new chips today, although both were already part of the Xilinx Versal AI Edge launch around two months ago, alongside a broader family. The two parts target different applications. The VE2302, for example, is a lower-power part, so it does not get the Video Decode unit, but it does get 4MB of accelerator RAM. In the higher-power device, we would expect more RAM off-chip.

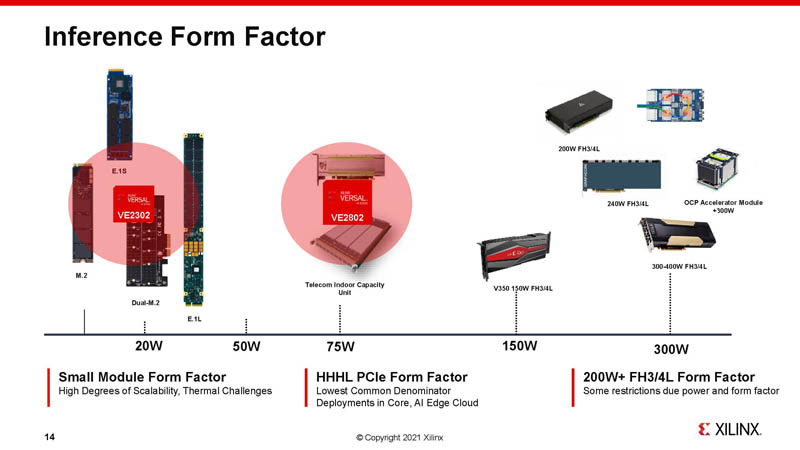

These chips target the lower-power segment, up to the 75W range. Note that the E1 EDSFF form factor is specifically called out here.

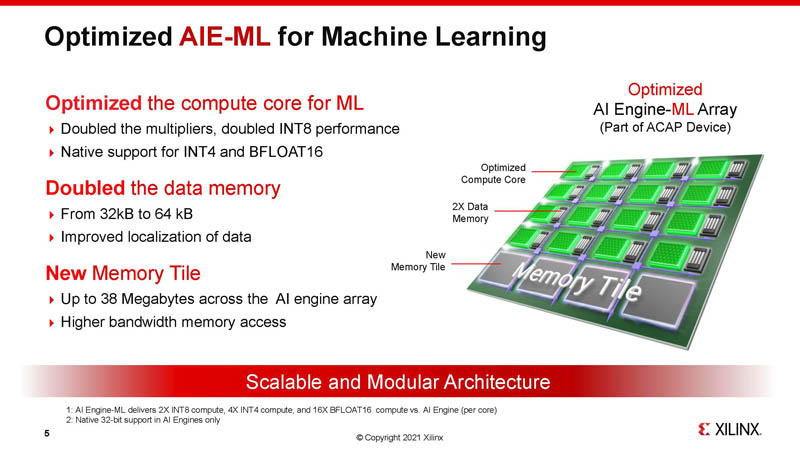

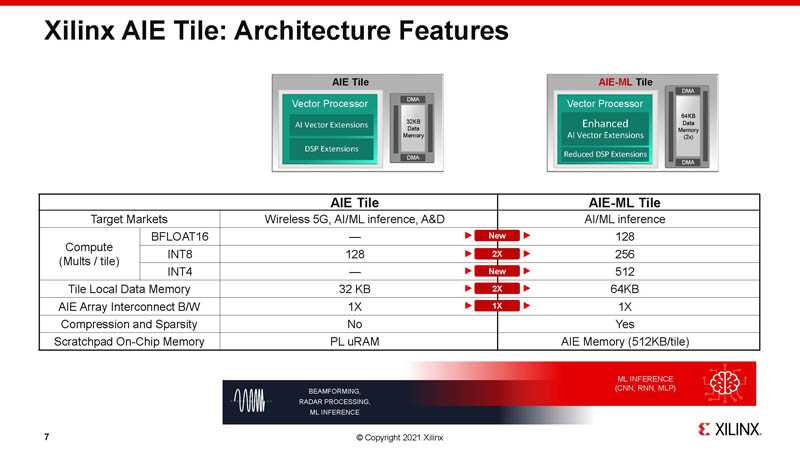

With the new 7nm generation, the AIE-ML cores get more performance and add support for INT4 and bfloat16. These accelerators do not support FP16. Local data memory per tile was doubled to 64KB, and a new Memory Tile was added to increase the amount of local memory in the array.
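For readers less familiar with these data types, here is a minimal Python sketch of what bfloat16 and INT4 look like at the bit level. It is a generic illustration of the formats, not Xilinx's implementation: the function names are hypothetical, bfloat16 rounding is assumed to be round-to-nearest-even, NaN handling is omitted, and the nibble order used for INT4 packing is an arbitrary choice.

```python
import struct


def fp32_to_bf16_bits(x: float) -> int:
    """Round an FP32 value to bfloat16 (round-to-nearest-even); returns the 16-bit pattern.

    bfloat16 keeps FP32's sign bit and 8-bit exponent but only the top 7 mantissa bits.
    NaN inputs are not handled specially in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add a bias so that dropping the low 16 bits rounds to nearest, ties to even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_fp32(b: int) -> float:
    """Expand a bfloat16 bit pattern back to FP32 by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


def pack_int4(values: list) -> bytes:
    """Pack signed 4-bit integers (range -8..7) two per byte, low nibble first (assumed order)."""
    vals = list(values)
    if len(vals) % 2:
        vals.append(0)  # pad to an even count
    out = bytearray()
    for lo, hi in zip(vals[0::2], vals[1::2]):
        for v in (lo, hi):
            if not -8 <= v <= 7:
                raise ValueError(f"{v} does not fit in INT4")
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def unpack_int4(data: bytes, count: int) -> list:
    """Unpack `count` signed 4-bit integers packed by pack_int4."""
    vals = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            vals.append(nibble - 16 if nibble >= 8 else nibble)  # sign-extend
    return vals[:count]
```

The practical point for a fixed-size local memory is density: a 64KB buffer holds 32,768 bfloat16 values, 65,536 INT8 values, or 131,072 packed INT4 values.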

Here is another look at how big the improvements are.

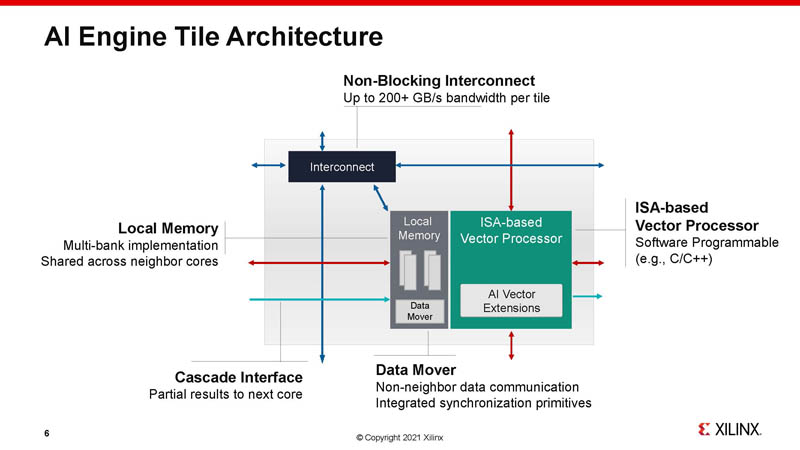

This is the tile architecture with the interconnect as well as the vector processor and the local memory.

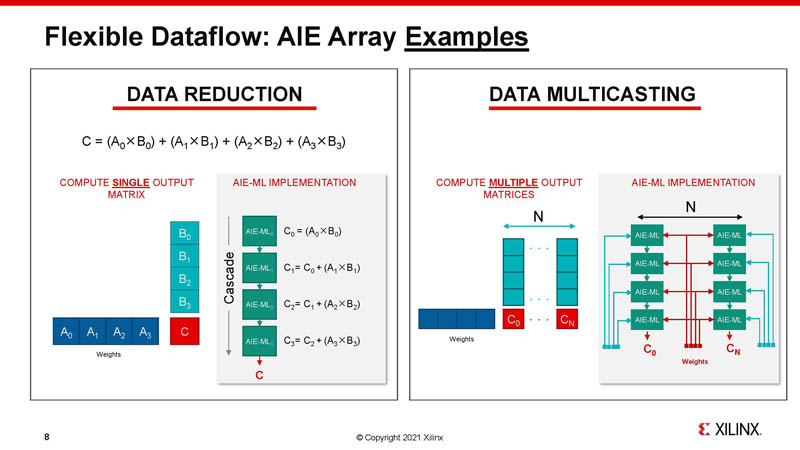

Flexible data flow within the AIE-ML array allows for things like data reduction and multi-casting data.
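To make the two data flow patterns concrete, here is a toy Python sketch that splits a matrix-vector product across a hypothetical number of tiles in two ways. With multicast, every tile owns a block of rows and receives the same input vector. With reduction, each tile owns a slice of the columns and the partial results are summed. The tile model and function names are illustrative assumptions, not the AIE-ML programming interface.

```python
from typing import List

Matrix = List[List[int]]
Vector = List[int]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product (what a single tile would compute on its share)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def multicast_matvec(w: Matrix, x: Vector, n_tiles: int) -> Vector:
    """Row split: each hypothetical tile holds a block of rows; x is multicast to all tiles."""
    rows_per_tile = -(-len(w) // n_tiles)  # ceiling division
    out: Vector = []
    for t in range(n_tiles):
        tile_rows = w[t * rows_per_tile:(t + 1) * rows_per_tile]
        out.extend(matvec(tile_rows, x))  # every tile receives the full input vector
    return out


def reduction_matvec(w: Matrix, x: Vector, n_tiles: int) -> Vector:
    """Column split: each tile sees a slice of x; partial sums are reduced across tiles."""
    k = len(x)
    cols_per_tile = -(-k // n_tiles)
    result = [0] * len(w)
    for t in range(n_tiles):
        lo, hi = t * cols_per_tile, min((t + 1) * cols_per_tile, k)
        partial = matvec([row[lo:hi] for row in w], x[lo:hi])
        result = [r + p for r, p in zip(result, partial)]  # reduction step
    return result
```

Both splits produce the same answer as an unsplit `matvec`; the difference is which data has to be copied to many tiles (multicast) versus combined from many tiles (reduction).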

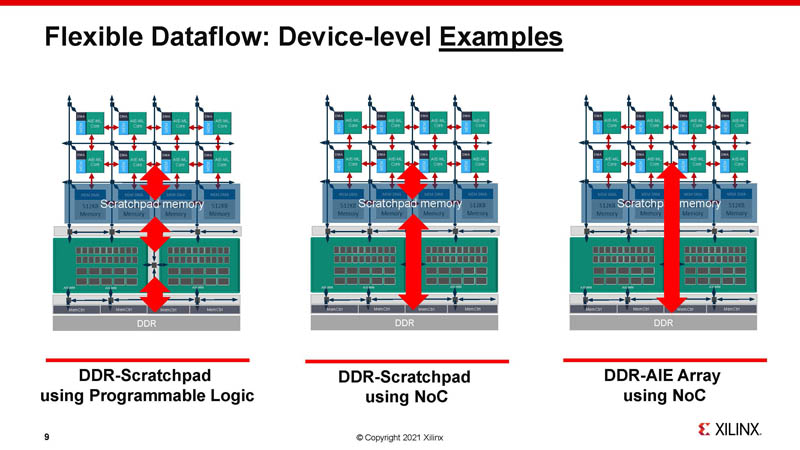

Data can also move through the rest of the SoC: beyond the scratchpad memory, there is DDR as well as all of the programmable logic on the FPGA. The real goal is to build a programmable pipeline that uses these other SoC resources.

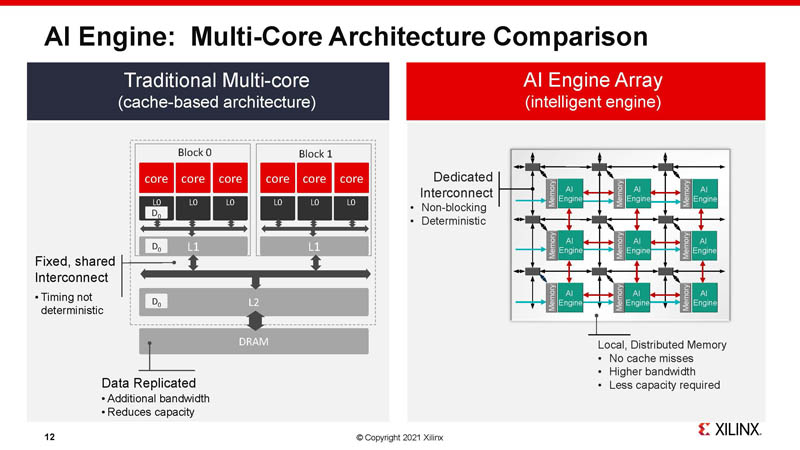

Xilinx compared the AIE-ML array to multi-core CPUs, pointing out differences such as the lack of a traditional cache in the complex.
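Without a cache, software has to decide explicitly when data lands in local memory. A common generic technique for that is ping-pong (double) buffering, sketched below in Python. This is an assumption about how such memory is typically used, not a description of Xilinx's tooling, and the function name is hypothetical. In hardware, the copy into the idle buffer would overlap with compute on the active one; this sequential sketch only shows the buffer rotation.

```python
from typing import Callable, List


def double_buffered_process(chunks: List[List[int]],
                            compute: Callable[[List[int]], int]) -> List[int]:
    """Process a stream of chunks using two explicitly managed local buffers (ping-pong).

    There is no cache: the code itself copies ("DMAs") each chunk into a buffer
    before computing on it, alternating between buffer 0 and buffer 1.
    """
    if not chunks:
        return []
    buffers: List[List[int]] = [list(chunks[0]), []]  # preload the first chunk into buffer 0
    results: List[int] = []
    for i in range(len(chunks)):
        cur, nxt = i % 2, 1 - i % 2
        if i + 1 < len(chunks):
            buffers[nxt] = list(chunks[i + 1])  # prefetch the next chunk into the idle buffer
        results.append(compute(buffers[cur]))  # compute on the active buffer
    return results
```

For example, `double_buffered_process([[1, 2], [3, 4], [5]], sum)` processes each chunk from alternating buffers and returns one result per chunk.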

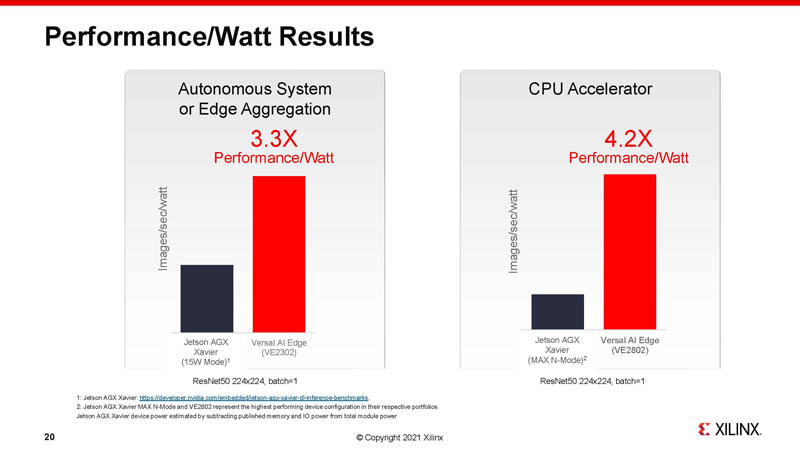

This one was a bit strange. Xilinx originally stated that the VE2802 was competitive power-wise with the 70-75W NVIDIA T4, yet here it is being compared to the AGX Xavier, which we saw in our Advantech MIC-730AI Review.

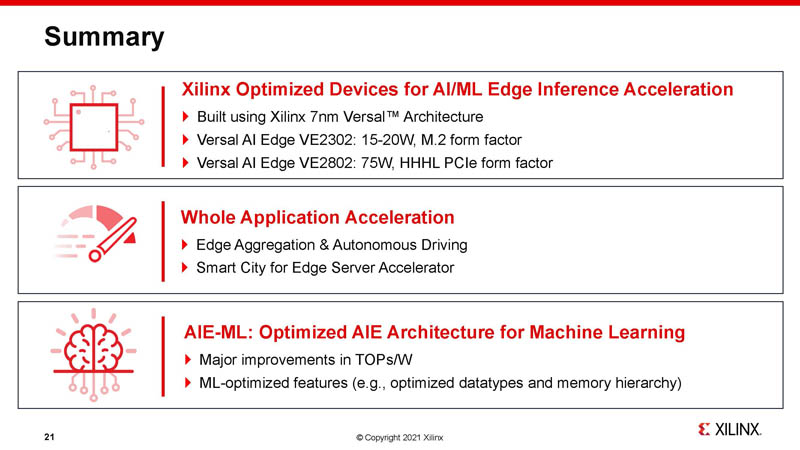

Here is a quick summary of this presentation:

As we would expect, these chips will have a Xilinx software toolchain behind them, including Vitis and Vitis AI.

Final Words

Overall, we still hope to see these hit the market sooner rather than later. NVIDIA also needs to update its Jetson line at some point, as there is going to be more pressure on this market segment. Hopefully, the company does so in the next few months, even though we did not get a Hot Chips talk on it this year. We expect to see the impact of these next-generation Xilinx 7nm FPGAs with AIE-ML accelerators more in 2022 than this year.

According to the Jetson roadmap on NVIDIA's website, Orin-based hardware replacing the AGX Xavier, Xavier NX, and TX2 will be released in 2022. Replacements for the TX2 NX and the Nano will not be released until 2023.