Market Impact and Looking Ahead

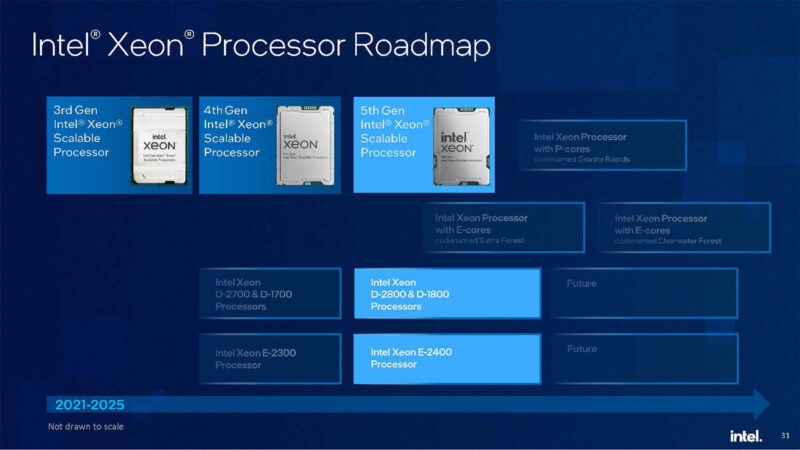

At this point, a few things are reasonably clear. Intel does not have the core count to go toe-to-toe with AMD in this generation. Instead, it has a roadmap for that. In six months or so, Sierra Forest will start that journey toward high-core-count parts and reset the market’s expectations for core counts.

A big part of today’s announcement is that Intel has more cores than in the previous generation, but the high-core-count story is the 288-core Sierra Forest arriving in about six months, so there is a clear risk of Osborne’ing the new parts. I asked Pat Gelsinger about this. His basic response was that a lot of organizations are buying 4th Gen Sapphire Rapids today, and the 5th Gen Emerald Rapids is a drop-in, easy replacement. That makes a lot of sense, especially since a large volume of the non-hyperscale market is still buying 32 cores or 16 cores per socket to align with VMware and Microsoft licensing. While more cores are great for running Nginx web servers, Redis servers, and so forth in Linux containers, for licensed software, fewer cores with more performance per core are the way to go.
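To make the licensing argument concrete, here is a minimal sketch of how a per-core license fee shifts the math. Every number in it is hypothetical and chosen only for illustration: the hardware prices, the per-core fee, and the relative per-core performance are not from Intel, VMware, or Microsoft. Real licensing terms also have minimums and tiers that this simplified model ignores.

```python
# Simplified per-core licensing model. ALL figures are hypothetical.

def cost_per_unit_of_work(cores, perf_per_core, hw_cost, license_per_core):
    """Total cost (hardware + per-core licenses) divided by total throughput."""
    total_cost = hw_cost + cores * license_per_core
    total_work = cores * perf_per_core
    return total_cost / total_work


# Hypothetical socket with fewer, faster cores vs. one with more, slower cores.
few_fast = dict(cores=32, perf_per_core=1.3, hw_cost=6000)
many_slow = dict(cores=64, perf_per_core=1.0, hw_cost=8000)

if __name__ == "__main__":
    for fee in (0, 1000):  # free software vs. a hypothetical per-core fee
        a = cost_per_unit_of_work(license_per_core=fee, **few_fast)
        b = cost_per_unit_of_work(license_per_core=fee, **many_slow)
        print(f"license ${fee}/core: few-fast={a:.0f}, many-slow={b:.0f}")
```

In this toy model, the higher-core-count part wins on cost per unit of work when the software is free. Once a per-core fee is added, the part with fewer, faster cores comes out ahead because the license bill scales with core count rather than with performance.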

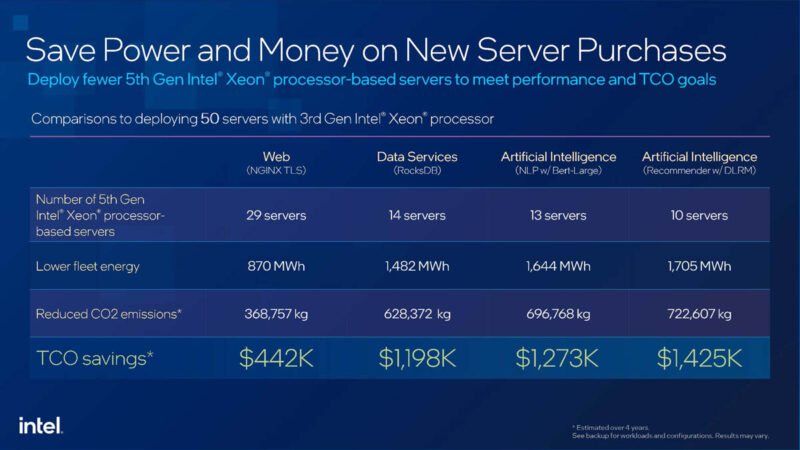

Since it takes some time for organizations to change generations of CPUs, Intel had some fun numbers comparing 3rd Gen and 5th Gen servers. The key to all of these numbers is that they are using some sort of acceleration. For example, the nginx TLS result is a QuickAssist workload. QAT is only active on half of the new SKUs. Likewise, RocksDB is using Intel IAA, which is absent from over a third of the SKU stack. The big AI gains are from Intel AMX, which is present on all SKUs. So keep in mind that in its marketing materials for non-AI cases, Intel is showing best-case numbers using features that a large part of its SKU stack does not have.

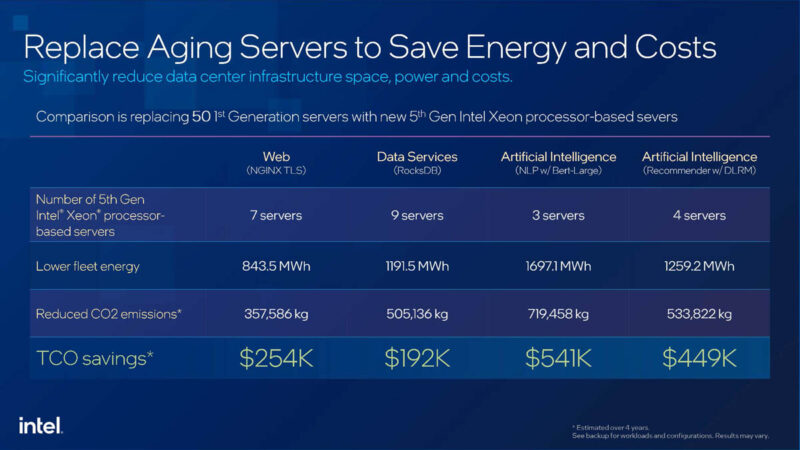

Here are the TCO numbers for replacing 2017-2019 era (5-6 year refresh cycle) servers with the new generation. While many of the same principles apply, the 3rd Gen comparison assumes deploying all-new hardware, while the one below is a rip-and-replace delta.

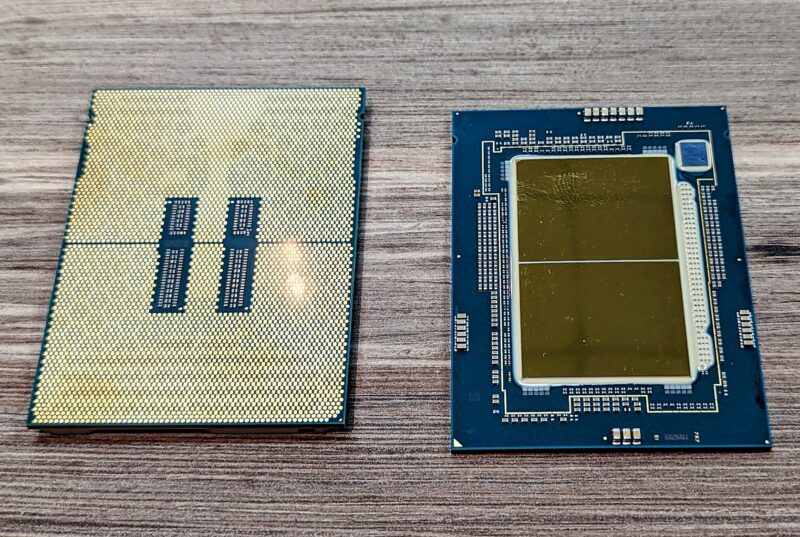

That comparison to 1st and 2nd Gen servers is something we are going to dive into more shortly. To many, a 2nd Gen server that was still top of the line at the start of Q2 2021 and a 5th Gen server will look a lot alike. We have two Supermicro servers that look almost identical, save that on the newer server we only had eight drive bays populated with trays. Many could walk around the data center and assume they are the same.

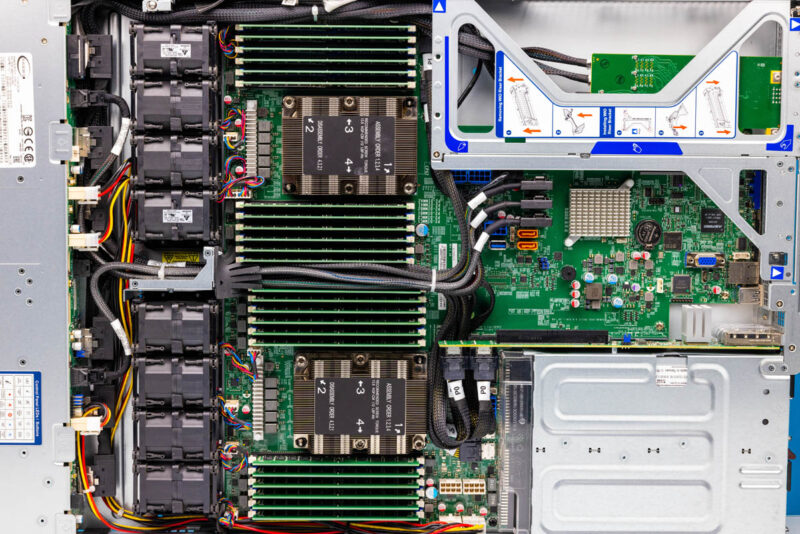

Looking inside, though, they are massively different. Here is the X11 generation server that scales to 56 cores, 24 DDR4 DIMMs, and 96 lanes of PCIe Gen3 max.

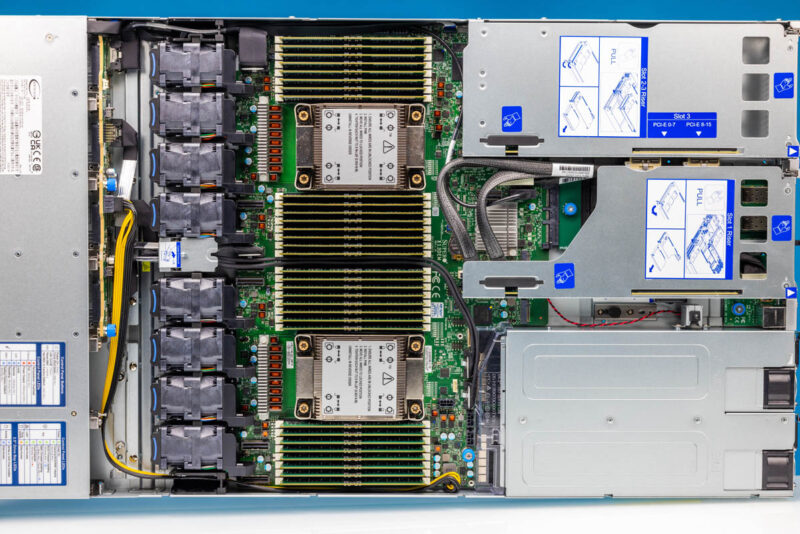

Taking a look at the more modern X13 system, we now have up to 128 cores (~2.3x as many), 32 DDR5 DIMMs (1.33x the number, but potentially 4x the capacity and 2.5x the bandwidth), and 160 lanes of PCIe Gen5 (~1.7x the lanes, ~6.7x the throughput). The server is also more modern with OCP NIC 3.0 and the ability to support CXL Type-3 memory expansion devices in addition to the onboard accelerators.
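Those ratios can be checked with quick arithmetic. The following is a minimal sketch rather than a description of how the figures were originally derived. The core, DIMM, and lane counts come from the two systems described above, and the per-lane PCIe rates (8 GT/s for Gen3, 32 GT/s for Gen5) are the standard signaling rates. The memory channel counts and speeds (12 channels of DDR4-2933 versus 16 channels of DDR5-5600 across two sockets, at peak rated speed) are assumptions made here for illustration.

```python
# Generational ratios between the two dual-socket systems described above.
# Core, DIMM, and lane counts are from the text. Memory channel counts and
# speeds are ASSUMPTIONS for illustration (peak rated speeds, two sockets).

OLD = {"cores": 56, "dimms": 24, "pcie_lanes": 96, "pcie_gt_per_lane": 8,
       "mem_channels": 12, "mem_mt_s": 2933}   # X11: PCIe Gen3, assumed DDR4-2933
NEW = {"cores": 128, "dimms": 32, "pcie_lanes": 160, "pcie_gt_per_lane": 32,
       "mem_channels": 16, "mem_mt_s": 5600}   # X13: PCIe Gen5, assumed DDR5-5600


def ratio(key):
    """New-to-old ratio for a single spec."""
    return NEW[key] / OLD[key]


def generational_ratios():
    lanes = ratio("pcie_lanes")
    return {
        "cores": ratio("cores"),
        "dimm_slots": ratio("dimms"),
        "pcie_lanes": lanes,
        # Throughput scales with lane count times the per-lane signaling rate.
        "pcie_throughput": lanes * ratio("pcie_gt_per_lane"),
        # Bandwidth scales with channel count times the transfer rate.
        "memory_bandwidth": ratio("mem_channels") * ratio("mem_mt_s"),
    }


if __name__ == "__main__":
    for name, value in generational_ratios().items():
        print(f"{name}: {value:.2f}x")
```

Under these assumptions, the PCIe throughput figure is simply the lane ratio multiplied by the 4x jump in per-lane rate from Gen3 to Gen5, and the memory bandwidth figure lands near 2.5x. Real-world memory speeds with two DIMMs per channel are typically lower than the peak rated speeds assumed here.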

While they may look the same, that is a wild server improvement in 10 quarters. We are also using a newer Supermicro X11 design that is more modern than what the platform looked like at its launch.

That onboard acceleration is really interesting as well. Earlier this month, I had the opportunity to hang out at Netflix and ask about a paper they presented on performance optimization. We will have more of that next year. At the same time, the folks at Netflix explained how they actually use CPUs for AI/ML. They have portions of their transcoding pipeline that optimize lower-resolution and lower-quality transcodes from the high-quality masters. These lower-quality transcodes are designed to save on space and bandwidth with the understanding that, in many cases, on-device (e.g., phones) upscaling will occur. Netflix developed a system to optimize the transcode quality and does ML inference on CPUs.

I asked why, and the answer was that it is less costly for them to do that part of the workload in AWS on the CPU instances they already have than to pay more for GPUs or other accelerators. Many have heard Intel’s AI on CPU story and dismissed it, given the dominance of NVIDIA on the GPU side. In practice, workloads that are mostly another function but have some AI element are great use cases for CPU-based AI. Perhaps the problem is just comparisons like the ones above, where Intel shows AI-only workloads running on CPUs with huge gains from AMX instead of a more realistic mixed-use case. The bigger point is that AI is a real-world CPU use case.

Final Words

When looking at the Intel Xeon Emerald Rapids CPUs, Intel has some undoubtedly awesome technology. For that sweet spot of the market that does not buy top-bin CPUs, the built-in acceleration can give more performance per core that is well beyond a simple 10-15% generational improvement. Likewise, we get support for new classes of devices, such as CXL Type-3 devices, allowing Intel platforms to expand memory capacity and bandwidth beyond what was available in even the new 4th Gen Xeon platform launched earlier this year.

The part that is a complete bummer in the new parts is how the SKU stack is created. Intel would have a completely different story if it enabled acceleration for things like QuickAssist on every SKU. Instead, the SKU stack is almost a Yahtzee game of specs. Some SKUs have accelerators, and some do not. The only way to get a full set of QuickAssist, IAA, DLB, and DSA accelerators (four each) is to buy the Intel Xeon Platinum 8571N, a single-socket part. Even the Intel Xeon Platinum 8592+ at $11,600 only has one-quarter of the potential acceleration provisioned. Some Xeon Platinum “high-end” SKUs do not even support DDR5-5600. Intel is holding back its biggest differentiators, the ones it bases many of its performance claims on, from huge portions of the customer base. This made sense in an era of no Xeon competition. These days, when Intel has half the core count of AMD EPYC per socket, it needs to re-align its methodology for designing SKUs.

With all of that said, perhaps the coolest aspect of the new release is the fact that we had two Xeon P-core CPU releases this year. After using the new parts, it is clear why one would buy 5th Gen over 4th Gen Xeon, and perhaps that is the point. The drop-in upgrade is great.