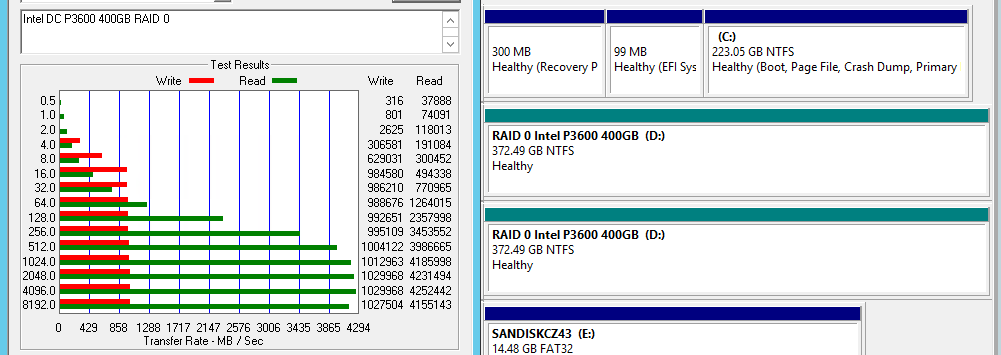

Recently we embarked on an ambitious project: adding 2.5″ SFF NVMe drives to systems which previously lacked support. The benefits are fairly obvious. For example, during testing we managed to get a low cost <1A @ 120V system to achieve read speeds in excess of 4.5GB/s, even when configured with 128GB of RAM and 2x 10GbE NICs. Adding a single NVMe drive lowers potential points of failure while achieving performance often rivaling 6-8 Intel DC S3700 SATA drives. Add multiple drives and the advantages multiply. We have already seen solutions start to hit the marketplace using MLC/ TLC SATA drives as hard drive replacements in three tier storage solutions (RAM, NVMe, SATA SSD). NVMe is happening and as of 2015 it is now affordable. The flip side is that it is still extremely difficult to add 2.5″ drives into a system.

Why bother with 2.5″ SFF NVMe drives?

The move to the 2.5″ SFF form factor provides a number of benefits over traditional add-in cards (AICs). For example:

- Many 2.5″ SFF NVMe drives are hot-swappable, much like traditional SAS drives

- One can potentially fit more drives in a single chassis. AIC devices are normally PCIe x4 so using them in a system with PCIe x8 slots means one is only able to use half of the available lanes with the AIC. With 2.5″ NVMe, one can potentially use more drives in a given system

- They are the future. There are expectations of PCIe switch based architectures that allow multi-host topologies and drive more PCIe lanes. The smaller 2.5″ SFF form factor allows more drives to fit into a system
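The lane argument in the list above is simple arithmetic, and a minimal sketch makes it concrete. The function name and parameters here are hypothetical, chosen only for illustration, and the sketch assumes the slot can actually address every attached device (which, as discussed later, is not a given).

```python
def lane_utilization(slot_lanes: int, device_lanes: int, devices: int = 1) -> float:
    """Fraction of a slot's PCIe lanes used by the attached devices.

    Illustrative helper, not part of any vendor tool. Assumes the slot
    can reach every attached device.
    """
    if slot_lanes <= 0 or device_lanes <= 0 or devices < 0:
        raise ValueError("lane counts must be positive and devices non-negative")
    used = min(slot_lanes, device_lanes * devices)
    return used / slot_lanes


# A single x4 add-in card in an x8 slot leaves half of the lanes idle.
print(lane_utilization(slot_lanes=8, device_lanes=4))             # 0.5
# Two x4 2.5" drives cabled to the same x8 slot use all of them.
print(lane_utilization(slot_lanes=8, device_lanes=4, devices=2))  # 1.0
```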

We are seeing more servers ship with built-in 2.5″ NVMe slots, and these will become more prevalent with the launch of Broadwell-EP, followed by Skylake-EP and successive generations. With more slots available, we expect the device ecosystem to pick up and more 2.5″ devices to become available.

Four Potential Solutions

The four solutions we are looking at today are:

- ASUS Hyper Kit ($22 street price)

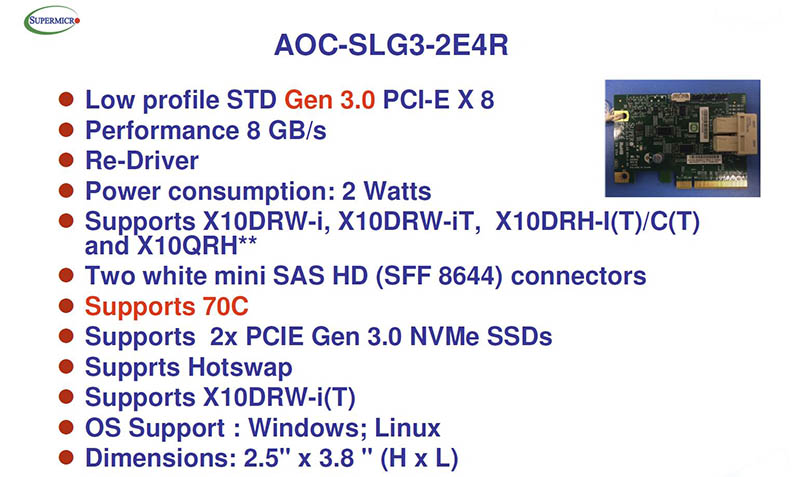

- Supermicro AOC-SLG3-2E4R ($149 street price)

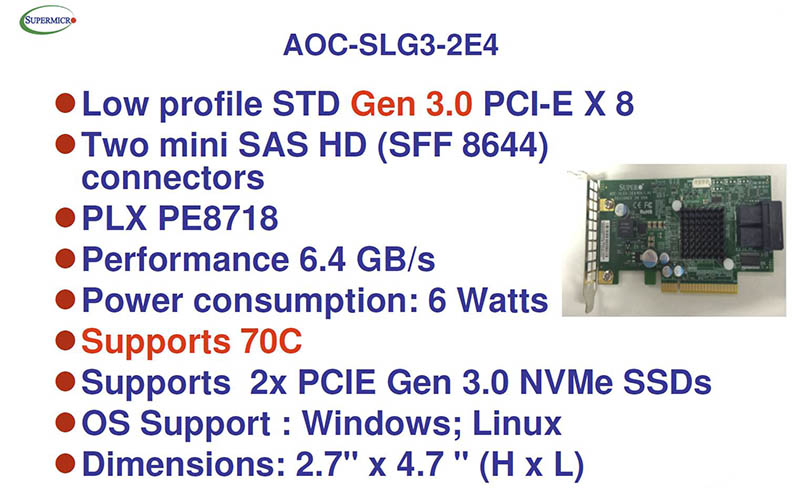

- Supermicro AOC-SLG3-2E4 ($249 street price)

- Intel A2U44X25NVMEDK ($500 street price)

While pricing varies significantly, two of our solutions are intended to be used in a wide variety of systems while the other two are focused more on specific sets of systems.

Supermicro AOC Experiences

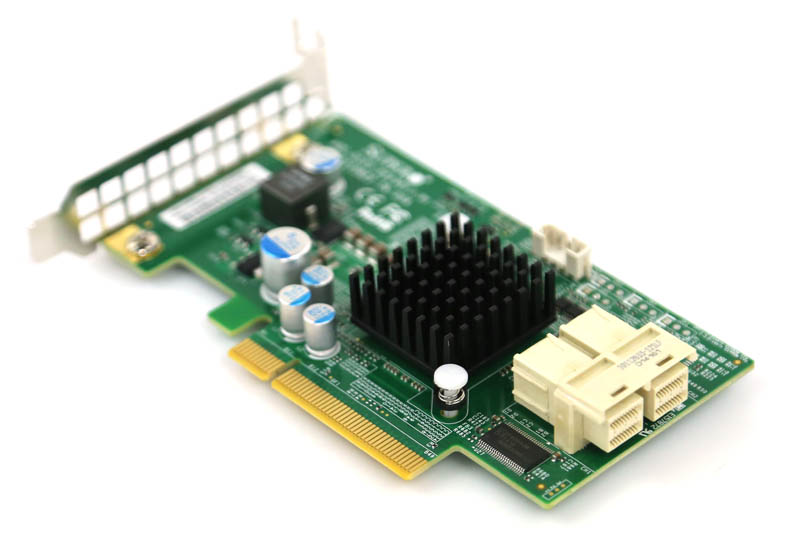

We tested two Supermicro cards: the AOC-SLG3-2E4R and the AOC-SLG3-2E4. The “R” version is a smaller, lower power model because it does not have the PLX switch chip. As a result, it was the first version we tested.

The Supermicro AOC-SLG3-2E4R was our first attempt at adding NVMe to existing systems. We lined up the card along with a cable from the Intel 750 400GB 2.5″ SSD kit and a host of 2.5″ drives and started testing.

What we found, and shared in an earlier post, is that this card does not have an Avago/ PLX switch chip and therefore only works, or only works with two drives, in a select few systems (see below).

After a few days of testing, we found that the card that costs $100 more, the Supermicro AOC-SLG3-2E4, has a PLX chip and much wider compatibility.

Put simply, this is because many motherboards are not able to support two PCIe devices in the same physical slot.
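To make the compatibility difference concrete, here is a deliberately simplified sketch of the behavior we observed with the two-port cards. The function and parameter names are hypothetical, and the rule it encodes is only a rough summary of our testing, not a statement of how any particular motherboard firmware behaves.

```python
def usable_drives(drives_attached: int,
                  board_supports_two_devices_per_slot: bool,
                  card_has_switch: bool) -> int:
    """Rough upper bound on the drives a host can use on a two-port carrier card.

    Simplified model based on our testing:
    - a card with a PCIe switch presents the slot with a single device and
      fans out to the drives itself, so both ports are usable;
    - a card without a switch relies on the motherboard accepting two PCIe
      devices in one physical slot;
    - otherwise, at best one drive shows up (and on some boards, none).
    """
    if drives_attached < 0:
        raise ValueError("drives_attached must be non-negative")
    drives_attached = min(drives_attached, 2)  # both cards have two ports
    if card_has_switch or board_supports_two_devices_per_slot:
        return drives_attached
    return min(drives_attached, 1)


# Switchless card in a board that is not on the compatibility list
print(usable_drives(2, board_supports_two_devices_per_slot=False, card_has_switch=False))  # 1
# Card with a switch in the same board
print(usable_drives(2, board_supports_two_devices_per_slot=False, card_has_switch=True))   # 2
```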

With the addition of the Avago/ PLX PEX8718 PCIe switch chip, we were able to connect two NVMe drives even on our diminutive mITX Supermicro X10SDV-TLN4F platform and achieve something awesome. We were quickly breaking 4.2GB/s read speeds with two $600 Intel DC P3600 SSDs, all running under 120W!

It was not long ago that 4.2GB/s sequential read speeds would have required a fairly expensive SAN/ NAS running at many times the power this system was using.
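For a sense of scale, the theoretical PCIe 3.0 link ceilings can be worked out from the per-lane signalling rate (8 GT/s) and the 128b/130b line encoding. The sketch below ignores packet and protocol overhead, so real-world throughput will be lower; the function name is just for illustration.

```python
PCIE3_GT_PER_S = 8.0        # PCIe 3.0 signalling rate per lane, in GT/s
PCIE3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s, before protocol overhead."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes / 8  # 8 bits per byte


for lanes in (4, 8):
    print(f"x{lanes}: {pcie3_bandwidth_gb_s(lanes):.2f} GB/s")
# x4: 3.94 GB/s
# x8: 7.88 GB/s
```

A 4.2GB/s read figure is above what a single x4 link can carry in theory, so both drives had to be contributing, and it still sits comfortably under the x8 ceiling of the slot.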

While we were able to get single drives working using the Supermicro AOC-SLG3-2E4R on some motherboards, two drives caused issues on unsupported systems.

Bottom line: if you do not have a motherboard on the compatibility list, we would suggest getting the Supermicro AOC-SLG3-2E4 (also known as the “non-R version”). This is the best option we have found for adding 2x PCIe 3.0 x4 drives to an existing PCIe x8 or x16 slot.

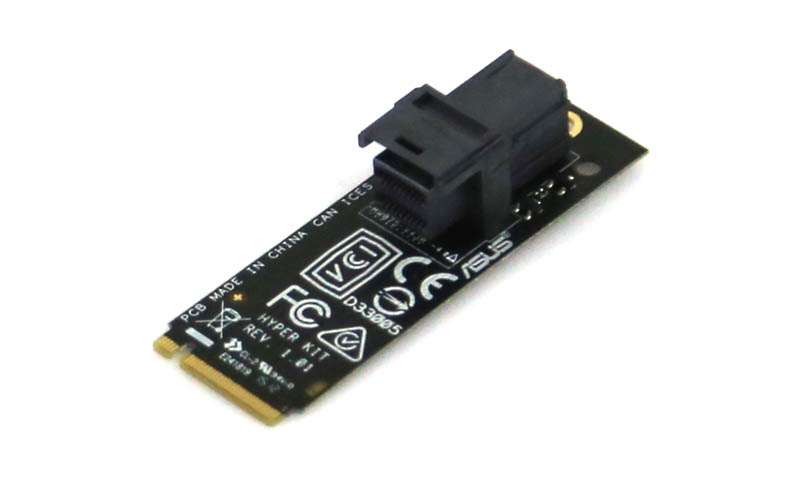

ASUS Hyper Kit

When the M.2 slot was introduced, many folks were excited by the prospect of having PCIe 3.0 x4 based SSDs in “gumstick” form factors. The ASUS Hyper Kit essentially converts the standard M.2 PCIe 3.0 x4 slot into an SFF-8643 connector for PCIe SSD cabling.

Here is an example of the ASUS Hyper Kit installed in an ASUS motherboard.

Luckily, since this is a relatively simple board, we were able to use it in a number of different motherboards with success. Of course, using the Hyper Kit in a non-ASUS motherboard is not something ASUS or other manufacturers will likely support. We tried this in the Supermicro X10SDV-TLN4F test platform we were using and found one caveat. The Supermicro board does not support all of the potential M.2 sizes, specifically the 2260 (60mm) size of the Hyper Kit. We did get this to work just to test, using a bit of creativity and electrical tape. It is not something we would recommend trying.

Bottom line: if you want to use a single drive, this is the lowest power/ least expensive option right now.

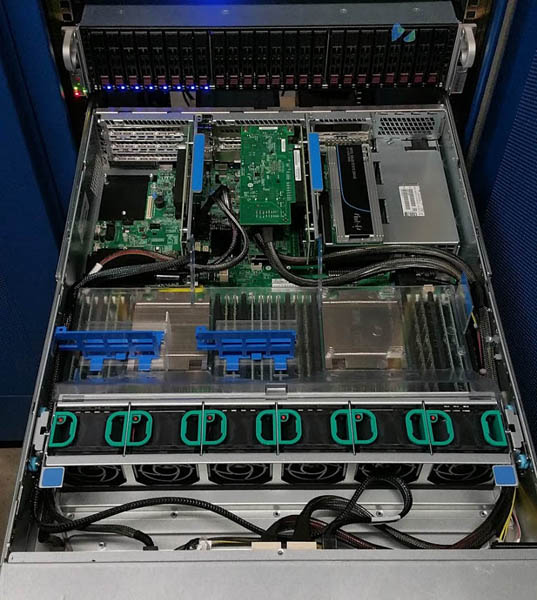

Intel A2U44X25NVMEDK

By far the priciest and most complete kit we found was the Intel A2U44X25NVMEDK. This kit allows one to upgrade an Intel 2U R2208WT series chassis (check Intel's compatibility list for supported chassis) with an extra hot swap cage. The kit itself is complex. The main parts are:

- New chassis specific riser that converts riser board 2 to a 1x 8 lane and 1x 16 lane PCIe slot configuration


- PCIe 3.0 x16 slot to 4x U.2 connector NVMe cable

- 2x dual SFF-8643 to dual SFF-8643 cables

- NVMe/ SAS 8-bay hot swap cage w/ 4x NVMe trays and 4x SAS only trays

To understand what is going on, it is important to remember that the SFF-8639 / U.2 connector actually has pins for data from both SAS and PCIe sources. As a result, the backplane can have SAS in all slots or can use up to 4 NVMe drives while still supporting 4 SAS drives.
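The bay rules described above can be expressed as a small layout check. This is only a sketch: the bay numbering is an assumption made for illustration (we treat bays 0-3 as the NVMe-capable ones), and the function name is hypothetical rather than anything from Intel's tooling.

```python
TOTAL_BAYS = 8
# Assumption for illustration: bays 0-3 are the four NVMe-capable bays.
NVME_CAPABLE_BAYS = frozenset(range(4))


def validate_layout(layout: dict) -> list:
    """Check a {bay_number: "nvme" | "sas"} layout against the cage's rules.

    Returns a list of human-readable problems; an empty list means the
    layout fits the 4x NVMe-or-SAS plus 4x SAS-only arrangement.
    """
    problems = []
    for bay, kind in sorted(layout.items()):
        if not 0 <= bay < TOTAL_BAYS:
            problems.append(f"bay {bay}: no such bay")
        elif kind not in ("nvme", "sas"):
            problems.append(f"bay {bay}: unknown drive type {kind!r}")
        elif kind == "nvme" and bay not in NVME_CAPABLE_BAYS:
            problems.append(f"bay {bay}: SAS-only bay cannot take an NVMe drive")
    return problems


# SAS in every slot is fine.
print(validate_layout({bay: "sas" for bay in range(8)}))
# Four NVMe drives plus four SAS drives is fine too.
print(validate_layout({0: "nvme", 1: "nvme", 2: "nvme", 3: "nvme",
                       4: "sas", 5: "sas", 6: "sas", 7: "sas"}))
# An NVMe drive in a SAS-only bay is flagged.
print(validate_layout({5: "nvme"}))
```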

Installation took about 15 minutes, including removing two Fusion-io cards from the server and replacing them with Intel DC P3600 and DC P3700 add-in card SSDs. The installation was very easy (even though I had originally forgotten to install the 4-pin power cable!) and could be completed while the R2208WT server was still on rails.

The kit may seem pricey, but it does handle 4x PCIe drives plus 4x SAS drives. We did try the setup in a non-Intel chassis. There were two issues: the fit of the cage and the operation of the PCIe card. The fit of the hot swap cage was something that could be fixed with a bit of Dremel time. The PCIe card worked somewhat in a standard PCIe x16 slot but only allowed operation of one drive in the systems we used it in. If you do have an R2208WT chassis that is compatible, in only a few minutes you can be NVMe enabled.

Our hope is that someone releases a generic version of this kit, perhaps with a larger PLX switch than is found on the AOC-SLG3-2E4 card. That would allow users to bypass the tough to find U.2 cables and upgrade existing systems easily.

Bottom line: awesome kit, but unless you have one of the specific Intel systems that works with the kit, the PCIe x16 card is unlikely to work in your machine. We hope someone builds a generic version of this kit.

On Cables

To perform testing on these solutions we had to find SFF-8643 to U.2 cables. Despite the fact that 2.5″ drives have been and are widely available, cables are not. The ones we found (and were actually able to place an order for) were selling for upwards of $75 each. That is absolutely ridiculous! Instead we ended up buying two Intel 750 400GB 2.5″ SFF SSDs which cost about $400 but have the cables we need.

The retail packs come with cables and we do have some read intensive workloads that can put these drives to good use. For context, traditional generic SAS cables retail for $20-30/ ea, even with SFF-8643 breakout cables.

Conclusion

The state of adding 2.5″ drive support to existing systems is meager to say the least. There are really two options if you want to add drives to existing systems: the ASUS Hyper Kit ($22 street price) or the Supermicro AOC-SLG3-2E4 ($249 street price). The former carries a low initial purchase price while the latter allows for expanding to significantly higher densities than had been previously possible. While M.2 SSDs in gum stick form factors make excellent client SSDs, the prospect of using higher-end drives in servers that come with both power loss protection and higher write endurance is very exciting. Hopefully we will see more options available soon. For the majority of servers and workstations with few if any M.2 slots, the Supermicro AOC-SLG3-2E4 is the card to get. Be very careful, as the much less expensive R version is designed for specific systems.

We did not test for booting off of NVMe drives. At this point, in servers and workstations where there is generally more space, booting off of a SATA SSD or USB drive (for embedded systems) is much easier. Windows has a built-in driver, Linux has supported NVMe out of the box for some time, and even FreeBSD now supports NVMe. That makes NVMe extremely easy to work with versus custom PCIe storage such as Fusion-io. Our advice: boot off of SATA/ USB then add NVMe as data/ application drives as necessary.
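Once a drive is cabled up, a quick way to confirm that a Linux host actually sees it is to look at the NVMe controllers the kernel exposes under sysfs. The sketch below assumes a reasonably recent Linux kernel that publishes `model`, `serial`, and `firmware_rev` attributes under `/sys/class/nvme`; older kernels may lack some of them, in which case the value is reported as `None`. The function name is ours, not part of any standard tool.

```python
from pathlib import Path


def list_nvme_controllers(sysfs_root="/sys/class/nvme"):
    """Return one dict per NVMe controller found under the given sysfs directory.

    Returns an empty list when the directory does not exist, for example on
    a non-Linux host or a system with no NVMe driver loaded.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    controllers = []
    for ctrl in sorted(root.iterdir()):
        info = {"name": ctrl.name}
        for attr in ("model", "serial", "firmware_rev"):
            attr_file = ctrl / attr
            # Attribute files hold short text values padded with whitespace.
            info[attr] = attr_file.read_text().strip() if attr_file.is_file() else None
        controllers.append(info)
    return controllers


for controller in list_nvme_controllers():
    print(controller)
```

If a two-drive card only shows one controller here, that points to the slot limitation described earlier rather than to a driver problem.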

You people are rockstars. Nobody else has done anything like it. Great job team STH. I am going to get one of those AOC’s.

I’d seen these products before. Intel makes a 1U kit that’s cheaper but even more system specific.

I could see using those AOC (not r) + the Intel cage as a great solution. If you got two of them you could use those with the intel cage and make something really nice without paying up the *** for U.2.

Patrick – best investigative storage tech journalism since the Samsung TLC SSD fiasco. +1

Thx. I’ve wanted this so we don’t have to pay as much in the studio for “4k video” storage vendors. if someone made a generic version of the intel we’d buy it.

Nice work Patrick! Thanks for blazing the NVMe trail for us.

Patrick: I have installed the kit in the 2U 2600GZ PCI Riser 2 but I can only access 1 NVMe drive. I assume there is a BIOS setting needed to force the PCI slot to x4x4x4x4, could this be the case? If so, do you remember which setting it is?

Thx!

Did I understand correctly that you tested the A2U44X25NVMEDK on the Supermicro X10SDV-TLN4F platform using the Supermicro AOC-SLG3-2E4R card?

And you tested this kit without having the right cables?

And why did you test the AUP8X25S3NVDK without the included board?

SUPER cool. Is there an x16 solution for non-SuperMicro Motherboards..? Perhaps the:

AOC-SLG3-4E4T

AOC-SLG3-4E4R ..?

Thanks!