3rd Generation Intel Xeon Scalable “Cooper Lake” Processor Overview

The new 3rd Generation Intel Xeon Scalable platforms bring a number of key improvements, but several areas are unchanged from the previous-generation 2nd Gen Intel Xeon Scalable processors.

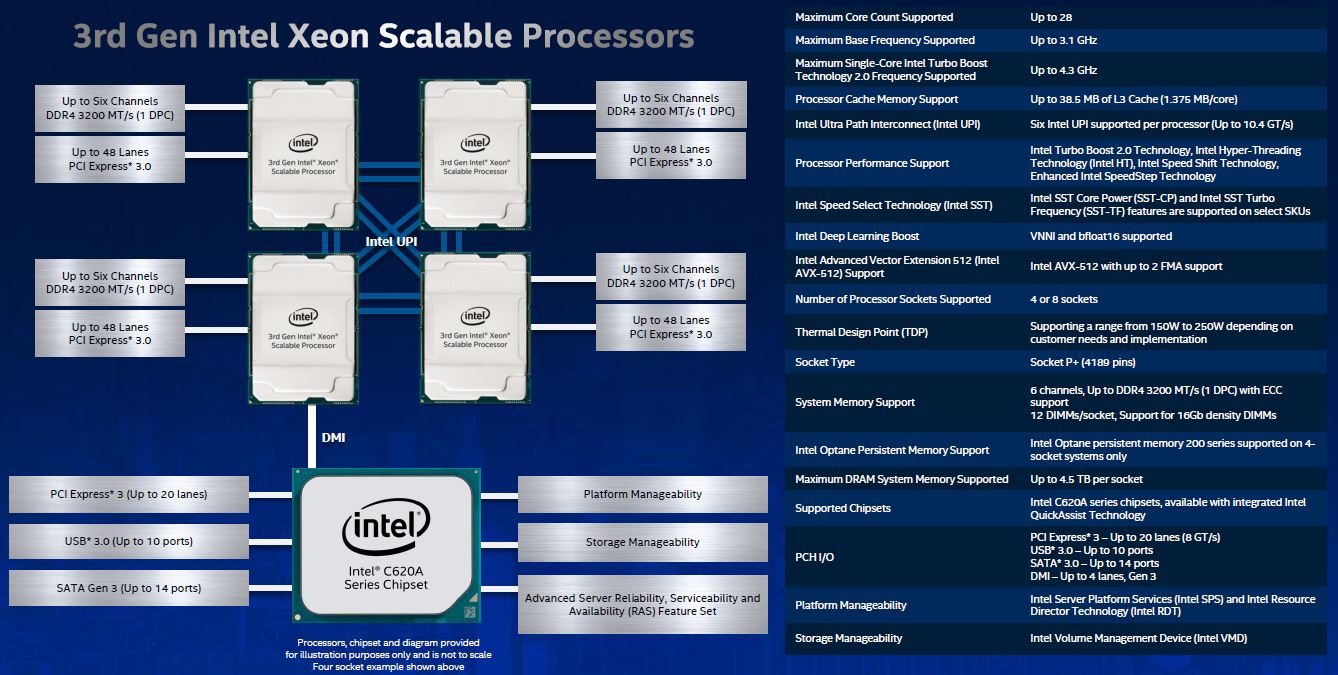

As a quick note, the Purley platform (Skylake/Cascade Lake) socket is Socket P, or LGA3647. Xeon Phi x200 used a socket with the same pin count, but ultimately not the same socket. The new Cooper Lake / Cedar Island platform uses Socket P+, a 4189-pin socket that handles the extra I/O from the processor.

Since we are going to explain Cooper Lake in the context of the Cascade Lake (and Cascade Lake Refresh) Xeons, let us start with the cores.

3rd Generation Xeon Scalable Core Update

With Cooper Lake, we get a maximum of 28 cores and the same 1.375MB of L3 cache per core that we saw on the 2017 Skylake and 2019/2020 Cascade Lake Xeons. Perhaps the biggest jump is the move to a 250W maximum TDP. That headroom lets Intel add new features and also sustain higher frequencies, so long as the cooling solution is capable. A 45W TDP jump is massive, and it means these chips should lead in per-core performance at high core counts until Ice Lake Xeons, with updated cores and even higher TDPs, launch.
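To put the numbers above side by side, here is a quick worked calculation. The core count, per-core L3, 250W TDP, and 45W jump come from the text; the 205W prior-generation maximum is derived from them rather than quoted from Intel, and the constant and function names are hypothetical.

```python
# Figures from the text: 28 cores max, 1.375MB L3 per core,
# a 250W maximum TDP, and a 45W jump over the prior generation.
MAX_CORES = 28
L3_PER_CORE_MB = 1.375
COOPER_LAKE_MAX_TDP_W = 250
TDP_JUMP_W = 45


def total_l3_mb(cores: int, per_core_mb: float) -> float:
    """Total L3 on a full die, assuming every core contributes one slice."""
    return cores * per_core_mb


def tdp_increase_pct(old_w: float, new_w: float) -> float:
    """Relative TDP increase, in percent."""
    return (new_w - old_w) / old_w * 100


# Derived, not quoted: 250W - 45W.
previous_max_tdp_w = COOPER_LAKE_MAX_TDP_W - TDP_JUMP_W

print(total_l3_mb(MAX_CORES, L3_PER_CORE_MB))  # 38.5
print(previous_max_tdp_w)  # 205
print(round(tdp_increase_pct(previous_max_tdp_w, COOPER_LAKE_MAX_TDP_W), 1))  # 22.0
```

In other words, a full 28-core die carries 38.5MB of L3, and the TDP ceiling rises by roughly 22%.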

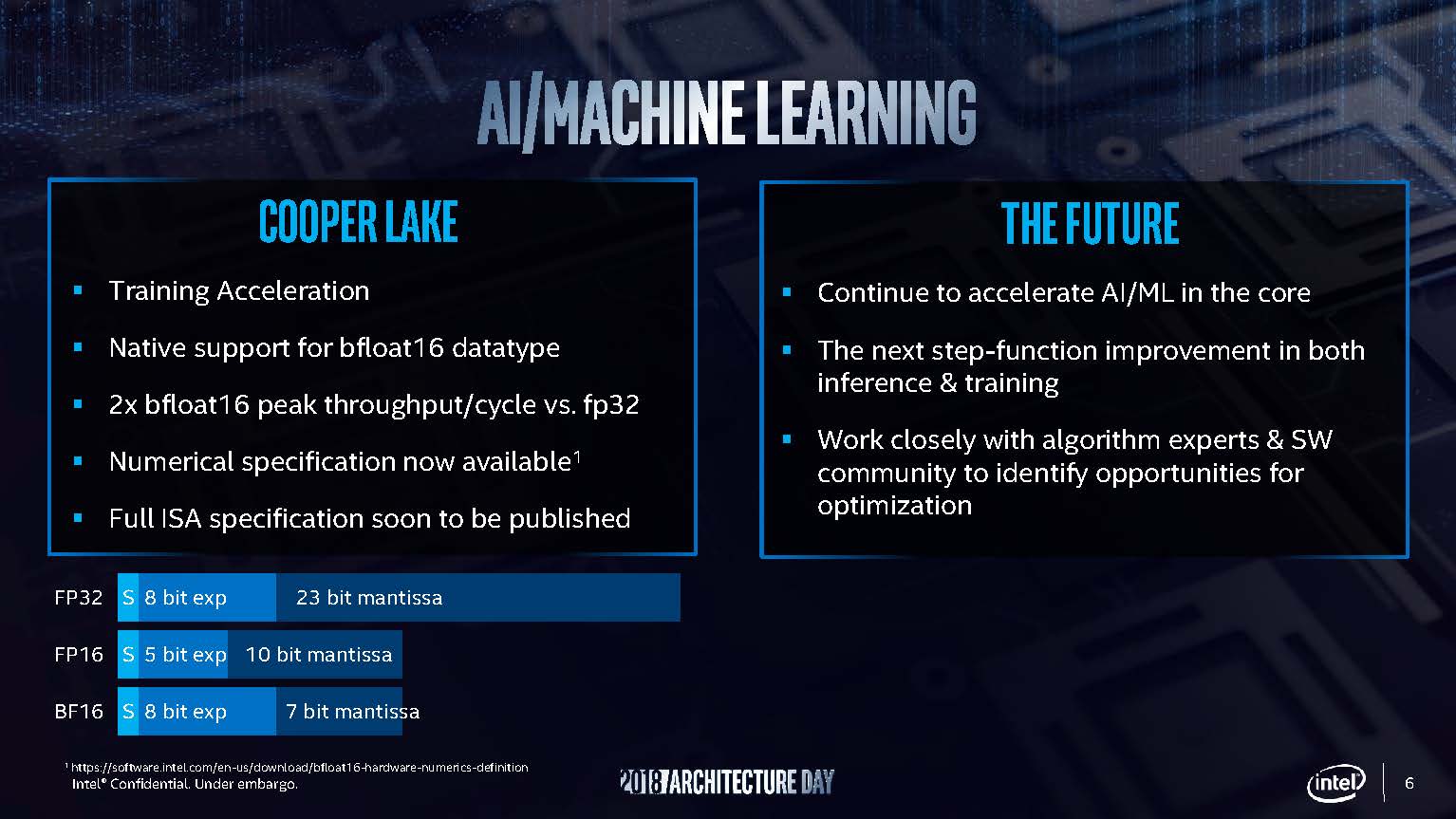

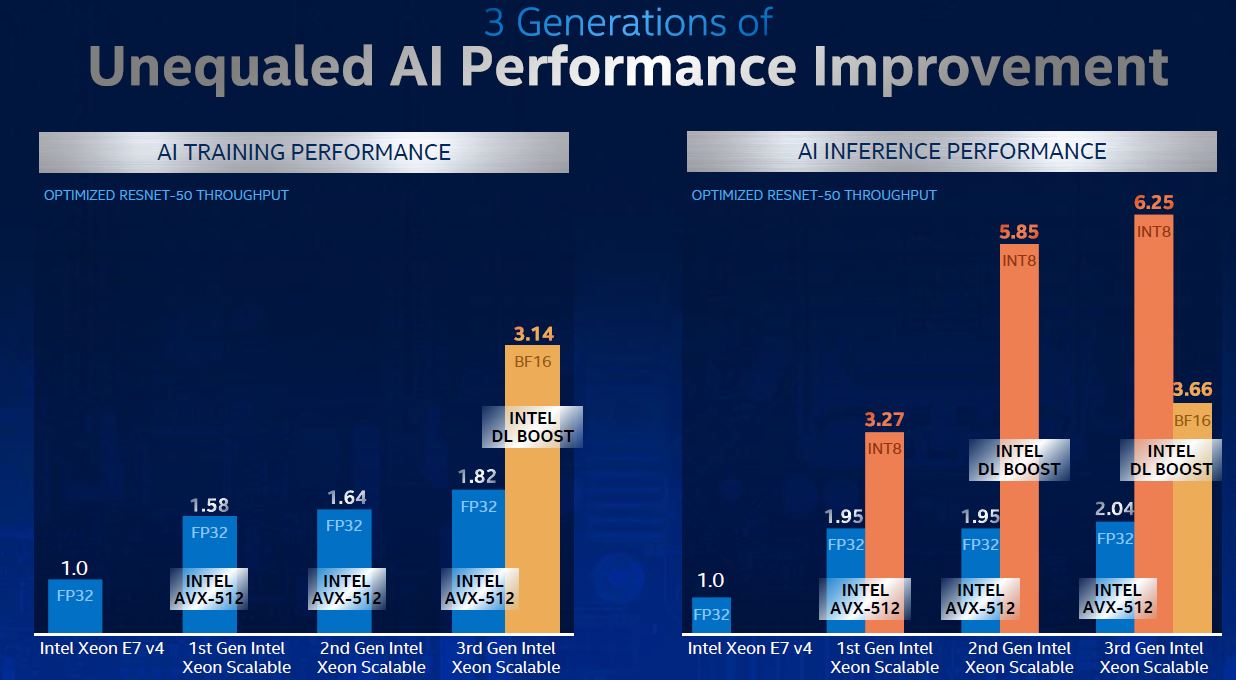

As we understand it, the cores themselves are fairly similar to the Cascade Lake cores, with a few differences. Perhaps the biggest is support for bfloat16.

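The key with bfloat16 is that it keeps the same 8-bit exponent, and therefore the same dynamic range, as FP32 while using half the bits (16 instead of 32). It gives up mantissa precision, but retains enough for deep learning training, which increases effective floating-point performance. Since each value is half the size, more data also fits in caches and memory, which further helps performance.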
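A minimal sketch of what "FP32 range in half the bits" means in practice: bfloat16 is simply the upper 16 bits of an FP32 value. This Python model assumes round-to-nearest-even when dropping the low 16 bits (a common convention for software conversion); the function names are hypothetical, and this is an illustration of the format, not Intel's hardware implementation.

```python
import struct
from typing import Iterable


def fp32_to_bf16_bits(x: float) -> int:
    """Round x (as FP32) to bfloat16 with round-to-nearest-even; return the 16-bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        # NaN: truncate and set a quiet-NaN bit so it cannot collapse into infinity.
        return ((bits >> 16) | 0x0040) & 0xFFFF
    lsb = (bits >> 16) & 1  # ties round toward an even bfloat16 mantissa
    return ((bits + 0x7FFF + lsb) >> 16) & 0xFFFF


def bf16_bits_to_fp32(b: int) -> float:
    """Widen a bfloat16 pattern back to FP32; the low 16 mantissa bits become zero."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


def pack_bf16(values: Iterable[float]) -> bytes:
    """Store values as little-endian bfloat16: 2 bytes each versus 4 for FP32."""
    halves = [fp32_to_bf16_bits(v) for v in values]
    return struct.pack(f"<{len(halves)}H", *halves)


def fits_in_fp16(x: float) -> bool:
    """IEEE half precision has only a 5-bit exponent and overflows above ~65504."""
    try:
        struct.pack("<e", x)
        return True
    except OverflowError:
        return False
```

Round-tripping 3.14159265 yields 3.140625, which shows the lost mantissa precision. By contrast, 1e38 survives with well under 0.4% error even though it overflows IEEE FP16. Three values pack into 6 bytes instead of the 12 that FP32 would need.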

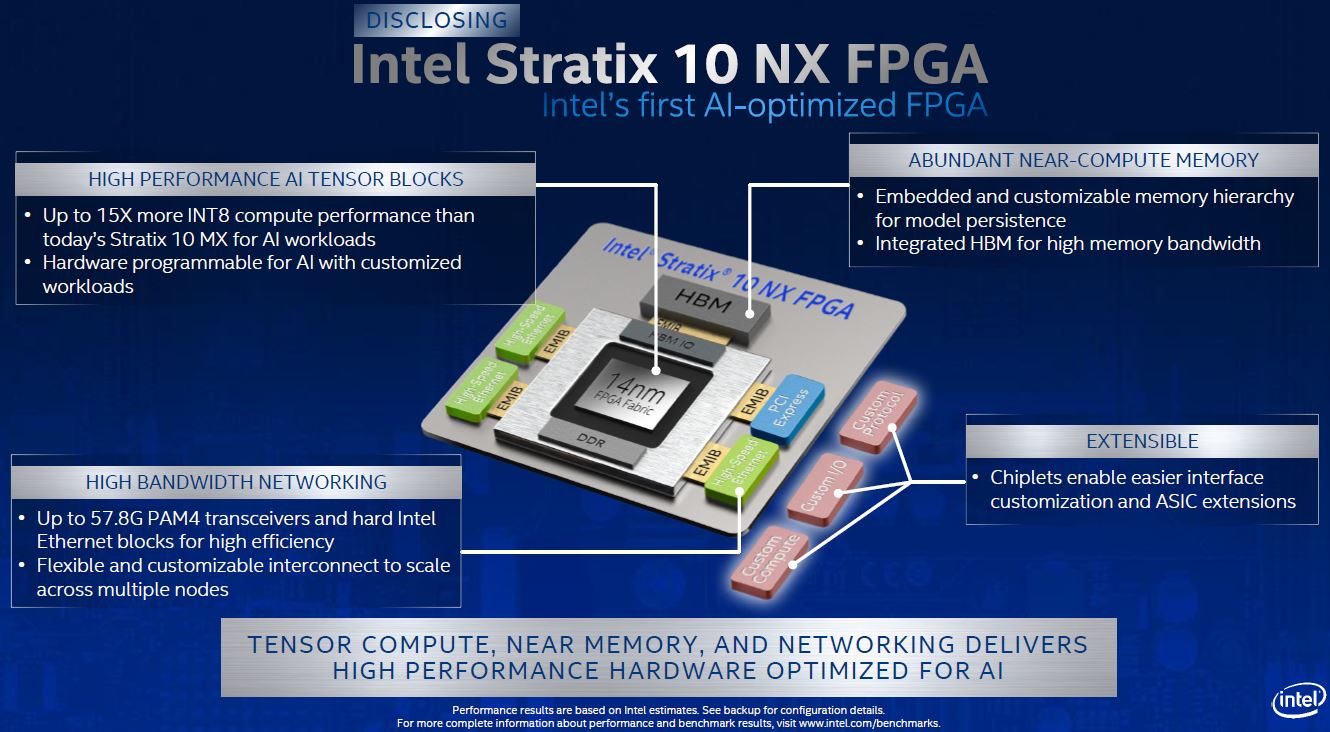

While Intel has a growing portfolio of other AI technologies, including Intel Stratix 10 and future Agilex next-generation FPGAs, GPUs, Habana Labs chips, Movidius, and others, the idea here is to enable deep learning training on unused CPU cycles that customers already have in their environments. Intel argues that it is less expensive to use CPUs you already own than to buy GPUs specifically for deep learning. One can also benefit from CPU features such as large memory footprints. Of course, during the 3rd Gen Xeon Scalable briefing Intel also announced a new Stratix 10 NX FPGA (note: this is not the 10nm Agilex), reinforcing that it is pursuing a portfolio approach.

With Cooper Lake, we still get VNNI support, Intel's cross-segment inference acceleration, as well as AVX-512 with dual-port FMA.
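VNNI's main int8 inference instruction, VPDPBUSD, multiplies four unsigned 8-bit values by four signed 8-bit values and adds the four products into a 32-bit accumulator in one step. The Python model below is a minimal sketch of that arithmetic for a single 32-bit lane, assuming the non-saturating form (VPDPBUSDS is the saturating variant). The helper `int8_dot` that chains lanes into a dot product is hypothetical. It illustrates the math only; real code reaches the instruction through compiler intrinsics or optimized libraries.

```python
from typing import Sequence


def _wrap_int32(x: int) -> int:
    """Wrap to signed 32-bit, modeling a non-saturating accumulator."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x >= (1 << 31) else x


def vpdpbusd_lane(acc: int, a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """One 32-bit lane: sum of 4 (unsigned byte x signed byte) products, added to acc."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane consumes exactly 4 bytes per operand")
    if not all(0 <= a <= 255 for a in a_u8):
        raise ValueError("a_u8 must hold unsigned 8-bit values")
    if not all(-128 <= b <= 127 for b in b_s8):
        raise ValueError("b_s8 must hold signed 8-bit values")
    return _wrap_int32(acc + sum(a * b for a, b in zip(a_u8, b_s8)))


def int8_dot(a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """Dot product of two byte vectors, consuming 4 elements per fused step."""
    if len(a_u8) != len(b_s8) or len(a_u8) % 4:
        raise ValueError("lengths must match and be a multiple of 4")
    acc = 0
    for i in range(0, len(a_u8), 4):
        acc = vpdpbusd_lane(acc, a_u8[i:i + 4], b_s8[i:i + 4])
    return acc
```

For example, `int8_dot([1, 2, 3, 4], [5, 6, 7, 8])` gives 70. Without VNNI, the same lane needs a multi-instruction AVX-512 sequence with a 16-bit intermediate step.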

Intel did not say this in the briefing, but a fairly valid way to think about Cooper Lake is as a Cascade Lake chip with a higher TDP, some memory controller tweaks, bfloat16 support, and more UPI links. There are a few other differences, but realistically, the delta between the 2nd and 3rd generations of Xeon Scalable is not as big as what we will start to see later this year with Ice Lake Xeons.

3rd Generation Xeon Scalable SKUs

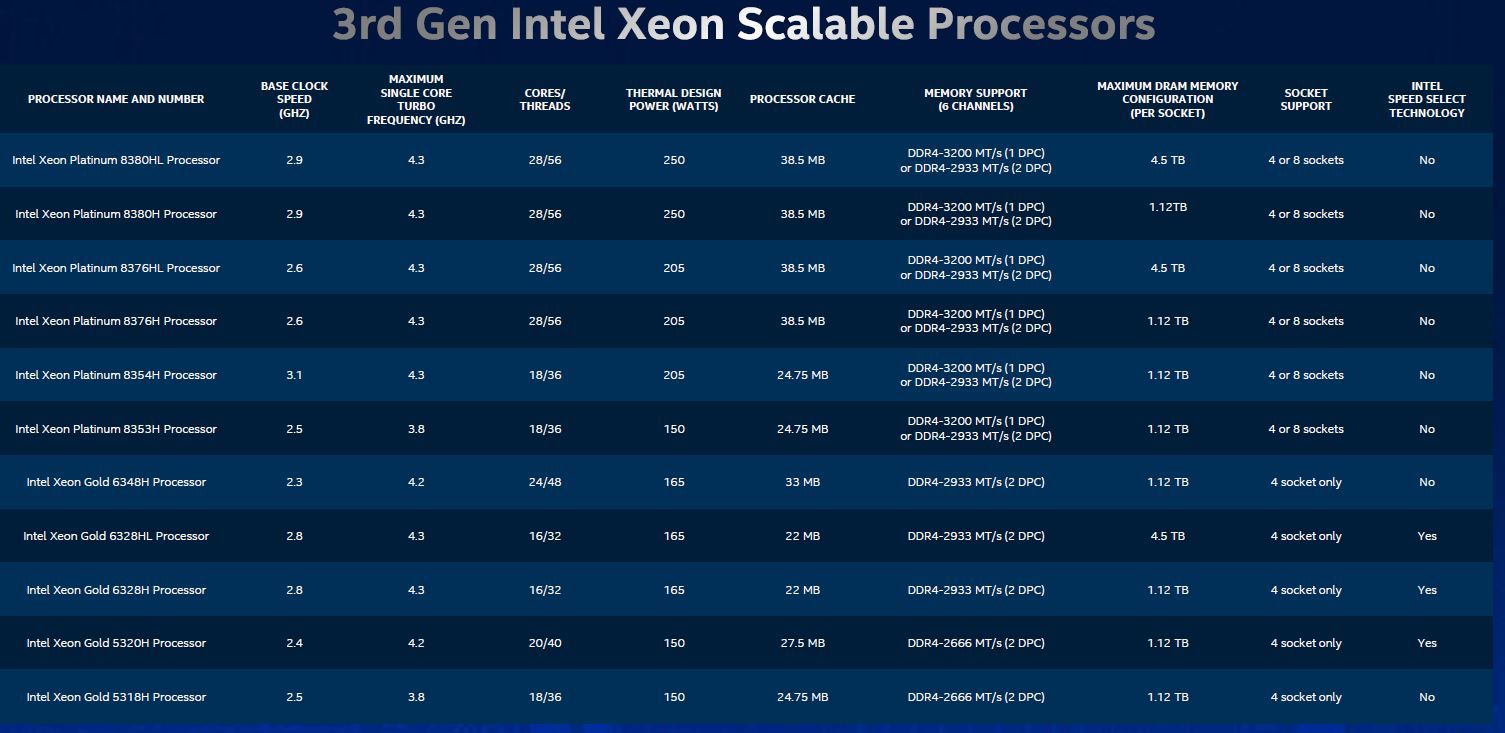

We are going to have our formal SKU and value analysis, but here is Intel’s chart on the new SKUs:

There are 11 SKUs being launched today. We expect Intel to plug some of the holes in this lineup with additional SKUs over the next few months.

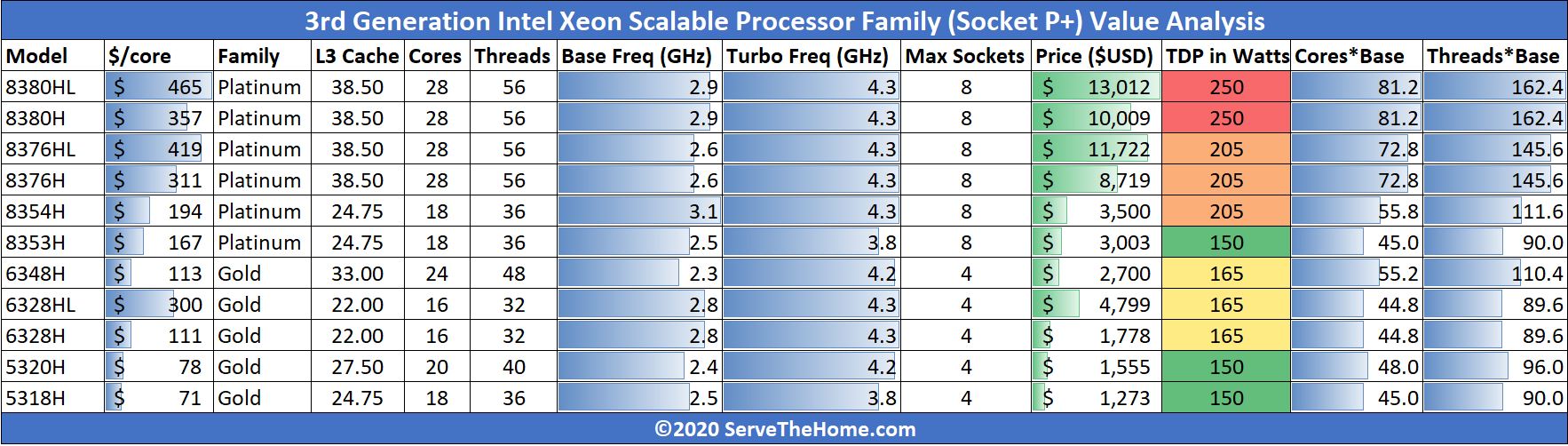

Here is what that looks like with some of the basic metrics:

Overall, these chips are in line with the 2019 generation of Cascade Lake parts. We do not see severely out-of-step pricing, since the market would likely not bear something like a Xeon Gold 5318H at $10,000 with prices climbing from there up the stack. Competition is a good thing.

More on this in a separate piece.

Next, let us start looking at the platform components.

Why doesn’t anyone else have info on the PMem 200 in Memory Mode that you’re talking about on page 3? STH is the only place reporting that.

Fred, we confirmed this with Intel before the piece went live. To be fair, it was very hard to figure out from the materials Intel released.

This is the most in-depth coverage of Cooper Lake I’ve seen. There’s crazy detail here. Excellent work, Patrick and STH.

Does anyone know what “MOQ” refers to in the Optane specs? The values (4 and 50) align with the existing Optane DIMMs, but I could never find what it referred to.

Thanks

“a stopgap step”

From the processor king that has been pumping $Bs Q after Q?

AMD for pumping out products, but Intel for pumping in $$$…

@binkyto… MGTFY.com. Oh, what help a Google search could be. MOQ typically refers to Minimum Order Quantity. However… if you had actually googled “Optane” + “MOQ” (without the quotes, of course), you’d have come to this link:

https://ark.intel.com/content/www/us/en/ark/products/190348/intel-optane-persistent-memory-128gb-module.html

wherein it says:

Intel® Optane™ Persistent Memory 128GB Module (1.0) 4 Pack
Ordering Code: NMA1XXD128GPSU4
Recommended Customer Price: $1499.00

Intel® Optane™ Persistent Memory 128GB Module (1.0) 50 Pack
Ordering Code: NMA1XXD128GPSUF
Recommended Customer Price: $1499.00

4 packs and 50 packs. They have different SKUs. You’re welcome.

Isn’t MOQ minimum order quantity BinkyTo?

Nice writeup STH.

On that Optane PMem 200 point: I’ve read AnandTech, Tom’s, and The Next Platform, and none of them mentioned it in their articles.

Fire.

Keep cutting through the marketing BS at these companies.

Can’t wait to see how it stacks up against the existing parts and Epyc 7xx2.

The use of place names to distinguish these products creates confusion. Reading articles about Intel CPUs requires one to have a decoder ring handy.

Intel should stop being obscurantist.

Is the TDP 15W or 18W? If you go to their site, it still mentions 18W.

Also, some of their datasheets still mention the specs for the 100 series, like here: https://www.tomshardware.com/news/intel-announces-xeon-scalable-cooper-lake-cpus-optane-persistent-memory-200-series

Will the rated 8.1GB/s read and 3.15GB/s write bandwidth improve with the Ice Lake platform? And why does the datasheet say it supports up to 2666MT/s?

Intel got hurt bad by their 10nm process. The delays that caused allowed AMD to leapfrog. The question now is who will be first to market with PCIe Gen 5. That will be an enormous advantage in the server space.

I love competition. I really do.

I’m going to have to dig into this further tomorrow, but what has really caught my eye this week is the report of working Sapphire Rapids chips in Intel’s labs. Sapphire Rapids is supposed to have both DDR5 and PCIe 5, and Intel is targeting a 2021 release. The reporting on AMD’s roadmap that I have seen has Genoa and the SP5 socket (with DDR5 and PCIe 5) coming out in 2022. I wonder how many months Intel-powered servers will have those features before AMD’s get them? DDR5 looks like a huge upgrade. I thought AMD’s technical dominance in servers would last a couple more years; I might have been wrong.

@Wayne Borean: From a business point of view, AMD has done nothing to really stress Intel’s profits/revenue over the last 3 years that Epyc has been on the market. Most of the market share that Epyc has gained over the last 3 years is minuscule when you look at the revenue/profit that AMD earns from Epyc.

If AMD cannot compete with Intel on a volume basis when Zen 3 comes out, Intel will continue to dominate in the only ONE true area that counts for any business: increasing revenue/profits over your competitors.

AMD has amazing performance compared to Intel, but at what cost??

Once Ice Lake server comes out in volume, then and only then will we see head-to-head competition, and based on the last 3 years, when AMD clearly had the performance lead, it does not look too good for AMD in the server space!!!