Micron 9400 32TB Performance by CPU Architecture

If you saw our recent More Cores More Better AMD Arm and Intel Server CPUs in 2022-2023 piece, or pieces like the Supermicro ARS-210ME-FNR Ampere Altra Max Arm Server Review and the Huawei HiSilicon Kunpeng 920 Arm Server piece, you may have seen that we have been expanding our testbeds to include more architectures. This is in addition to the Ampere Altra 80-core CPUs, which come from the same family used by Oracle Cloud, Microsoft Azure, and Google Cloud.

We also have several IBM Power9 LC921 servers in the lab. We were able to use their PCIe Gen4 slots (albeit not with hot-swap bays) to connect the drives. On a relative scale, Ampere Arm was easy, Huawei/HiSilicon was more challenging (our first tests were ~80% of AMD EPYC performance), but the IBM Power9 servers have taken a lot more time to get working for this.

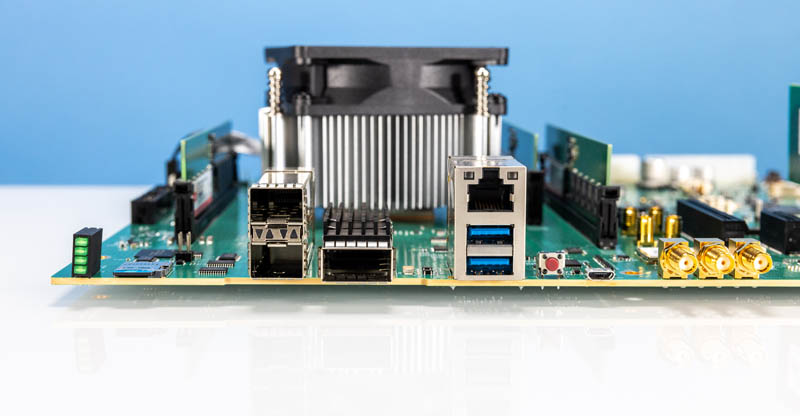

One of the other newcomers to this test is a new PCIe Gen5-capable platform, the Marvell Octeon 10 DPU. It is the newest Arm architecture we have tested, using Arm Neoverse N2 cores and a PCIe Gen5 root complex. This one was far from easy to get working given that we used a development platform, but with 24 Arm cores, PCIe Gen5, DDR5, and 200GbE networking built in, these are very capable DPUs.

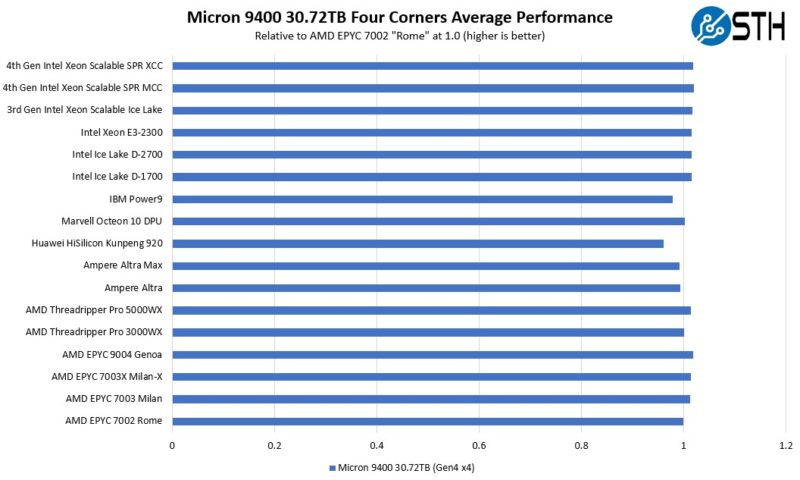

With that, we also had AMD EPYC 7002 “Rome,” EPYC 7003 “Milan,” EPYC 7003X “Milan-X,” 3rd Generation Intel Xeon Scalable (Ice Lake), the new Ice Lake D-1700 and D-2700 using the Supermicro platforms from our Welcome to the Intel Ice Lake D Era with the Xeon D-2700 and D-1700 series piece, and the Intel Xeon E-2300 series. We have added the AMD Threadripper Pro 3995WX and Threadripper Pro 5995WX to the mix, as those are effective replacements for high-end dual Xeon professional engineering and studio workstations. We also now have PCIe Gen5 platforms for the AMD EPYC 9004 “Genoa” and the 4th Gen Intel Xeon Scalable “Sapphire Rapids,” the latter in both its XCC and MCC versions. That gives us twelve PCIe Gen4-based CPU architectures and two or three (depending on your take on XCC/MCC impact) PCIe Gen5 architectures to test the drives with. Using the AMD EPYC 7002 “Rome” as the base case, we took our four corners numbers and averaged the percentage deltas from the Rome results.
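As a rough illustration of that averaging step, here is a minimal Python sketch. It assumes the four corners are sequential read, sequential write, random read, and random write results where higher is better; the metric names and the sample numbers are hypothetical placeholders, not our measured results.

```python
from statistics import mean

# Hypothetical metric names for the "four corners" (higher is better for all four)
FOUR_CORNERS = ("seq_read", "seq_write", "rand_read", "rand_write")


def avg_pct_delta(platform: dict[str, float], baseline: dict[str, float]) -> float:
    """Average of the per-metric percentage deltas versus the baseline platform."""
    deltas = [
        (platform[metric] - baseline[metric]) / baseline[metric] * 100.0
        for metric in FOUR_CORNERS
    ]
    return mean(deltas)


# Illustrative numbers only, normalized so the Rome baseline is 100 on every metric
rome = {"seq_read": 100.0, "seq_write": 100.0, "rand_read": 100.0, "rand_write": 100.0}
other = {"seq_read": 110.0, "seq_write": 100.0, "rand_read": 104.0, "rand_write": 98.0}

print(f"{avg_pct_delta(other, rome):+.1f}% vs. Rome")
```

Averaging percentage deltas weights each corner equally, so a platform that is 10% faster on sequential reads but 2% slower on random writes still nets out positive. If a latency metric were mixed in, its sign would need to be flipped, since lower latency is better.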

A few quick notes. First, the newer Marvell part performs better than the older Huawei HiSilicon Kunpeng 920, which makes a lot of sense. The IBM Power9 is a bit slower, but that is consistent with other drives we have tested. Another key takeaway is that the new AMD and Intel PCIe Gen5 platforms perform very well when running this drive at PCIe Gen4 speeds.

Another key point is that getting all of this data took servers from Supermicro, Gigabyte, Huawei, Inspur, IBM, Wiwynn, QCT, Lenovo, AIC, ASRock Rack, and ASUS. We did not use Dell or HPE systems because the RAID controllers in our test systems from those vendors change performance. That is an amazing number of vendors to use. It also takes over two weeks per drive to generate this data due to the run and drive-swap cycle times.

It was impressive that Micron performed well across such a wide variety of architectures. Some of those architectures we are not even sure Micron’s team has access to (yet).

Final Words

In some ways, this is a very intriguing drive. Among PCIe Gen4 NVMe SSDs, the Micron 9400 is perhaps the fastest drive we will see. That is an accomplishment. While more PCIe Gen5 servers and DPU models will be introduced in 2023, this year the market is going to straddle the PCIe Gen3, Gen4, and Gen5 generations. We have a new Intel Snow Ridge edge networking platform that is just hitting the market now but only has PCIe Gen3 and will have a 7+ year lifecycle. PCIe Gen4 is going to be with us not just in 2023 but for many years to come, and that is the market the Micron 9400 Pro line is focused on dominating.

Going beyond raw performance figures for a moment, the 30.72TB or “32TB-class” capacity point is simply awesome. A huge number of organizations can use these high-capacity drives in higher-capacity servers to achieve great consolidation. In our recent AMD EPYC Genoa and Intel Xeon Sapphire Rapids articles, we discussed how current server architectures can consolidate at a ratio of over 2:1 compared to 5-year-old systems. Consolidation is not just about additional per-socket memory bandwidth, cores, and raw CPU performance. It is also about capacity. Some organizations will buy at different points in the CPU SKU stack to potentially achieve 4:1 consolidation ratios over 2017-2020 era machines. If those systems were using 7.68TB drives, which would have been large at that time, the 30.72TB Micron 9400 can consolidate them on a 4:1 basis in terms of capacity, and sometimes in terms of performance as well. The same math works with the 4TB-class SSDs that were more common in that era and Micron’s 16TB-class 9400 drives.
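The capacity side of that consolidation math is simple division. This Python sketch uses integer gigabytes to avoid floating-point rounding surprises; the 24-bay legacy server is a hypothetical example, not a configuration from this review.

```python
def drives_needed(total_gb: int, drive_gb: int) -> int:
    """Smallest number of drive_gb drives that can hold total_gb (ceiling division)."""
    return -(-total_gb // drive_gb)


def capacity_ratio(new_drive_gb: int, old_drive_gb: int) -> float:
    """How many old drives' worth of capacity one new drive provides."""
    return new_drive_gb / old_drive_gb


# Hypothetical legacy server: 24 bays filled with 7.68TB drives
legacy_total_gb = 24 * 7_680

print(capacity_ratio(30_720, 7_680))           # 30.72TB vs. 7.68TB class -> 4.0
print(capacity_ratio(15_360, 3_840))           # 15.36TB vs. 3.84TB class -> 4.0
print(drives_needed(legacy_total_gb, 30_720))  # 30.72TB drives for the same data -> 6
```

Ceiling division matters once the totals do not divide evenly: a single byte over a drive's capacity still costs a whole extra drive.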

Having a faster drive is always nice, and there will always be a market for incrementally more IOPS or lower latency. Drives become transformational when they can drastically reduce the power and footprint of servers and storage. Put another way, filling the same footprint with new higher-capacity servers, storage, networking, accelerators, and memory means new capabilities. The Micron 9400 series would be interesting based on its performance alone. By increasing both performance and capacity, it starts to become transformational for rack architecture.

Is Dell using these drives? It’d be nice to use 4 drives instead of 8 or 16 to increase airflow. We’ve noticed on our R750 racks that if you use fewer, larger drives and give the server more breathing room, the servers run at lower power. That’s not all from the drives either.

I’m enjoying the format of STH’s SSD reviews. It’s refreshing to read the why, not just see the barrage of illegible charts that has filled the airwaves since AnandTech stopped doing reviews.

That multi-uarch testing is FIRE! I’m going to print that out and show our team, as we’re using many of those in our infra.

I enjoyed seeing the tests for the different platforms. Since the Power9 was released in 2017, it’s interesting to see how it holds up as a legacy system. My understanding is that IBM’s current Power10 offering targets workloads where large RAM and fast storage play a role. In particular, the S1022 scale-out systems might be a good point of comparison.

At the other end of the spectrum, I wonder which legacy hardware does not benefit significantly from the faster drive. For example, would a V100 GPU server with Xeon E5 v3 processors benefit at all from this drive?

Micron 9400 on fire!

Writing 30TB over PCIe 4.0 should take around 1h. So, a 7 DWPD drive in this class would have to be writing at full speed for ~7h per day to hit the limit. I’m sure workloads like this exist, but they’d have to be rare. You probably wouldn’t have time for many reads, so they’d be write-only, and you probably couldn’t have any sort of diurnal use pattern, or you’d be at 100% and backlogged for most of the day with terrible latency. So, it would have to be either a giant batch system with >7h/day of writes, or something with massive write performance but either no concern about latency or the ability to drop traffic when under load. Packet sniffing, maybe?
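The arithmetic in that comment can be checked with a short Python sketch. It assumes the drive absorbs writes at the raw PCIe 4.0 x4 link rate (16 GT/s per lane with 128b/130b encoding) and ignores packet overhead and the drive's own write limits, so real drives will be somewhat slower; the 7 DWPD rating is the comment's hypothetical, not a Micron spec.

```python
def pcie4_x4_bytes_per_s() -> float:
    """Raw PCIe 4.0 x4 data rate: 16 GT/s per lane, 128b/130b encoding, no packet overhead."""
    lanes = 4
    transfers_per_s = 16e9  # 16 GT/s per lane, one bit per transfer
    encoding = 128 / 130    # 128b/130b line-code efficiency
    return lanes * transfers_per_s * encoding / 8  # bits -> bytes


def fill_hours(capacity_bytes: float, bytes_per_s: float) -> float:
    """Hours to write the full capacity once at a sustained rate."""
    return capacity_bytes / bytes_per_s / 3600


def write_hours_per_day(dwpd: float, capacity_bytes: float, bytes_per_s: float) -> float:
    """Hours per day of full-speed writing needed to consume a DWPD rating."""
    return dwpd * fill_hours(capacity_bytes, bytes_per_s)


capacity = 30.72e12  # 30.72TB drive, decimal bytes
link = pcie4_x4_bytes_per_s()
print(f"one full drive write: {fill_hours(capacity, link):.2f} h")
print(f"7 DWPD needs: {write_hours_per_day(7, capacity, link):.2f} h/day")
```

Under these assumptions one full-drive write takes about 1.08 hours, so 7 DWPD works out to roughly 7.6 hours per day of saturated writes, in line with the ~7h estimate.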

Given the low use numbers on most eBay SSDs that I’ve seen, you’d need a pretty special use case to need more than 1 DWPD with drives this big.

That’s 1 hr to fill 30TB using sequential writes, which are faster. DWPD specs are based on 4K random writes since those are what kill drives. If the workload is all sequential, SSDs have much higher endurance. So the 1 hr number used is too conservative.

How does one practically deploy these 30TB drives in a server with some level of redundancy? RAID 1/5/10? VROC?

@Scott: “You probably wouldn’t have time for many reads”

I am not sure what you mean. PCIe is full duplex, and you can transfer data at max speed in both directions simultaneously, as long as the device can sustain it. I would assume reading is a much cheaper operation than writing, so in a device with many flash chips (like this one) that provides enough parallelism, you should be able to do plenty of reads even during write-heavy workloads.

@Flav: you would most likely deploy these drives using a copy-on-write filesystem (ZFS), by storing multiple copies with an object file system, or simply as ephemeral storage. At this capacity, RAID doesn’t always make sense anymore, as rebuild times become prohibitively long.
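To see why rebuild time grows with capacity, here is a rough estimate in Python. It assumes the rebuild rewrites the entire drive at a constant rate; the rates below are hypothetical, and real arrays often throttle rebuilds to protect foreground I/O, which makes them slower still.

```python
def rebuild_hours(capacity_tb: float, rebuild_mb_per_s: float) -> float:
    """Time to rewrite a full drive at a constant rebuild rate (decimal TB and MB)."""
    capacity_bytes = capacity_tb * 1e12
    rate_bytes_per_s = rebuild_mb_per_s * 1e6
    return capacity_bytes / rate_bytes_per_s / 3600


# Hypothetical sustained rebuild rates in MB/s
for rate in (200, 500, 2000):
    print(f"30.72TB at {rate} MB/s: {rebuild_hours(30.72, rate):.1f} h")
```

Time scales linearly with capacity at a fixed rate, so a 30.72TB drive takes four times as long to rebuild as a 7.68TB drive rebuilding at the same speed, which lengthens the window in which a second failure can hurt the array.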

This is a superior read. TY STH

Great read. I’ve managed to get my hands on an 11TB Micron 9200 ECO, which I’m using in my desktop. While it’s an amazing drive, it gets really toasty and needs a fan blowing air directly at it. That makes sense, as the datasheet specifically requires continuous airflow along the drive.

What I’d like to say with all that is that it would be really useful if you could include power consumption figures for all of these tests, as idle power is generally not published on the datasheets. All we get is a TDP figure, which is about as accurate as the TDP published for a CPU. If you used a U.2 to SFF-8639 cable, you could measure the current along the 2-3 voltage rails, which I think would be fairly simple to do.
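Once per-rail current is measured, drive power is the sum of voltage times current across the rails. A minimal Python sketch, assuming you have already logged volts and amps for each rail; the readings below are hypothetical placeholders, not measurements of any Micron drive.

```python
from typing import Iterable, Tuple


def drive_power_watts(rails: Iterable[Tuple[float, float]]) -> float:
    """Total power in watts from (volts, amps) readings, one pair per supply rail."""
    return sum(volts * amps for volts, amps in rails)


# Hypothetical idle readings on a 12V rail and a 3.3V auxiliary rail
idle = [(12.0, 0.45), (3.3, 0.01)]
print(f"idle: {drive_power_watts(idle):.2f} W")
```

For a useful figure you would take readings under load as well as at idle and average them over time, since SSD power draw varies with the workload.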

Blah blah blah. How much are these mofos gonna cost? Looking forward to these drives being the norm for the consumer market at a low price, to be honest. Then we can have a good laugh at the price we used to have to pay for a 1TB SSD, like in the early ’90s with 750MB HDDs costing £150+.

In general, STH should focus more on power consumption!

They are astonishingly affordable for what they are. $4,400 on CDW.com. Going to be loading the boat on these things.

How are you connecting the 9400 U.3 drives? I just bought a 7450 U.3 and can’t get it to work with a PCIe 3.0 x4 U.2 card or with an M.2 to U.2 cable. Both work fine with an Intel 4610 or Micron 9300, but not with the 7450. I’m just looking for a PCIe card or an adapter to test the 7450.

Typo: Micron, not Mircon on two sites!!!

I had to fill out about 50 obnoxious CAPTCHAs to post my previous comment. I was just about to give up. You may be losing valuable messages!!!