We recently provided some figures from our initial benchmarking on a single Pliant/ SanDisk Lightning 206s 200GB SLC SAS SSD. We were using our “quick and dirty” suite of three benchmarks to test a few SAS SSDs we picked up for under $0.60/ GB. While these are certainly not the full suite, they do give at least a quick read on performance. Most SSD reviews are based on single drives, so we decided to try something slightly different: RAID 0 benchmarks with two drives. The big question is how these SanDisk Lightning 206s drives handle read and write scenarios where data is striped across both disks simultaneously. We are using the same test configuration and benchmarks as in our initial figures to provide some consistency here.

For those keeping track, here are the pieces thus far in the series:

- Pliant/ SanDisk Lightning 206s 200GB SLC SAS SSD benchmarks

- Seagate Pulsar.2 200GB MLC SAS SSD benchmarks

- Smart Storage System/ SanDisk Optimus 400GB MLC SAS SSD benchmarks

More in-depth coverage is still to come. Each of the articles listed above has additional detail about the drives being tested.

Test Configuration

Since we are going to assume the use of already released hardware, we are using a legacy system for testing across the test suite:

- Motherboard: Gigabyte GA-7PESH3

- Processors: Dual Intel Xeon E5-2690 (V2)

- SAS Controller: Onboard LSI SAS2008 in IR mode

- RAM: 64GB DDR3L-1600MHz ECC RDIMMs

- OS SSD: Kingston V300 240GB

We did not use our Intel Xeon E5-2600 V3 platforms because this series started prior to the embargo being lifted on that platform. The LSI controller onboard the Gigabyte motherboard is the same one found in countless platforms and cards such as the IBM ServeRAID M1015 and LSI 9211-8i. We decided to use this controller since it is an extremely popular first-generation 6Gbps SAS II controller. For those looking to build value SAS SSD enabled arrays, the LSI SAS2008 is likely going to be the go-to controller. A major point here is that we are using a SAS controller. This means that one cannot compare results directly to consumer-driven setups where a SATA SSD is connected to an Intel PCH port. There is a latency penalty for going over the PCIe bus to a controller and then out over SAS. It is also a reason NVMe is going to be a game changer in the enterprise storage space.

Controller-wise, the LSI SAS2008 has plenty of headroom for this two-drive configuration.
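To put a rough number on that headroom, here is a back-of-the-envelope sketch. It assumes each drive is attached over a single 6Gbps SAS lane and that the SAS2008 sits on a PCIe 2.0 x8 link; both links use 8b/10b encoding, so every payload byte costs 10 bits on the wire. Protocol overhead is ignored, so these are upper bounds rather than expected benchmark results, and the function name is ours for illustration only.

```python
# Rough bandwidth budget for two SAS SSDs behind an LSI SAS2008.
# Assumptions (not measured): one 6Gbps SAS lane per drive, PCIe 2.0 x8 to
# the host, 8b/10b encoding on both links, no protocol overhead.

def usable_mb_per_s(line_rate_gbps: float, lanes: int = 1) -> float:
    """Payload bandwidth in MB/s for an 8b/10b-encoded serial link."""
    bits_on_wire_per_byte = 10  # 8 data bits are sent as 10 line bits
    return line_rate_gbps * 1000 / bits_on_wire_per_byte * lanes

sas_per_drive = usable_mb_per_s(6.0)        # one 6Gbps SAS lane
drive_side = 2 * sas_per_drive              # two drives in the array
host_side = usable_mb_per_s(5.0, lanes=8)   # PCIe 2.0: 5 GT/s per lane, x8

print(f"Per drive link:   {sas_per_drive:.0f} MB/s")
print(f"Two drives total: {drive_side:.0f} MB/s")
print(f"PCIe 2.0 x8 host: {host_side:.0f} MB/s")
```

Under those assumptions the two drive links top out well below what the host link can carry, which is consistent with the controller not being the limiting factor for a two-drive array.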

SanDisk Lightning 206s (LB206s) 200GB SLC SAS SSD RAID 0 Quick Benchmarks

For our quick tests during this part of the series we will just provide the quick benchmarks with only a bit of commentary.

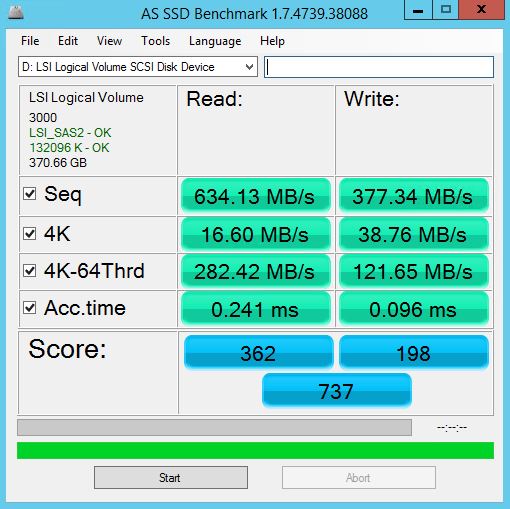

AS SSD Benchmark

AS SSD is a solid benchmark that does not write compressible data to drives. The result is perhaps one of the best workstation SSD benchmarks available today.

In AS SSD we see some interesting performance. Sequential reads and writes are certainly not scaling fully with the second drive, but there is substantially more performance. Sequential writes are only a bit more than 50% faster than we saw with the single SanDisk Lightning 206s 200GB drive. Interestingly enough, the 4K read and write speeds are much closer to what we saw on the single 400GB drive version.

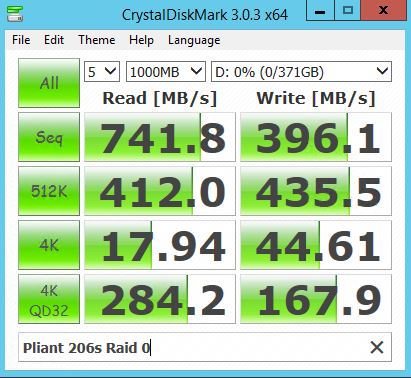

CrystalDiskMark

CrystalDiskMark is another benchmark which gives non-compressible read/write numbers. This is in contrast to the ATTO Benchmark used by LSI/ SandForce and its partners when they market a given solid state drive.

CrystalDiskMark scales much better. Perhaps the most interesting thing here is that sequential read and write speeds went up by almost a factor of four. With only two drives striped in RAID 0, the ideal gain is 2x, so getting more than a 2x speed improvement is a strange result. The numbers were intriguing enough that we double-checked that there were indeed only two LB206s 200GB SSDs attached and incorporated into the array.
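As a reminder of why 2x is the expected ceiling, here is a minimal sketch of how a RAID 0 array maps a logical request onto its member disks. The 64 KiB stripe size is an assumption for illustration (we did not record the array's actual stripe size here), and `split_request` is a hypothetical helper, not anything the controller exposes.

```python
# Sketch: how many bytes of a logical request each RAID 0 member serves.
# The 64 KiB stripe size is an illustrative assumption, not the tested value.

def split_request(offset: int, length: int,
                  stripe_size: int = 64 * 1024, n_disks: int = 2) -> list:
    """Return the byte count each disk handles for one logical request."""
    per_disk = [0] * n_disks
    pos, end = offset, offset + length
    while pos < end:
        stripe_index = pos // stripe_size
        disk = stripe_index % n_disks              # stripes rotate across disks
        chunk_end = min(end, (stripe_index + 1) * stripe_size)
        per_disk[disk] += chunk_end - pos
        pos = chunk_end
    return per_disk

MIB = 1024 * 1024
sequential = split_request(0, 1 * MIB)   # large sequential transfer
small = split_request(4096, 4096)        # single 4K request

print("1 MiB sequential:", sequential)
print("4 KiB request:   ", small)
```

A large sequential transfer is divided evenly, so each disk does half the work and the best case is twice the single-drive speed. A lone 4K request fits inside one stripe and touches only one disk, which fits with small-block results at low queue depth gaining far less from the second drive.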

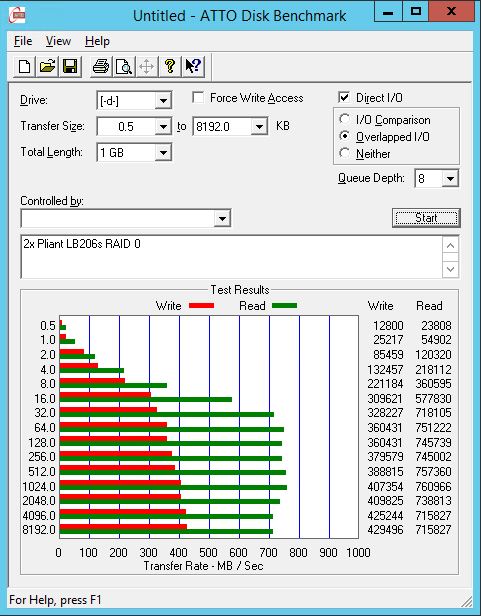

ATTO Benchmark

The value of the ATTO benchmark is really to show the best-case scenario. ATTO is known to write highly compressible data to drives, which inflates speeds of controllers that compress data like LSI/ SandForce does prior to writing on a given solid state drive.
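The gap between compressible and incompressible test data is easy to demonstrate. The sketch below uses Python's `zlib` purely as a stand-in for a compressing controller (it is not what any SSD controller actually runs), with a buffer of zeros as an example of highly compressible data and random bytes as an example of incompressible data.

```python
# Illustration: compressible vs. incompressible test patterns.
# zlib is only a stand-in here; real controllers use their own schemes.
import os
import zlib

def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower = more compressible)."""
    return len(zlib.compress(data)) / len(data)

SIZE = 1024 * 1024
zeros = bytes(SIZE)             # highly compressible pattern
random_data = os.urandom(SIZE)  # effectively incompressible pattern

print(f"zeros:  {compression_ratio(zeros):.4f}")
print(f"random: {compression_ratio(random_data):.4f}")
```

A controller that compresses before writing has far fewer bytes to commit to flash in the first case, which is why a benchmark built on compressible data shows a best-case figure for such drives.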

In terms of ATTO scaling we see approximately a one-third improvement in write speeds but almost a 2x improvement in read speeds. The performance gains from running these drives in RAID 0 can be seen very clearly.
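For readers who want to compare the three benchmarks on equal footing, a small sketch of the scaling arithmetic follows. The inputs are rounded ratios taken from the commentary above (single drive normalized to 1.0), not the measured values in the screenshots, and the `scaling` helper is our own illustrative name.

```python
# Sketch: speedup and scaling efficiency for a two-drive RAID 0 array.
# Inputs are rounded ratios from the commentary, not measured figures.
from typing import Tuple

def scaling(single: float, raid: float, n_drives: int = 2) -> Tuple[float, float]:
    """Return (speedup, efficiency); efficiency of 1.0 means ideal scaling."""
    speedup = raid / single
    efficiency = speedup / n_drives
    return speedup, efficiency

approx_relative = {
    "AS SSD sequential write": 1.5,      # "a bit more than 50% faster"
    "CrystalDiskMark sequential": 4.0,   # "almost a factor of four"
    "ATTO write": 4 / 3,                 # "approximately one-third"
    "ATTO read": 2.0,                    # "almost 2x"
}

for name, raid in approx_relative.items():
    speedup, efficiency = scaling(1.0, raid)
    print(f"{name}: {speedup:.2f}x speedup, {efficiency:.0%} of ideal")
```

Anything above 100% of ideal, as with the CrystalDiskMark sequential figure, is a flag that something other than striping is contributing to the result.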

Conclusion

What started out as a crazy hypothesis, that these drives were built to run in RAID arrays and would work better there, seems to be trending towards being true. It is certainly not what we were expecting, but perhaps it makes sense: these drives were made to be in arrays, not to run as single drives. More to come, but a great result nonetheless.