2nd Gen Intel Xeon Scalable Memory Speed Increases

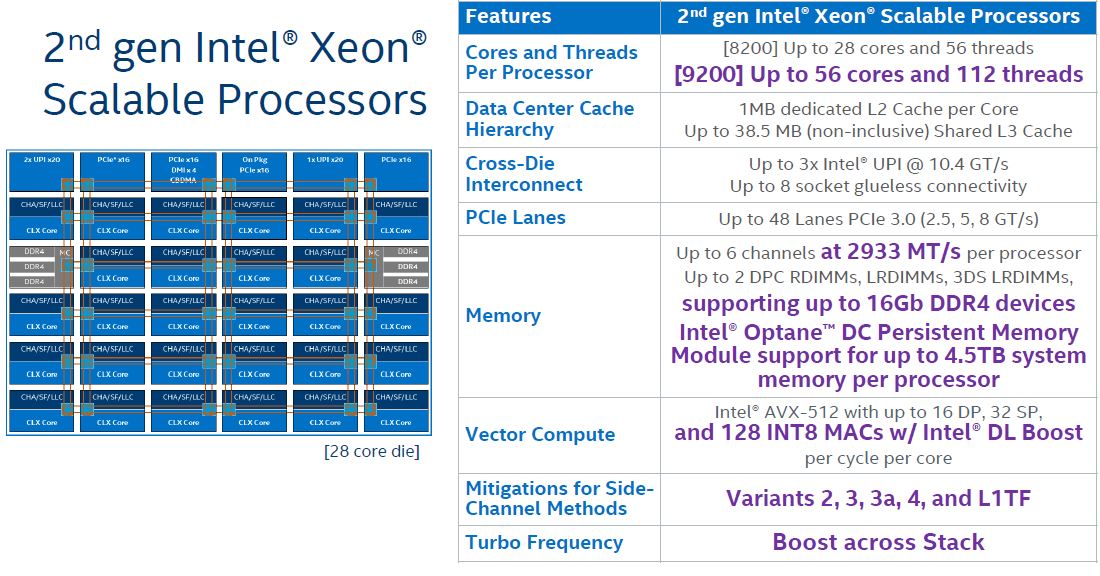

One of the key features in this new generation is support for up to DDR4-2933 DRAM along with larger memory sizes. This is a minor feature compared to the Intel Optane DC Persistent Memory Modules (DCPMM), yet it is still an important one.

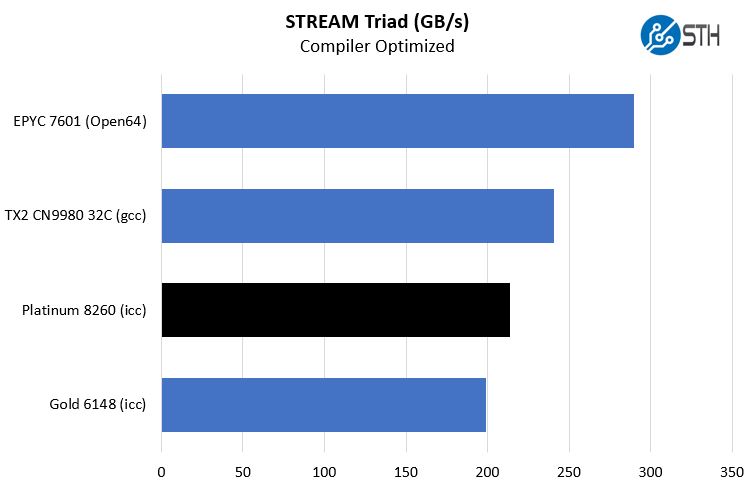

We tested this using the same setup we used during our Cavium ThunderX2 Review and Benchmarks piece. Here, one can see that we are indeed getting better memory bandwidth from the Platinum 8260 part.

We did not have a Gold 6248 CPU to test with, so we substituted the Platinum 8260 here. Still, we can see a fairly clear impact of higher memory speeds.

There is an enormous caveat here. DDR4-2933 is only available when Intel Optane DCPMM is not installed. The Intel Optane DCPMM was originally slated to launch with the 1st Generation Xeon Scalable CPUs in 2017 when DDR4-2666 was top of the line. As a result, the 1st generation Optane DCPMM runs at DDR4-2666 and thus, the entire memory subsystem gets limited to DDR4-2666 with the Intel Optane DCPMM modules installed.
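To put rough numbers on that caveat, here is a minimal sketch of the arithmetic. It assumes six DDR4 channels per socket and a 64-bit (8-byte) data path per channel, neither of which is stated above, and it computes theoretical peak figures only, not measured bandwidth. The function and constant names are illustrative.

```python
# Assumptions (not from the article): six DDR4 channels per socket,
# 8 bytes moved per transfer on each channel.
DDR4_CHANNELS_PER_SOCKET = 6
BYTES_PER_TRANSFER = 8

# First-generation Optane DCPMM runs at DDR4-2666, per the text above.
DCPMM_MAX_SPEED_MTS = 2666


def effective_memory_speed(dram_speed_mts: int, dcpmm_installed: bool) -> int:
    """Return the speed (MT/s) the whole memory subsystem runs at."""
    if dcpmm_installed:
        # DCPMM caps every channel at its own maximum speed.
        return min(dram_speed_mts, DCPMM_MAX_SPEED_MTS)
    return dram_speed_mts


def peak_bandwidth_gbs(speed_mts: int,
                       channels: int = DDR4_CHANNELS_PER_SOCKET) -> float:
    """Theoretical peak bandwidth per socket in GB/s (10^9 bytes/s)."""
    return speed_mts * BYTES_PER_TRANSFER * channels / 1000


for installed in (False, True):
    speed = effective_memory_speed(2933, installed)
    print(f"DCPMM installed={installed}: DDR4-{speed}, "
          f"{peak_bandwidth_gbs(speed):.1f} GB/s peak per socket")
```

Under these assumptions, dropping from DDR4-2933 to DDR4-2666 gives up roughly 9% of theoretical per-socket DRAM bandwidth.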

Intel Optane DC Persistent Memory

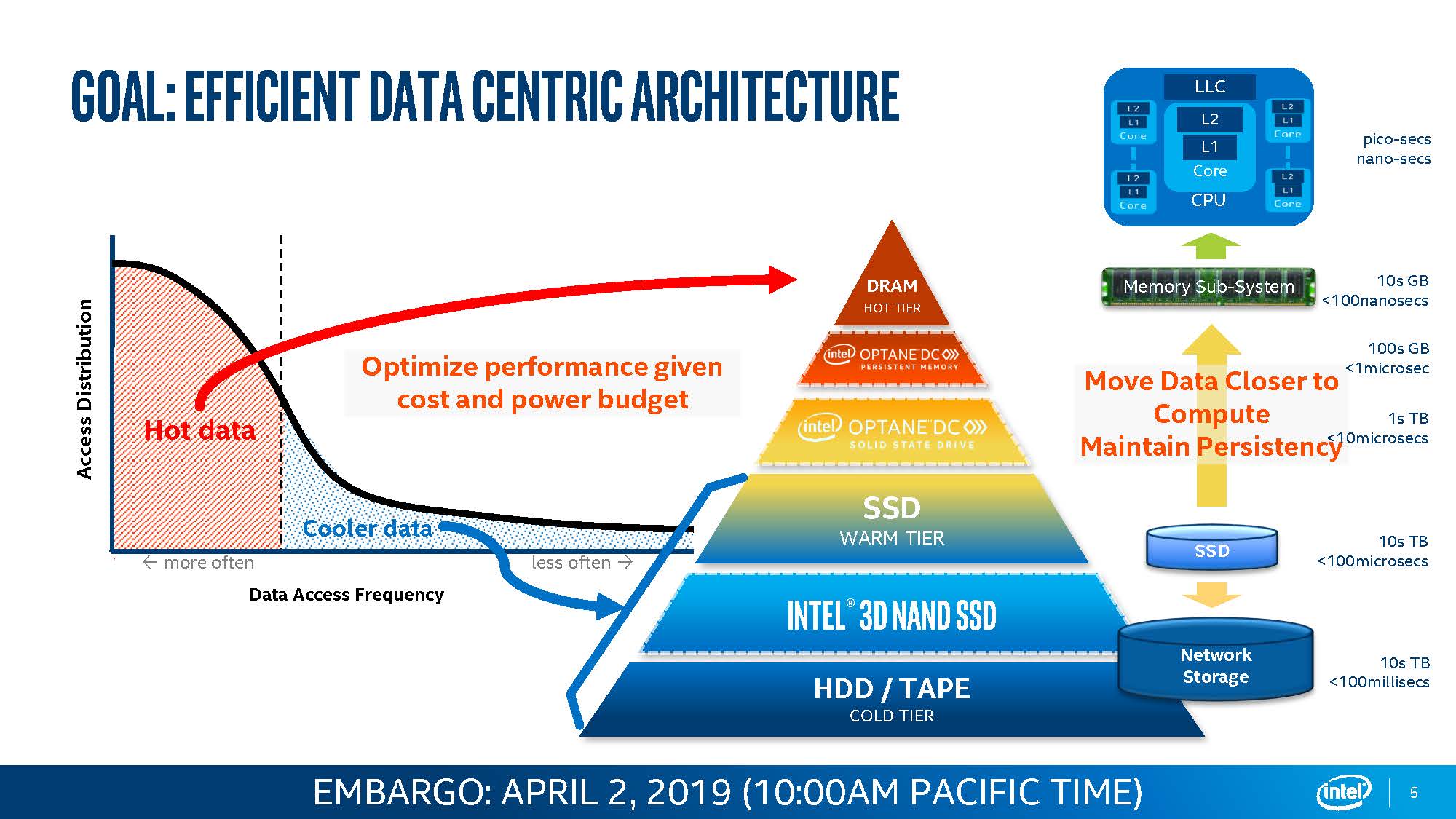

As far as marketing titles go, the Intel Optane DC Persistent Memory Module brand seems to follow a guideline that the more characters one can fit in a product name, the better. At almost 36 characters, the Intel Optane DCPMM, for short, lives up to expectations. Intel Optane DCPMM’s first official support comes in the form of the Cascade Lake memory controller, which has the necessary logic to handle both Optane DCPMM and DRAM. Intel’s goal is to create a new tier between its Optane NVMe SSDs and DRAM.

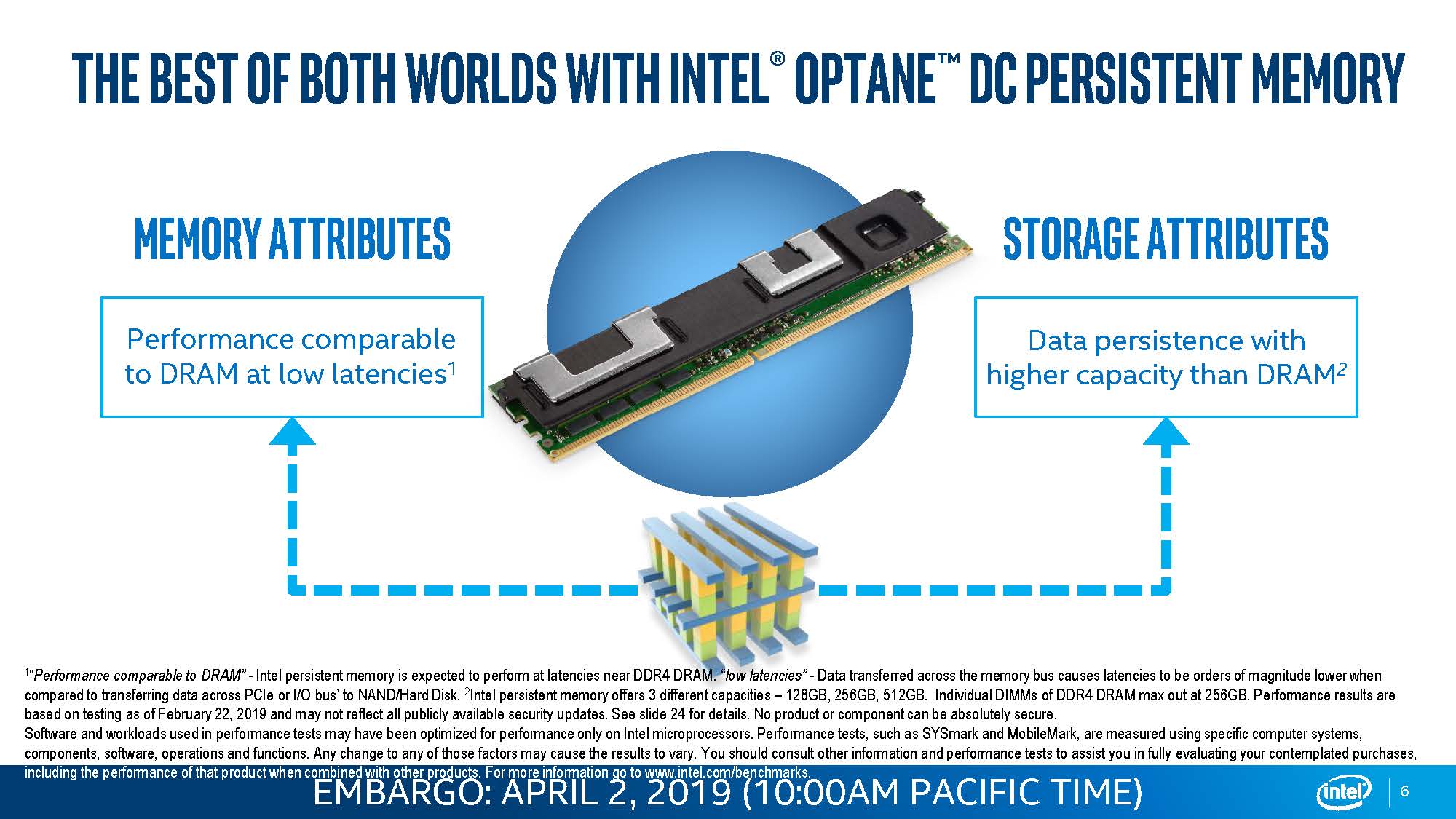

With Intel Optane DCPMM, one gets low latency access in the memory channels, bypassing PCIe, along with the higher capacities and persistence of traditional NVMe devices.

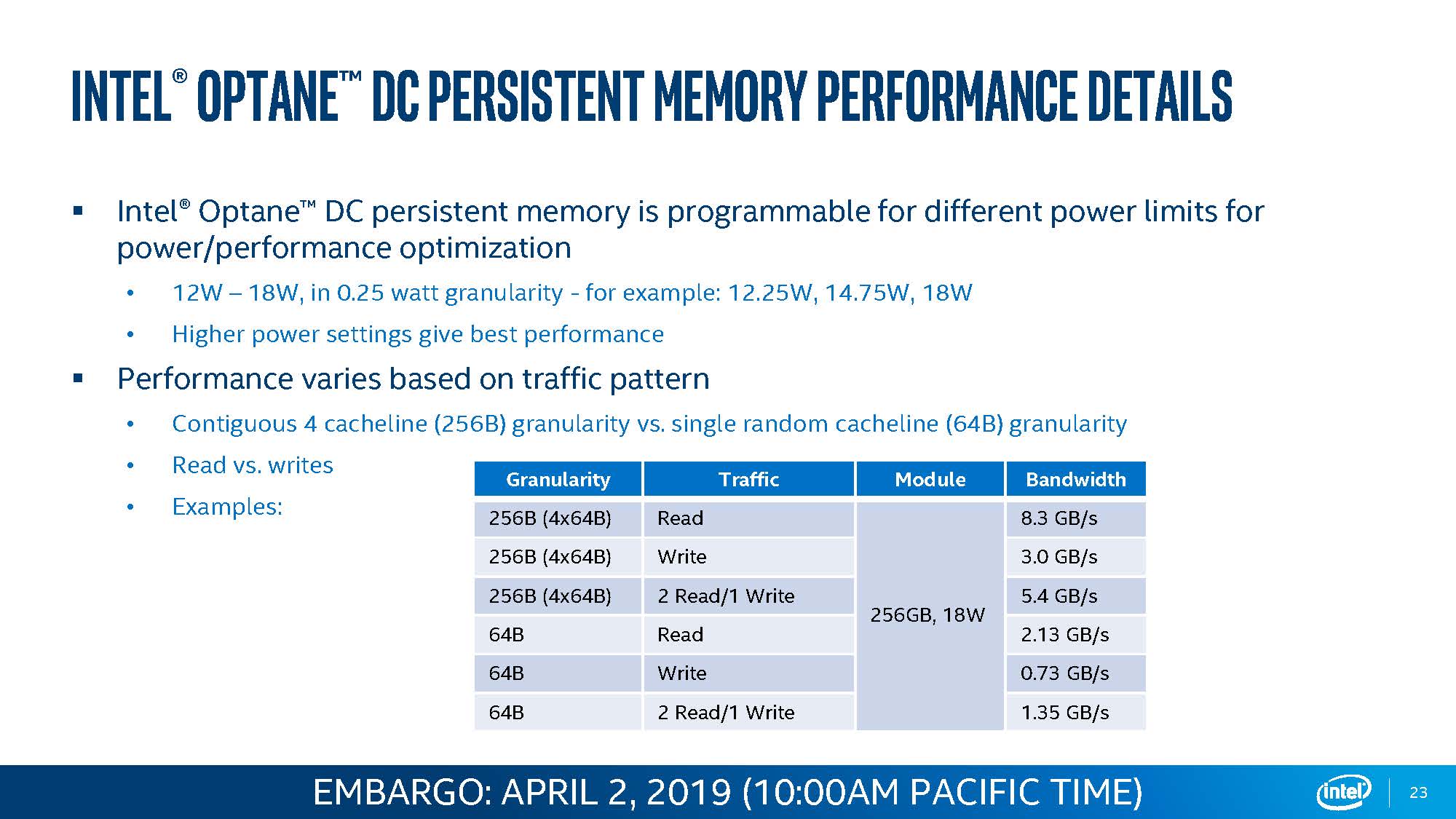

The Optane DCPMM modules come in 128GB, 256GB, and 512GB capacities and range from 15W to 18W in their operation.

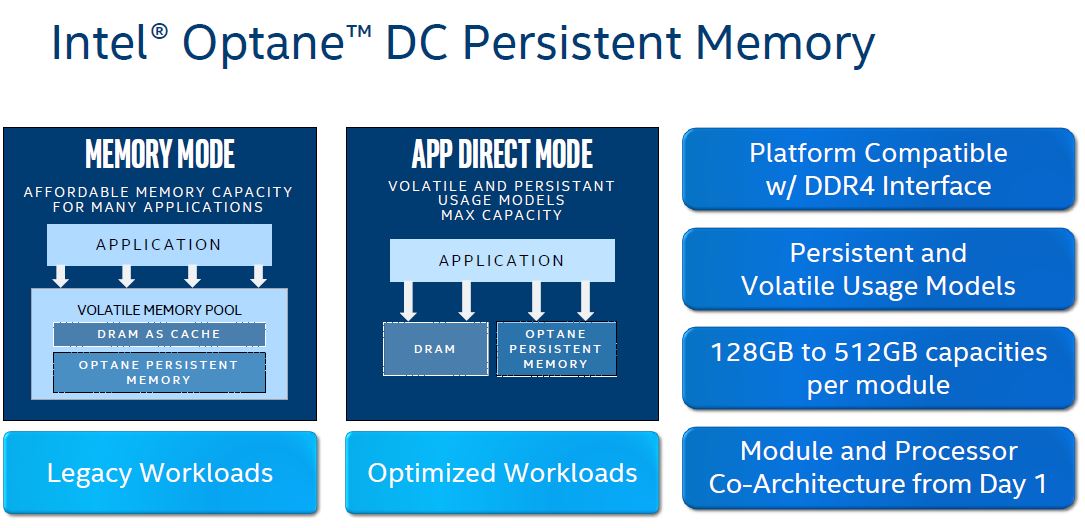

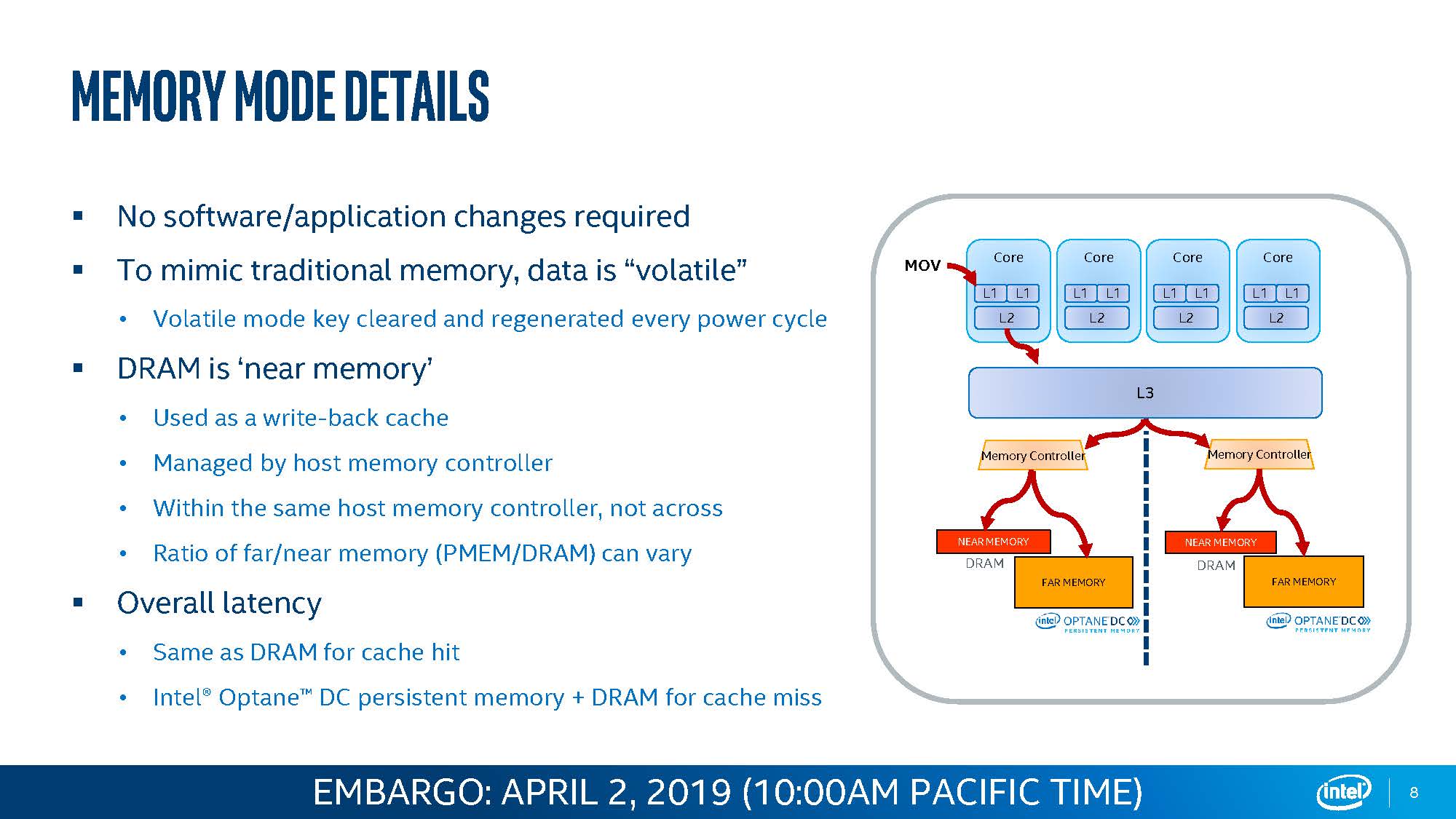

Intel is offering two modes. One is Memory Mode, where DRAM is used as a cache for the Optane DCPMMs. The system then has a larger memory footprint, but it does not have persistence in this mode.

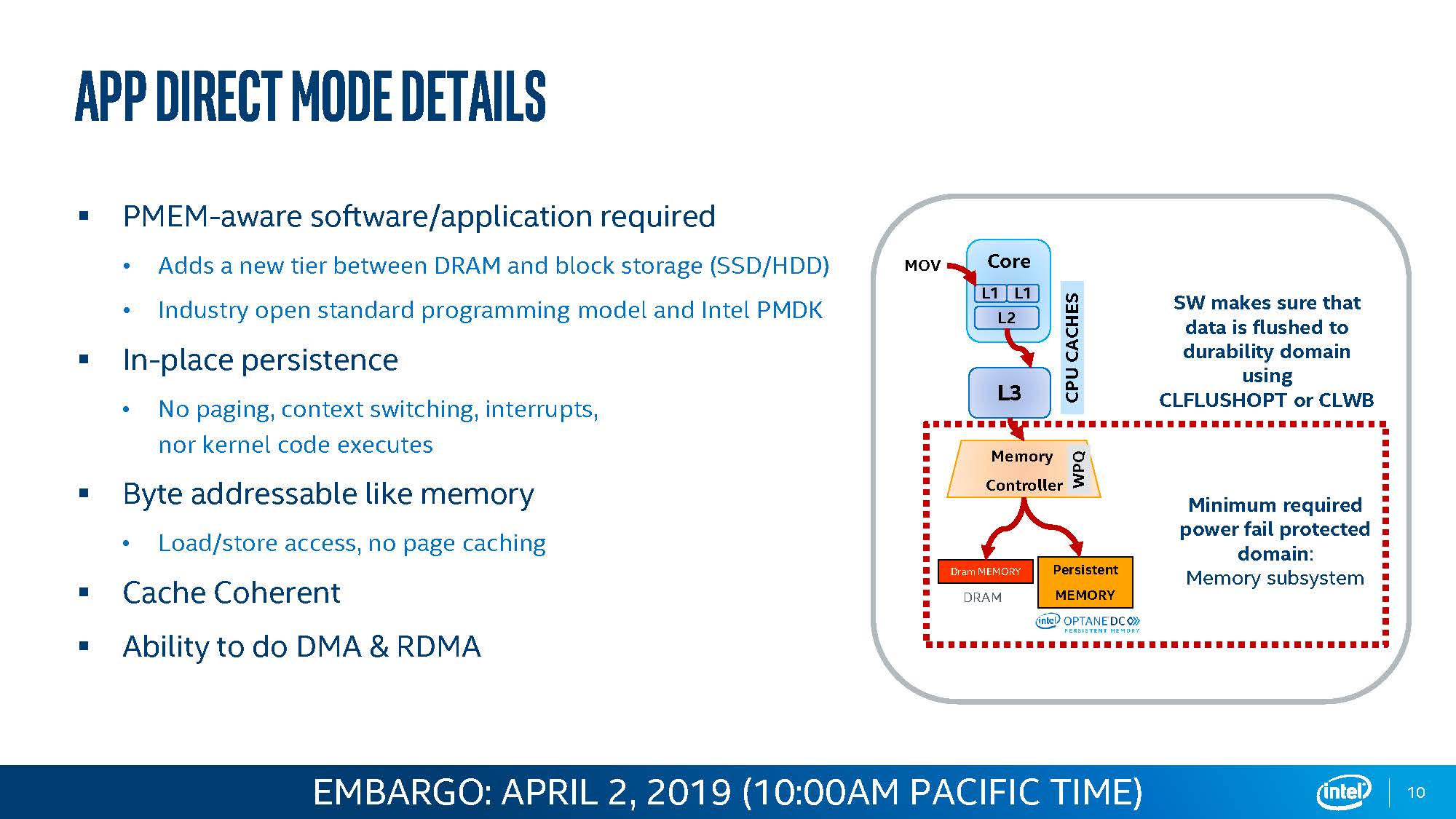

App Direct Mode is perhaps the more interesting as, with software support, the DCPMM can be exposed directly to the OS and used as persistent, low latency storage.
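As a rough illustration of what "software support" can look like, one common pattern is to memory-map a file that lives on a DAX-enabled filesystem backed by the modules, then read and write it with ordinary loads and stores. The sketch below is only that: the mount point `/mnt/pmem0`, the file name, and the helper functions are hypothetical, and the code falls back to a temporary directory so it runs on machines without DCPMM. It uses only the Python standard library, not Intel's own tooling.

```python
import mmap
import os
import tempfile

# Hypothetical mount point of a DAX-enabled filesystem backed by DCPMM.
PMEM_DIR = "/mnt/pmem0"
REGION_SIZE = 4096


def open_region(directory: str, name: str = "example.dat",
                size: int = REGION_SIZE):
    """Create (or reuse) a file of `size` bytes and map it into memory."""
    path = os.path.join(directory, name)
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, size)        # keeps existing contents if same size
        region = mmap.mmap(fd, size)  # shared mapping: stores reach the file
    finally:
        os.close(fd)                  # the mapping stays valid after close
    return path, region


def write_record(region: mmap.mmap, payload: bytes) -> None:
    """Store a length-prefixed record at offset 0 and flush it."""
    region[0:4] = len(payload).to_bytes(4, "little")
    region[4:4 + len(payload)] = payload
    region.flush()  # ask the OS to make the stores durable


def read_record(region: mmap.mmap) -> bytes:
    """Read back the length-prefixed record stored at offset 0."""
    length = int.from_bytes(region[0:4], "little")
    return bytes(region[4:4 + length])


# Fall back to a temporary directory when no DCPMM mount is present.
usable = os.path.isdir(PMEM_DIR) and os.access(PMEM_DIR, os.W_OK)
directory = PMEM_DIR if usable else tempfile.mkdtemp()

path, region = open_region(directory)
write_record(region, b"hello pmem")
region.close()

# Map the file again, as a restarted process would, and read the data back.
_, region = open_region(directory)
recovered = read_record(region)
region.close()
print(recovered)
```

On a regular disk-backed filesystem this is just a memory-mapped file; the point of App Direct Mode is that the same access pattern can land on the persistent modules directly through the memory channels.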

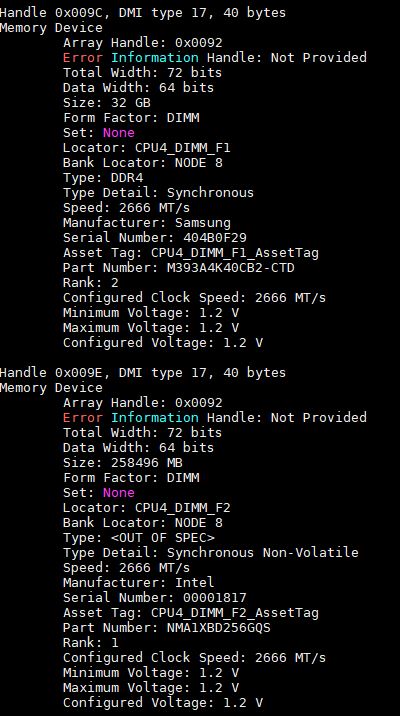

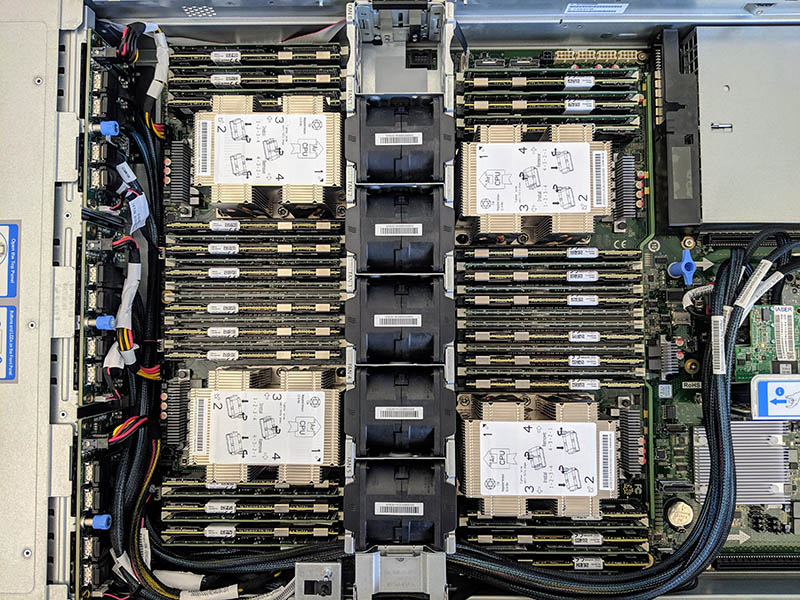

Here is an example of a 32GB DIMM next to a 256GB Intel Optane DCPMM in a system.

Intel’s vision of how an organization may use this looks something like this. Here we have 24x 32GB DDR4 RDIMMs alongside 24x 256GB Intel Optane DCPMM modules in a quad socket 2nd Gen Intel Xeon Scalable platform.
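The capacity arithmetic for that configuration can be sketched as follows. The class and method names are illustrative, and the sketch ignores mixed-mode setups: it assumes all DCPMM capacity is used either in Memory Mode, where DRAM acts as cache and does not add to visible memory, or in App Direct Mode.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryConfig:
    """Module counts and per-module sizes (GB) for a whole system."""
    dram_modules: int
    dram_gb: int
    dcpmm_modules: int
    dcpmm_gb: int

    @property
    def dram_total_gb(self) -> int:
        return self.dram_modules * self.dram_gb

    @property
    def dcpmm_total_gb(self) -> int:
        return self.dcpmm_modules * self.dcpmm_gb

    def memory_mode_visible_gb(self) -> int:
        # DRAM is a cache in front of DCPMM, so only DCPMM capacity counts.
        return self.dcpmm_total_gb

    def app_direct_gb(self) -> tuple:
        # (volatile DRAM, persistent DCPMM) exposed side by side.
        return self.dram_total_gb, self.dcpmm_total_gb


# The quad socket example from the text: 24x 32GB RDIMM + 24x 256GB DCPMM.
config = MemoryConfig(dram_modules=24, dram_gb=32,
                      dcpmm_modules=24, dcpmm_gb=256)

print("DRAM total (GB):", config.dram_total_gb)
print("DCPMM total (GB):", config.dcpmm_total_gb)
print("Memory Mode visible (GB):", config.memory_mode_visible_gb())
print("DCPMM to DRAM ratio:", config.dcpmm_total_gb / config.dram_total_gb)
```

For this example, that works out to 768GB of DRAM in front of 6TB (6144GB) of DCPMM, an 8:1 ratio.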

Each of the Intel Optane DCPMM modules offers hardware encryption, so one cannot simply take a device out of a server and have access to the contents of memory. In Memory Mode, this effectively removes their persistence.

Endurance is rated for 100% module write speed for five years. We were told that the quality of die for the Intel DCPMM is higher than on the traditional M.2, U.2, and AIC Optane NVMe SSDs.

The impact of this new memory technology can be substantial, offering lower latency and higher bandwidth than NVMe. Systems can also interleave the modules, which increases bandwidth substantially.

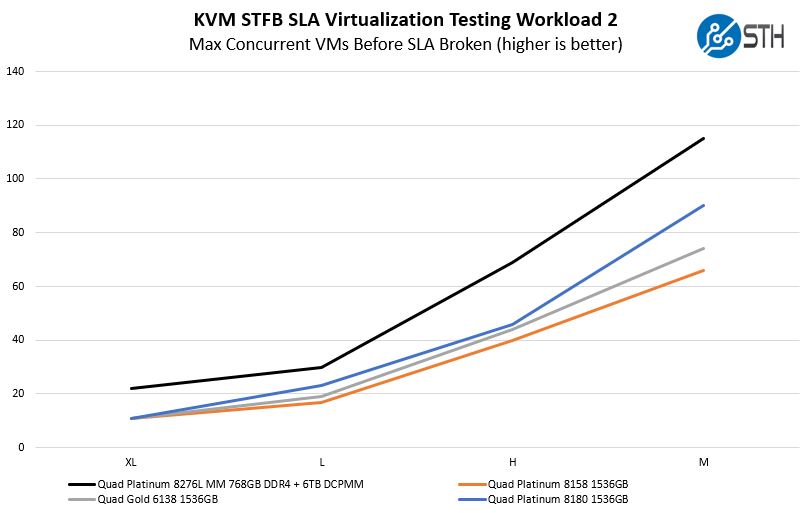

In our own memory mode KVM virtual machine testing that we highlighted recently with our Supermicro SYS-2049U-TR4 4P server review, we saw substantial performance improvements.

STH STFB KVM Virtualization Testing with Optane DCPMM

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application itself being tested is closed source. This is a KVM virtualization based workload where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker.

Here the memory requirements are considerable, so simply having enough memory becomes the driving factor of how many VMs can operate on the machine at one time.

We will have significantly more on Intel Optane DCPMM, how the modules work, and their performance in a dedicated piece.

Next, we will discuss security hardening and Intel DL Boost. We will end with a discussion of the new Intel Xeon Platinum 9200 series followed by our final thoughts.

Intel Ark lists a single AVX512 FMA Unit for the Gold 52xx series so that’s probably what you get in retail.

I’m looking at the performance effect of NVM like Optane DCPMM in a cloud environment. I’m configuring a multiple virtual machine environment and struggling with the input workload. I’m wondering what the “KVM Virtualization STH STFB Benchmark” is, and how I can get detailed workload information.