This week, Oracle announced some figures on its AI compute plans. When it comes to AI operations, Oracle and NVIDIA plan multi-ZettaFLOPS scale compute in 2025. That is ahead of the ZettaFLOPS plans for 2026-2027 from Raja Koduri, formerly Intel’s accelerated computing chief. Getting there is going to take Oracle operating huge Blackwell GPU clusters.

2025 ZettaFLOP Scale Compute as Oracle Looks to Operate Hundreds of Thousands of NVIDIA GPUs

Just pulling out Oracle’s statements on how big its rentable AI clusters will be:

- OCI Superclusters with H100 GPUs can scale up to 16,384 GPUs with up to 65 ExaFLOPS of performance and 13Pb/s of aggregated network throughput

- OCI Superclusters with H200 GPUs will scale to 65,536 GPUs with up to 260 ExaFLOPS of performance and 52Pb/s of aggregated network throughput and will be available later this year.

- OCI is now taking orders for the largest AI supercomputer in the cloud—available with up to 131,072 NVIDIA Blackwell GPUs—delivering an unprecedented 2.4 zettaFLOPS of peak performance.

- OCI Superclusters with NVIDIA GB200 NVL72 liquid-cooled bare-metal instances will use NVLink and NVLink Switch to enable up to 72 Blackwell GPUs to communicate with each other at an aggregate bandwidth of 129.6 TB/s in a single NVLink domain. (in 2025) (Source: Oracle)

Adding up the GPU counts in the first three statements alone gives 212,992 GPUs. Our sense is that the above figures are based on network topologies, as 128 squared is 16,384, 65,536 is four times that figure, and 131,072 is double that again.
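For those who want to check our math, here is a minimal Python sketch of the arithmetic above. The GPU counts come straight from Oracle's statements; the variable names and the `is_power_of_two` helper are ours, purely for illustration, and nothing here reflects how Oracle actually lays out its network.

```python
# GPU counts per cluster tier, copied from Oracle's statements quoted above.
gpu_counts = {"H100": 16_384, "H200": 65_536, "Blackwell": 131_072}


def is_power_of_two(n: int) -> bool:
    """Return True when n is a positive power of two (illustrative helper)."""
    return n > 0 and n & (n - 1) == 0


# Sum of the three quoted cluster sizes.
total_gpus = sum(gpu_counts.values())
print(f"Total GPUs across the three tiers: {total_gpus:,}")

# The relationships we pointed out between the tiers.
print("128 squared equals the H100 tier:", 128**2 == gpu_counts["H100"])
print("H200 tier / H100 tier:", gpu_counts["H200"] // gpu_counts["H100"])
print("Blackwell tier / H200 tier:", gpu_counts["Blackwell"] // gpu_counts["H200"])

# Every tier is a power of two, which is what hints at a topology-driven limit.
for name, count in gpu_counts.items():
    exponent = count.bit_length() - 1
    print(f"{name}: {count:,} GPUs, power of two: {is_power_of_two(count)} (2^{exponent})")
```

All three sizes being clean powers of two is what makes us think the limits come from the network design rather than from a power or floor-space budget, but that is our read, not something Oracle stated.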

We know that many will still focus on the HGX B200 in the next generation, with its higher GPU-to-CPU ratio, while the GB200 NVL72 is focused more on inference, but it will be interesting to see how that shakes out.

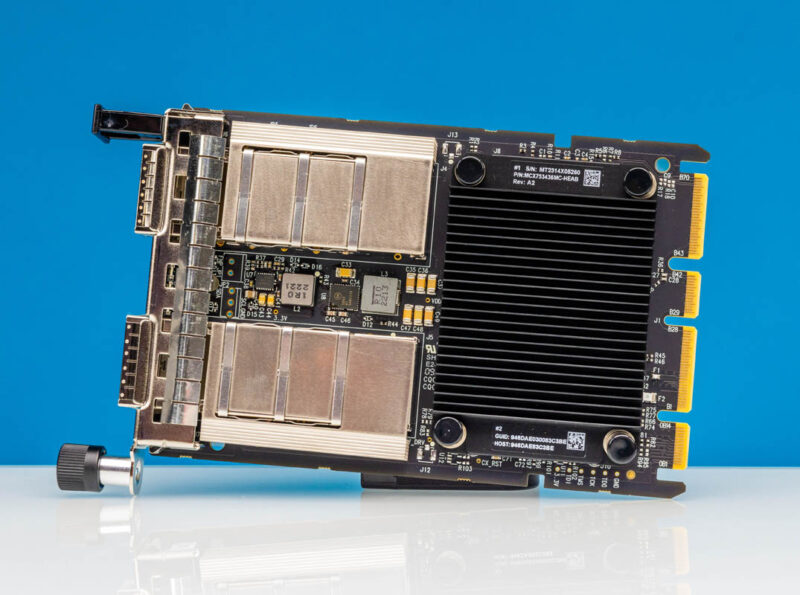

Oracle also discussed using NVIDIA Quantum-2 InfiniBand, RoCEv2, NVIDIA ConnectX-7 NICs, and ConnectX-8 SuperNICs. It appears as though Oracle will be using NVIDIA’s networking stack, but there is no mention of BlueField-3 on the DPU side, even though Oracle announced using BlueField-3 DPUs last year. Oracle also uses other DPUs, so that omission is notable.

Perhaps the coolest part of the new announcement is that it is bringing ZettaFLOPS scale computing quickly. Clearly, this is not double precision FP64 FLOPS. At the same time, the sheer quantity of calculations is exciting.
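To put a number on that, a rough sketch is to divide each quoted peak figure by the quoted GPU count. The cluster figures are Oracle's; the unit constants and names are ours, and we are assuming the totals are simple per-GPU peak multiplied by GPU count, which Oracle does not spell out.

```python
# SI prefixes as plain integers so the unit conversions stay explicit.
PETA = 10**15
EXA = 10**18
ZETTA = 10**21

# (GPU count, quoted peak FLOPS) per tier, from Oracle's statements.
clusters = {
    "H100": (16_384, 65 * EXA),
    "H200": (65_536, 260 * EXA),
    "Blackwell": (131_072, 2.4 * ZETTA),
}

# Implied peak per GPU in PFLOPS, assuming the cluster total is per-GPU
# peak times GPU count (our assumption, not an Oracle statement).
per_gpu_pflops = {
    name: flops / gpus / PETA for name, (gpus, flops) in clusters.items()
}

for name, pflops in per_gpu_pflops.items():
    print(f"{name}: about {pflops:.2f} PFLOPS per GPU")
```

The results land at multiple PFLOPS per GPU, which only makes sense for low-precision math. The H100 and H200 tiers also work out to the same per-GPU value, so the cluster totals appear to be straight multiplication of a peak spec rather than measured results.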

Final Words

Oracle talked about using new reactors to power data centers. As our Chief Patrick noted today, there are many small reactor projects active in the US, and there are active discussions about them for data centers, but regulatory hurdles will make them hard to have ready for these 2025 clusters. Oracle released a video of its OCI Superclusters that showed very low-density AI compute with 8 GPUs per rack. To scale to 100,000+ GPU clusters, Oracle will have to both secure power and move to much higher-density rack configurations. The Supermicro 4U Universal GPU System is already being deployed at 8 systems per rack using the Supermicro Custom Liquid Cooling Rack in 100,000+ GPU clusters today.
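To get a feel for what density means at this scale, here is a back-of-the-envelope rack count for the largest quoted cluster. The 8 GPUs per rack and 8 systems per rack figures are from the paragraph above. The 8 GPUs per Supermicro system and one 72-GPU NVLink domain per GB200 NVL72 rack are our assumptions for illustration, as is every name in the code.

```python
import math

# Largest cluster size Oracle quoted.
TARGET_GPUS = 131_072

# GPUs per rack for each scenario.
gpus_per_rack = {
    # From Oracle's video as described above.
    "low density (8 GPUs per rack)": 8,
    # ASSUMPTION: 8 GPUs per system, times the 8 systems per rack noted above.
    "8 systems per rack, liquid cooled": 8 * 8,
    # ASSUMPTION: one 72-GPU NVLink domain per GB200 NVL72 rack.
    "GB200 NVL72": 72,
}

# Round up because a partially filled rack is still a rack.
racks_needed = {
    name: math.ceil(TARGET_GPUS / per_rack)
    for name, per_rack in gpus_per_rack.items()
}

for name, racks in racks_needed.items():
    print(f"{name}: {racks:,} racks for {TARGET_GPUS:,} GPUs")
```

This only counts GPU racks. Networking, storage, and cooling distribution racks would add to the total, so treat these as lower bounds.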

Now it remains to be seen how this actually gets implemented.

Pretty bold claims, straight up claiming 100% scaling of FP8 performance across the entire install.