Happy 2025 STH’ers! I wanted to put this into a post since it is something we may reference later. Since it is 2025, this is a quick marker of our starting point for those buying servers, and a way to memorialize where we are at with the technology stack.

CPU Cores Per Socket

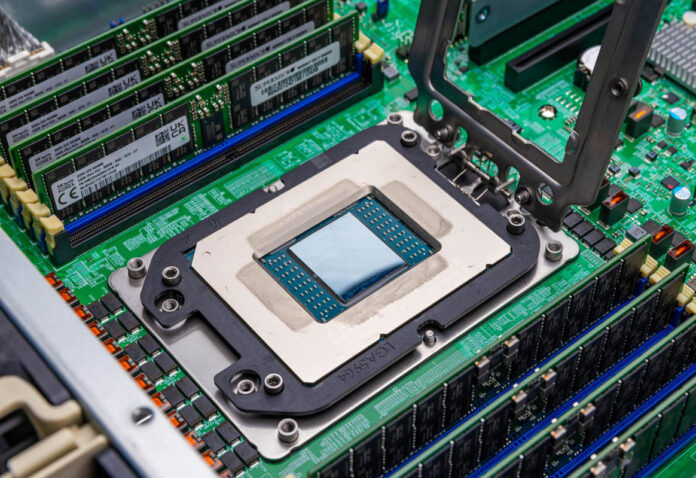

AMD is currently at 192 Turin Dense cores and 384 threads per socket, and 128 full cache and clock speed cores (256 threads) in Turin. Intel has its 128 core/ 256 thread Granite Rapids-AP and its Sierra Forest-SP at 144 cores/ 144 threads. We expect the smaller Granite Rapids-SP and the larger 288 core Sierra Forest-AP in the second half of Q1 2025, assuming that 288 core part does not become solely a special order hyper-scale part.

On the Arm side, NVIDIA Grace is still at 72 cores for the single CPU module and 144 cores for the dual chip module. Check out our Quick Introduction to the NVIDIA GH200 aka Grace Hopper piece because the memory bandwidth varies based on the capacity. Ampere Altra Max is 128 cores and is still chugging along while the AmpereOne is at 192 cores.

Networking Speeds

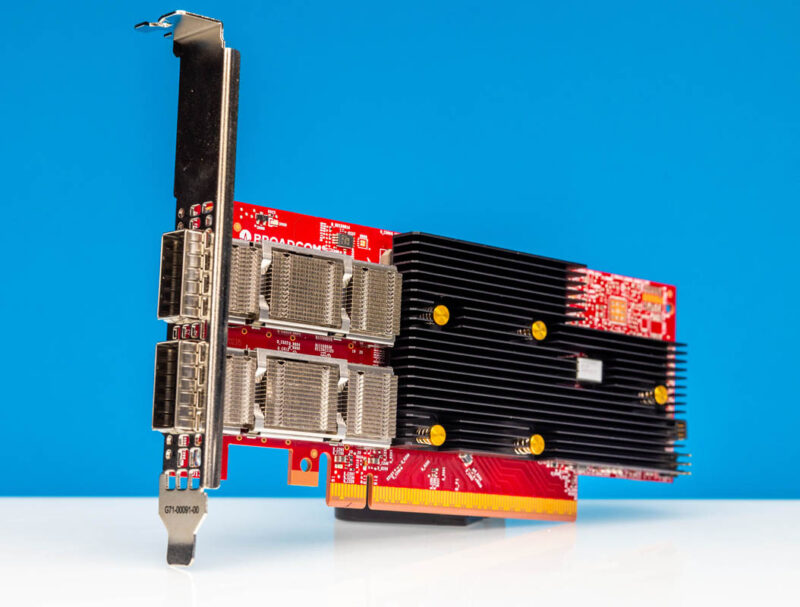

On the switching side, we are at 51.2Tbps switch silicon, although the 102.4T generation should start to emerge in the next quarter or two. We already showed the Delta DC-90640 A Next-Gen 2025 102.4T Switch.
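As a rough illustration of what those switch generations mean in port counts, here is a minimal sketch that divides the ASIC bandwidth by a per-port speed. The function name `max_ports` and the list of port speeds are our own choices for illustration, and real switches may use different port configurations or breakouts.

```python
def max_ports(switch_tbps: float, port_gbps: int) -> int:
    """Number of full-rate ports a switch ASIC can expose at a given port speed."""
    total_gbps = round(switch_tbps * 1000)  # Tbps -> Gbps, rounded to avoid float noise
    return total_gbps // port_gbps


for asic_tbps in (51.2, 102.4):
    for port_gbps in (400, 800, 1600):
        ports = max_ports(asic_tbps, port_gbps)
        print(f"{asic_tbps}T ASIC: {ports} x {port_gbps}G ports")
```

By this arithmetic, a 51.2T chip works out to 64 ports of 800G, and a 102.4T chip doubles that to 128.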

For client networking, we expect to stay at 400GbE for the near future. A PCIe Gen5 x16 slot tops out at roughly 500Gbps, so it will take PCIe Gen6 or multi-host adapters to get to 800Gbps speeds.

Something that will change is that 25GbE now needs only about a PCIe Gen5 x1 link's worth of bandwidth, which makes it harder to justify deploying given how inefficiently it uses PCIe lanes. 100GbE is becoming the new 25GbE, but the AI space is really pushing 400Gbps networking to efficiently use PCIe lanes.
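The PCIe lane math behind these NIC speeds can be sketched quickly. This is a back-of-the-envelope estimate for a single port, assuming the published per-lane rates (32 GT/s for Gen5, 64 GT/s for Gen6) and 128b/130b encoding on Gen4/Gen5. It ignores packet and protocol overhead, and treats Gen6 as 1:1, so real-world throughput will be somewhat lower. The helper names are ours.

```python
import math

# Raw per-lane signaling rates in GT/s for each PCIe generation.
GT_PER_LANE = {4: 16.0, 5: 32.0, 6: 64.0}
STANDARD_WIDTHS = (1, 2, 4, 8, 16)


def lane_gbps(gen: int) -> float:
    """Approximate Gbps per lane, per direction.

    Gen4/Gen5 use 128b/130b encoding. Gen6 is treated as 1:1 here, which
    ignores FLIT/FEC overhead (a simplifying assumption).
    """
    rate = GT_PER_LANE[gen]
    return rate * 128 / 130 if gen <= 5 else rate


def slot_width(nic_gbps: float, gen: int):
    """Smallest standard link width that can carry nic_gbps, or None."""
    lanes = math.ceil(nic_gbps / lane_gbps(gen))
    for width in STANDARD_WIDTHS:
        if width >= lanes:
            return width
    return None  # does not fit in a single x16 link


for speed in (25, 100, 400, 800):
    gen5 = slot_width(speed, 5)
    gen6 = slot_width(speed, 6)
    print(f"{speed}GbE -> Gen5: {gen5}, Gen6: {gen6}")
```

Under these assumptions, a single 25GbE port fits in a Gen5 x1 link, 400GbE needs a full Gen5 x16, and 800Gbps does not fit in a Gen5 x16 at all but does fit in a Gen6 x16.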

SSDs and HDD Capacities

Hard drives are still progressing slowly as we have seen the launch of a few 30TB class models. On the other hand, SSDs are growing in capacity at an immense pace. We discussed in our Solidigm D5-P5336 61.44TB SSD Review how hard drives lost. The 122.88TB generation is upon us with multiple vendors starting to ship. Those might be sold out for 2025.

Around the corner is the 245.76TB generation and that is coming faster than many think.
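To put those capacity points in perspective, here is a small sketch counting how many drives it takes to reach 1PB of raw capacity at each generation. It assumes decimal units (1PB = 1000TB) and ignores RAID, erasure coding, and spares. The 30TB figure stands in for the "30TB class" hard drives mentioned above.

```python
import math


def drives_per_pb(drive_tb: float, target_tb: float = 1000.0) -> int:
    """Drives needed to reach target_tb of raw capacity (no redundancy)."""
    return math.ceil(target_tb / drive_tb)


capacities_tb = {
    "30TB class HDD": 30.0,
    "61.44TB SSD": 61.44,
    "122.88TB SSD": 122.88,
    "245.76TB SSD": 245.76,
}

for name, tb in capacities_tb.items():
    print(f"{name}: {drives_per_pb(tb)} drives per raw PB")
```

Each SSD generation doubles the previous one, so the drive count per petabyte roughly halves each step: 17, then 9, then 5, versus 34 of the 30TB hard drives.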

Server Memory

While most memory today tops out at DDR5-6400, capacity per DIMM has really stalled. We are still seeing a lot more 64GB and 128GB DIMMs being used than one probably would have expected back in 2020. Instead of increasing capacity per DIMM, we are seeing servers offer 12 memory channels. On the speed side, the eventual addition of MCR DIMMs will be a big deal.
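A quick sketch of why channel count and DIMM speed matter: theoretical peak bandwidth per socket is the transfer rate times 8 bytes per transfer (a 64-bit data path per channel) times the number of channels. The 8800 MT/s entry is only an illustrative assumption for a faster MCR-style DIMM, not a figure from this article, and the capacity math assumes one DIMM per channel.

```python
BYTES_PER_TRANSFER = 8  # 64-bit data path per DDR5 channel (ECC bits excluded)


def peak_bandwidth_gbs(mt_per_s: int, channels: int) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s."""
    return mt_per_s * BYTES_PER_TRANSFER * channels / 1000


def capacity_gb(dimm_gb: int, channels: int, dimms_per_channel: int = 1) -> int:
    """Total memory capacity per socket in GB."""
    return dimm_gb * channels * dimms_per_channel


print(f"DDR5-6400, 8 channels:  {peak_bandwidth_gbs(6400, 8):.1f} GB/s")
print(f"DDR5-6400, 12 channels: {peak_bandwidth_gbs(6400, 12):.1f} GB/s")
# Hypothetical faster DIMM speed, used here only to show the scaling.
print(f"8800 MT/s, 12 channels: {peak_bandwidth_gbs(8800, 12):.1f} GB/s")

for dimm in (64, 128):
    print(f"12 x {dimm}GB DIMMs: {capacity_gb(dimm, 12)} GB per socket")
```

Going from 8 to 12 channels of DDR5-6400 raises the theoretical peak from 409.6 GB/s to 614.4 GB/s per socket. Measured bandwidth will be lower than these peaks.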

Perhaps the bigger trend is that we are seeing a lot more on the CXL memory front. We have been seeing designs utilize CXL Type-3 devices for memory expansion which is great to see.

GPUs for AI

There are plenty of AI accelerators out there, but the GPUs are the big one. NVIDIA is transitioning from the HGX H100/ H200 platforms to GB200 platforms for those customers that are starting to receive them. For those who are not huge customers, the Hopper generation is still it.

AMD is transitioning from the Instinct MI300X to the MI325X generation. On the APU side, it seems like the MI300A has become part of El Capitan, but we have not heard about a major refresh yet. AMD’s main architecture is an 8-way OAM platform with two AMD EPYC CPUs and direct Infinity Fabric links.

Intel still says Falcon Shores is coming in 2025.

Final Words

At the rack level, a top-end AI rack is in the 120-140kW range, which will be quaint by 2027. Hopefully, that covers most of the big system components. In the future, when we look back on the start of 2025, we at least have this memorialized snapshot of where we started the year.

“100GbE is becoming the new 25GbE, but the AI space is really pushing 400Gbps networking to efficiently use PCIe lanes.”

I would love for more expansion on this line of thought.

Are you saying all standalone servers sold by hardware vendors are going to start including 100GbE because it’s fully commoditized like 10GbE and 25GbE before it? So a typical Dell 1U will come with 100GbE because it doesn’t make financial sense to go any slower?

I second James’ comment. Please elaborate on this.

@James

More like 100GbE will become the aspirational standard for new deployments

Without PCH you’re now getting almost all full speed Gen 5.0 lanes. So if you’ve got a dual 25G NIC you’re using only 2 lanes of Gen 5.0 but I don’t think there’s a controller less than x8 so you’re wasting at least 6 lanes. Dual 100G is only Gen 5.0 x4 and the 51.2T gen of switch chips stopped supporting under 100G.

That’s why you’re seeing more servers with OCP and they’re not coming with built-in NICs as much, because you’re getting 100G+ networking and nobody other than the cheap people are buying Intel E810

It’s fascinating to see how rapidly AI infrastructure is evolving. A top-end AI rack consuming 120-140kW today will indeed seem modest by 2027. This reflection on our current capabilities serves as a valuable benchmark for future advancements. Exciting times ahead in the world of AI!

Just wait ’til Intel releases their LGA-9324 Xeon 7 series with Diamond Rapids. Pat’s REALLY gonna flip out. Mainframe-sized CPUs, here we come. :-)

I think this is a pretty good starting point.

It’s hard to find people who know much about this topic, but you do!