At ISC 2022, HPE had on display the Cray EX node that will be featured in the Argonne Aurora supercomputer. This is a >2 EF system that we expect to see on the Top500 in 2023, possibly after some major work to the nodes. Still, it was exciting to see the next-gen platform in Hamburg, Germany this week.

A Look at a 2023 Top500 Aurora Supercomputer Node with Intel Ponte Vecchio

Looking at the front of the blades, these are HPE Cray EX style blades with the input fluid (blue) and return (red) nozzles for cooling in the same place they are on the AMD-based nodes. One of the big differences is that while this was being shown in the HPE booth, the naming on the front is “Intel” instead of HPE or Cray.

There is a key difference though. When we look at the AMD blade open on the table, it is clear that the Intel version is being shown with the opposite side opened up. It almost seems like the systems are flipped since we are looking at a different side of the blade from the AMD version, yet we can still see many of the similar features. To help orient, you can see the blade latches, handle, and cooling fluid nozzles.

We did not see the first hint of this until reviewing this photo. The labels for the ports are upside-down in the view exposing the Slingshot mezzanine NICs (codenamed “Sawtooth”). Here we can see the four connections for Slingshot networking corresponding to the four Sawtooth Slingshot NICs. There is an HV Bus Bar for power. We also have a CMM connector. CMM is often used for chassis management in the industry.

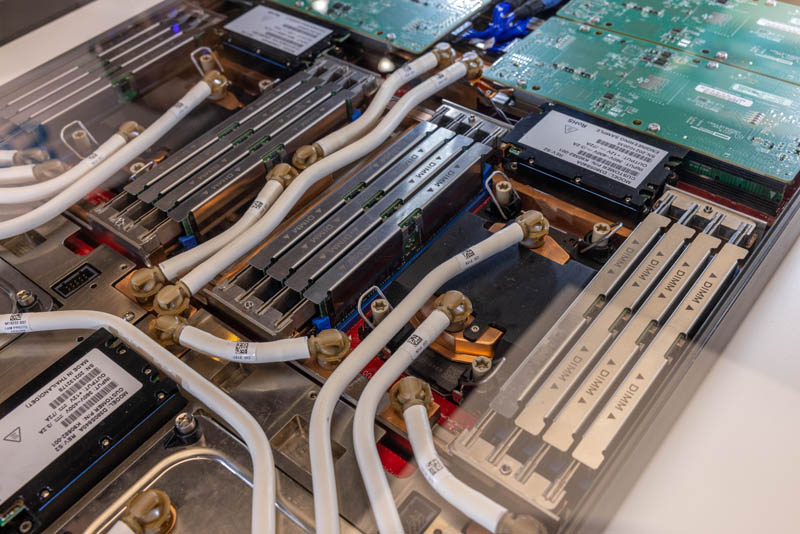

In the AMD Frontier node, the NICs are at either end of the CPU. In the Aurora node, they are at the rear of the chassis behind the Sapphire Rapids CPUs.

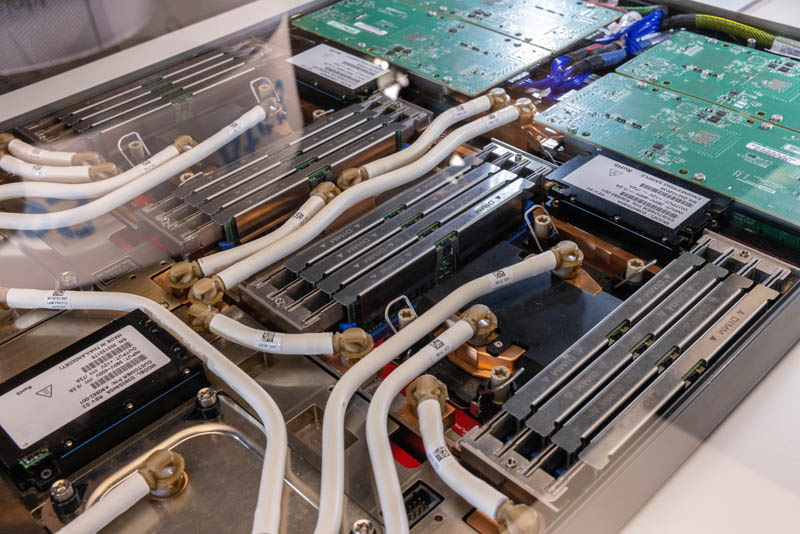

Here is a view of the 4th Generation Intel Xeon Scalable Sapphire Rapids CPU sockets with a similar retention mechanism to the 3rd Generation Xeons (Cooper Lake and Ice Lake). We expect AMD Genoa-based nodes to have a fairly substantial heatsink retention re-work, much more than Intel is doing in this generation.

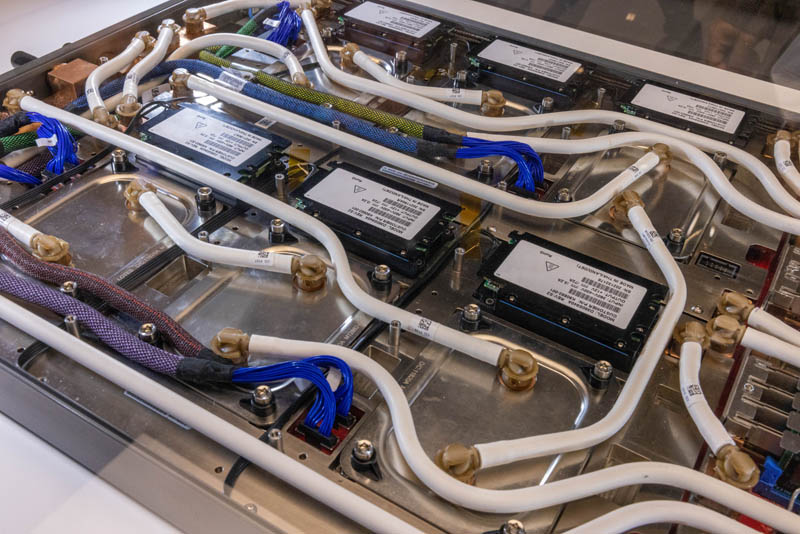

One other item that is significantly different is that the cooling solution is not branded. We saw the CoolIT branding on the Frontier AMD nodes. Here we have a bunch of white tubes without branding. The cold plates seem to be much lower profile and the fittings go up from the top of the cold plates and then out at a 90-degree angle. In the AMD-based systems, there are larger cold plates and the nozzle fittings are oriented parallel to the motherboard plane.

The red PCB under the OAM cold plates tells us this is an engineering sample board, as red PCBs usually are in this space, and that is to be expected.

The cold plates appear to have “Lotes” on them and that is a bit surprising. Lotes should be familiar to STH readers as the brand is often shown as a CPU socket maker when we look at servers and motherboards.

One feature that stuck out as appearing completely different between the SPR-PVC node and the Frontier EPYC MI250X node is the presence of many black boxes.

From what we saw, each PVC GPU had one of these black boxes, and there was also one between each Sapphire Rapids Xeon socket and the Sawtooth NICs.

These boxes had model numbers of D380S840A on them. That looks like a Delta power supply number. Each box has “Input 360V-400V 3.2A” and “Output 12V 72A” on the label also pointing to the possibility these are Delta power boxes.
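To put those label figures in perspective, here is a quick back-of-the-envelope sketch in Python. It only uses the numbers printed on the label. Treating the 3.2A input figure as a maximum current rating across the 360V-400V range is our assumption, and the function names are ours for illustration. The result is a rough rating envelope, not a measured power draw or efficiency figure.

```python
# Label values quoted above for the D380S840A boxes.
INPUT_VOLTS_MIN = 360.0   # lower end of the labeled input range
INPUT_VOLTS_MAX = 400.0   # upper end of the labeled input range
INPUT_AMPS_MAX = 3.2      # assumption: a maximum input current rating
OUTPUT_VOLTS = 12.0
OUTPUT_AMPS = 72.0


def rated_output_watts(volts=OUTPUT_VOLTS, amps=OUTPUT_AMPS):
    """Rated output power from the label: P = V * I."""
    return volts * amps


def input_envelope_watts(v_min=INPUT_VOLTS_MIN,
                         v_max=INPUT_VOLTS_MAX,
                         amps=INPUT_AMPS_MAX):
    """Upper bounds on input power at each end of the labeled voltage range."""
    return (v_min * amps, v_max * amps)


if __name__ == "__main__":
    low, high = input_envelope_watts()
    print(f"Rated output: {rated_output_watts():.0f} W")
    print(f"Input rating envelope: {low:.0f} W to {high:.0f} W")
```

At 12V and 72A, each box is rated for 864W of output, which is consistent with a high-voltage to 12V converter sitting next to each GPU rather than a small auxiliary supply.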

What is very clear is that the Aurora node may share a physical footprint with the Frontier node in the HPE Cray EX platform, but it is a very different design.

Final Words

From what we are hearing, just given the time to deliver all of the nodes, get the new architecture and system running, and then do official Top500 HPL runs with tuning iterations, this will not be a system seen on the SC22 Top500 list. One factor accelerating the timeline for Aurora is that the new HPE Cray Slingshot interconnect is already running with Frontier, so Aurora will not need to be the leading edge on that new technology.

It also seems like the plan is still to do a two-step Aurora installation: installing the Aurora nodes with Sapphire Rapids, then pulling those CPUs from their sockets and installing the Sapphire Rapids HBM parts. That sounds like a fun process with the pre-filled liquid loops that HPE is showing.

Although we still have some time between now and when the system launches, it seems like Aurora was ready to receive nodes when we discussed the project with Rick Stevens of Argonne and Raja Koduri of Intel a few weeks ago in Dallas during Intel Vision 2022. Now we get to wait for the installation and a run that hopefully gets Aurora on next year's ISC 2023-timed Top500 list.

Hi Patrick,

It was nice to finally meet you in person at ISC.

What is your opinion on all this liquid piping and all the joints within the nodes? Do you have any industry numbers on how reliable they are? Looking at the sheer number of them, it feels like there will be at least one leak per year per rack, even if they’re 99.999% reliable.

This industry badly needs someone like Sandy Munro (check his Munro Live YouTube channel) to get it in shape on the engineering side of cooling. DLC as it is looks horrible. In fact, there was only one example on the ISC exhibition floor that I considered well done: a Sequana Intel three-node tray with proper heat pipes, no hoses and no joints. That’s how things should be done.

Can you help push the industry a bit in this direction? Thanks.

@jpecar – do not worry. If it springs a leak once a year in each rack, Intel will have a shiny marketing name for something to deal with that. The something will be stupidly pricy, consume twice to thrice as much power as reasonable and will be about five years late in delivery.

This is Aurora, after all, and the Pork Barrel would expect nothing less.

Aurora should have been given to AMD. That company at least can deliver the goods on time.