About a year ago, we published an article about DeepLearning01. For “serious” deep learning / AI work you are better off with something like DeepLearning11 (updated with Titan Vs), but that is frankly too much for many folks starting their learning journey. Our goal with DeepLearning01 was simple: provide a quiet, low power server for learning deep learning. In this piece, we are going to assess how well this build served its intended purpose.

Although it was not our intent at the time, this small system may have been the best recommendation STH has given in over 8 years. Over the ensuing year, that $1700 system handily beat the Dow Jones Industrial Average. That is quite a feat since the DJI was up about 29% over a similar timeframe.

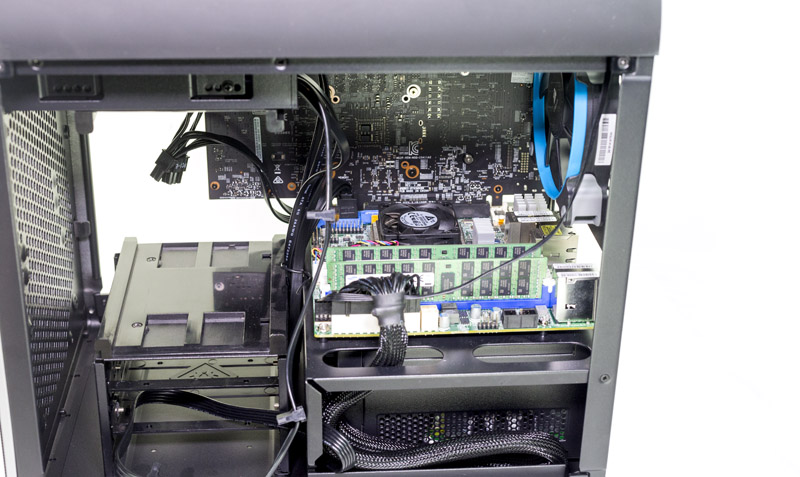

Inside the DeepLearning01 Build

We purchased this setup in late 2016 and published the list in early 2017. As a refresher, here is what the machine had internally:

- GPU: ASUS GeForce 6GB Dual-Fan OC Edition DUAL-GTX1060-O6G

- CPU / Motherboard: Intel Xeon D-1541 / Supermicro X10SDV-TLN4F (review here)

- RAM: 64GB (2x 32GB) DDR4 RDIMMs (Amazon example here)

- SSDs: 4x Intel DC S3500 480GB

- Chassis: Bitfenix Prodigy mITX Case

- Hot-swap: Icy Dock ExpressCage MB324SP-B (review here)

- Power Supply: Seasonic 520W Fanless Platinum

We had a few goals: reasonable costs, near silent operation, “fast enough,” and the ability to update in 6 months if/when our skills were honed and we were ready to take on larger datasets. At the time, we looked everywhere for best prices and ended up spending just under $1700 on this build with the expectation that we would upgrade in six months.

DeepLearning01 The Retrospective for Techies

In hindsight, this was a terrible build. We should have bought 10x (or 1000x) NVIDIA GTX 1060 6GB cards instead of one. The reason is that the ASUS GeForce GTX 1060 6GB we purchased for $236 on November 27, 2016 now regularly sells for $500-600. Cryptocurrency mining has created a general GPU shortage, driving GPU prices up.

Crypto-mining aside, this system has performed spectacularly well. We have been running dockerized TensorFlow with Keras on this platform all year, along with a few other applications. Running models on this system instead of a laptop brings huge performance and reliability boosts. Since it is compact and quiet, it is also easy to stick in a corner of an office, which was our intent.

Networking

One of the key wins with this build was the fact that we had 10Gbase-T networking. Although we are now using 40GbE NICs just about everywhere, the 10Gbase-T helped a lot. We have been using a model where datasets live on a NAS and are then copied onto the local SSD array, which acts as a cache. The difference between 10Gbase-T and 1GbE is enormous so long as your NAS is able to fill the pipe. 10Gbase-T is still not fast enough for us to recommend working directly from network storage, but it is fast enough to make scripted file transfers more tolerable.
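To put the 1GbE versus 10Gbase-T gap in rough numbers, here is a minimal back-of-the-envelope sketch. The 100GB dataset size and the `efficiency` parameter are assumptions for illustration only, not measurements from this system. Real transfers will be slower than line rate because of protocol overhead and whatever the NAS can actually deliver.

```python
def transfer_seconds(dataset_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Estimate the seconds needed to move dataset_gb gigabytes over a link.

    link_gbps is the nominal link speed in gigabits per second.
    efficiency is an assumed fraction of line rate actually achieved (0 < efficiency <= 1).
    """
    if dataset_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid dataset size, link speed, or efficiency")
    gigabits = dataset_gb * 8  # 8 bits per byte
    return gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    # Hypothetical 100GB dataset, ideal line rate on both links.
    for name, gbps in (("1GbE", 1.0), ("10Gbase-T", 10.0)):
        minutes = transfer_seconds(100, gbps) / 60
        print(f"{name}: {minutes:.1f} minutes")
```

Lowering `efficiency` (for example to 0.7) gives a more conservative estimate when the NAS cannot fill the pipe.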

Using 10Gbase-T was essentially “free” with the Intel Xeon D-1541 platform that we chose. It would be difficult using a consumer mITX platform since one would need one PCIe slot for the GPU and another for higher-speed networking. Moving to a larger form factor would have sacrificed size for more PCIe slots. If you do not have an embedded 10GbE or faster NIC, we strongly recommend one.

Storage

Building today, we would add a 1.6TB-2TB class (or larger) NVMe SSD using the M.2 slot and use that for storage. The Intel DC S3500s in RAID 10 have performed well, but used enterprise NVMe SSDs have come down in price to the point where they make more sense. Optane drives would be the next logical choice, but those are too costly for a basic build.

Our configuration today would likely use a single boot SSD and then a larger NVMe SSD for work. We keep our work output copied to the NAS, so using RAID 10 was largely unnecessary.
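For readers who want to script the "copy work output to the NAS" step, below is a minimal sketch of one way to do it in Python. It is not the script we use. The function name `mirror_new_files` is hypothetical, and the paths in the usage note are placeholders. It copies only files that are missing on the destination, differ in size, or are newer on the source, and it never deletes anything.

```python
import shutil
from pathlib import Path
from typing import List


def mirror_new_files(src: Path, dst: Path) -> List[Path]:
    """Copy new or changed files from src to dst and return their relative paths.

    A file is skipped when the destination copy has the same size and is not
    older than the source (compared at one-second resolution).
    """
    copied: List[Path] = []
    for path in sorted(src.rglob("*")):
        if not path.is_file():
            continue
        rel = path.relative_to(src)
        target = dst / rel
        if target.exists():
            s, t = path.stat(), target.stat()
            if s.st_size == t.st_size and int(s.st_mtime) <= int(t.st_mtime):
                continue  # destination already up to date
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)  # copy2 also preserves the modification time
        copied.append(rel)
    return copied
```

A call such as `mirror_new_files(Path("/data/output"), Path("/mnt/nas/output"))` (both paths are made up for this example) could be run from cron or at the end of a training job. A tool like rsync covers the same ground with far more options.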

CPU and RAM

This was likely the most controversial choice we made. Using an Intel Xeon D-1541 gave us decently powerful compute with 8 cores and 16 threads. We were also able to use standard RDIMMs, in our case 4x16GB. RAM prices went up, but this configuration worked exceptionally well.

Building today and accepting a larger form factor, an Intel Xeon Silver 4108 or Silver 4110 would be a strong choice. The single GTX 1060 node never used more than 57GB of RAM, so we feel that 64GB was the right choice for us in this small build.

Why We Would Not Build This Today

There are two reasons why this build is significantly harder to recommend in January 2018. First, as we mentioned, GPU prices have more than doubled. While that is quite a bit, RAM prices also went up. As a result, the same RAM modules now cost almost twice as much, even buying them second hand. NAND prices for the SSDs we used rose as well, even on the secondary market.

Essentially, a year later, our $1700 build from 2017 would cost around $2450 to replicate. In other words, one could part it out for a significant profit. As the overall price tag increases, so does our appetite for a larger GPU (e.g. a GTX 1080 Ti). Realistically, the complete lack of GTX 1000 series cards in the channel points to a GPU shortage, which often accompanies an impending GPU architecture launch.
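The arithmetic behind these comparisons is simple enough to check in a few lines. This sketch uses only figures quoted in this article ($1700 and $2450 for the build, $236 and $500-600 for the GPU, and roughly 29% for the Dow). The helper name `pct_change` is ours for illustration.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100.0


if __name__ == "__main__":
    djia_gain = 29.0  # approximate DJIA gain quoted in this article
    build_gain = pct_change(1700, 2450)
    gpu_gain_low = pct_change(236, 500)
    gpu_gain_high = pct_change(236, 600)

    print(f"Whole build: {build_gain:.1f}% vs DJIA {djia_gain:.0f}%")
    print(f"GTX 1060 6GB: {gpu_gain_low:.0f}% to {gpu_gain_high:.0f}%")
```

The whole build works out to roughly 44%, while the GPU alone has appreciated by more than 100%, which is why the GPU dominates the story.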

Second, we also confirmed that we expect a new Xeon D based on Skylake in Q1 2018, although production of systems may take longer. It seems like waiting a few weeks or months may be the best course. In January 2018, this makes such a system hard to recommend.

Final Words

It is an exceptionally strange period in computing. In 2017 we saw NAND SSDs, RAM, and GPUs all increase in price. The asset price of this same build has increased about 45% over 13 months, even when scouring eBay for used components. Although that appreciation makes it hard to recommend today, it also means the original piece was considerably better than we anticipated. An investment in DeepLearning01 in December 2016 would have let you work on deep learning applications, mine cryptocurrencies, and hold assets that beat the Dow Jones Industrial Average (in a great year) in terms of price appreciation.

On a more serious note, although we ended up moving to larger 8x and 10x GPU systems and GTX 1080 Ti’s almost everywhere, this small nearly silent system has performed extremely well for us over the last year. If you are looking to build a comparable system for an initial foray into deep learning, this example worked great.

That’s totally true. We bought over 100 1080 Ti’s after your DeepLearning10 article this summer and they’re selling for 50% more on eBay and Amazon now.

Cheeky title but it was a fun holiday read.

Jeez 100 of them? So 50k in GPUs?

Current state of industry is simply sick. Twice the money for GPU, twice the money for RAM. W/o EPYC we would be on the same perf like few years ago with cosmetic marketing gimmick played by Intel. Where is innovation and natural price drop?

Intel is the only relevant stock in the DJIA and it is too diversified to matter. Perhaps Microsoft & Cisco but they aren’t really semiconductor companies anyway.

A better comparison would be the Semiconductor Index represented by the SMH exchange traded fund which is up exactly the same amount as the DeepLearning01 box you created since Dec 2016 — 44% (a strange coincidence perhaps).

nVidia stock (NVDA) is up 110% since 12/01/2016 & Micron stock (MU) is up 99% since then as well.

What is this?!? I thought you used your modest deep learning system in some kind of trading system to beat the Dow Jones by over 29% !!! Man…I feel busted!!! LOL

I am in the process of building a trading system using multiple 1080ti’s and yes..NVidia is really kick ass!!!

Bring on 2018 and the 2080ti…

Cheers!!

I thought you used your deep learning system in some kind of trading to beat the Dow Jones, too! LOL

I’ve built my system based on Ryzen and a 1080 Ti for deep learning, though I didn’t learn a lot after that system was built.