At OCP Summit 2019, Intel and Facebook showed off two platforms that, if you connect the dots, may be extremely powerful. They did not appear together at the show. In this article, we are going to suggest that maybe they should. Intel previously announced the NNP-I 1000 during CES, but it was branded NNP I-1000 during OCP Summit 2019. At the CES announcement, Facebook noted its joint development.

The Intel Nervana NNP I-1000

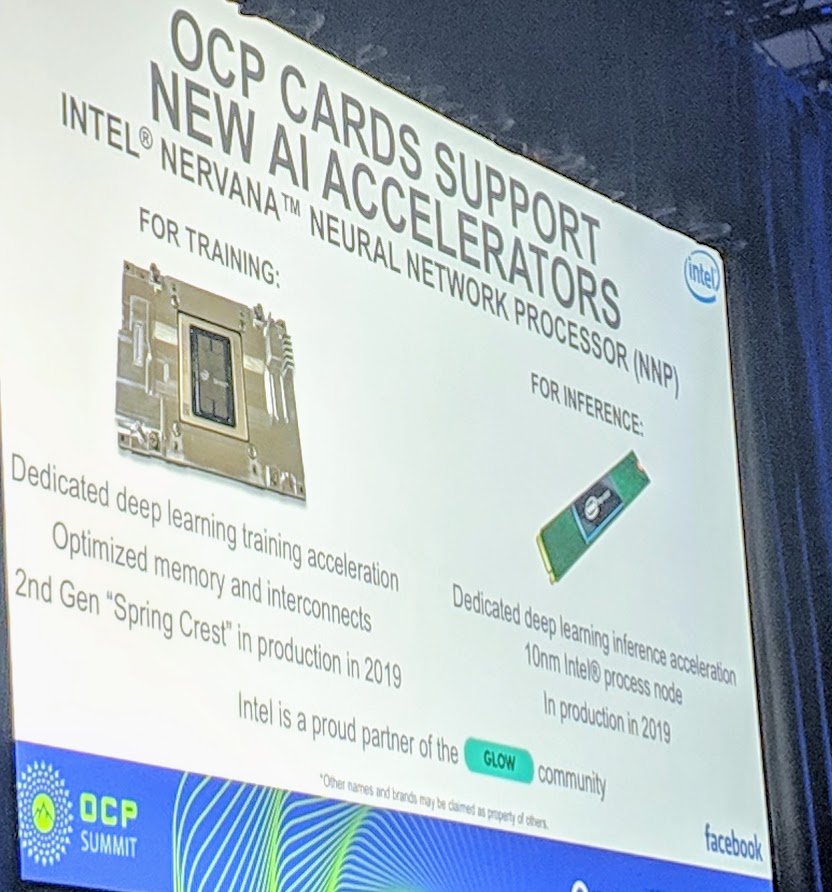

During the Intel OCP Summit 2019 Keynote, the company showed this slide on the two prongs of their strategy. Our piece, Intel Nervana NNP L-1000 OAM and System Topology a Threat to NVIDIA, covered the first prong. The Intel Nervana NNP I-1000 is not designed for training but rather for inferencing.

The Glow ML compiler is Facebook’s answer to the problem of how to support multiple different architectures, including the NNP lines.

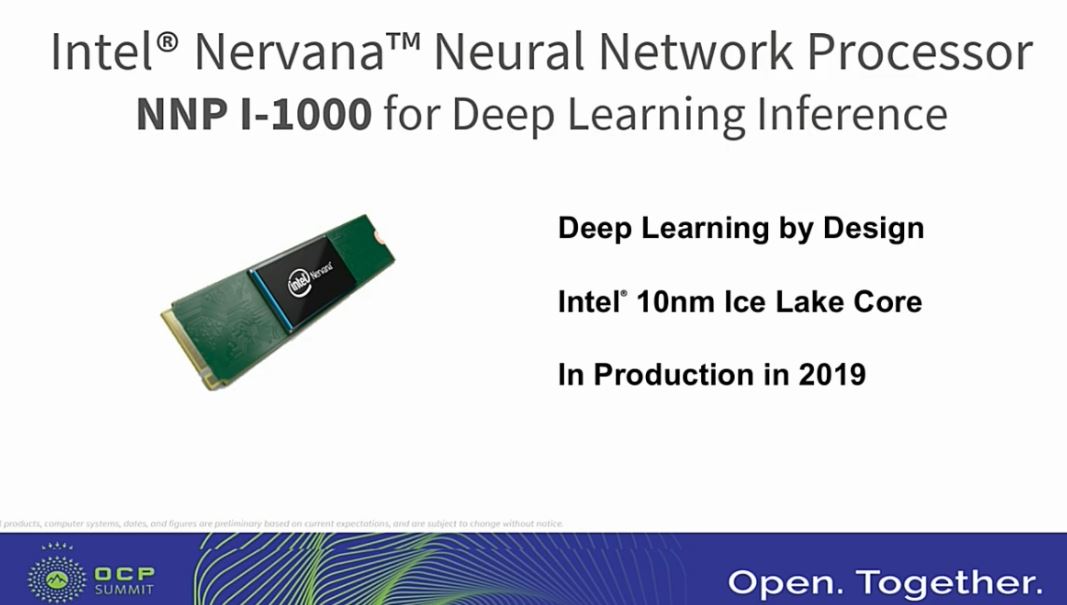

The key detail shown is that the Intel NNP I-1000 is an M.2 device based on 10nm Ice Lake.

Intel and Facebook clearly see the M.2 module as the way forward. Let us explore that for a moment.

Plausible Facebook Intel Nervana NNP I-1000 M.2 Platform

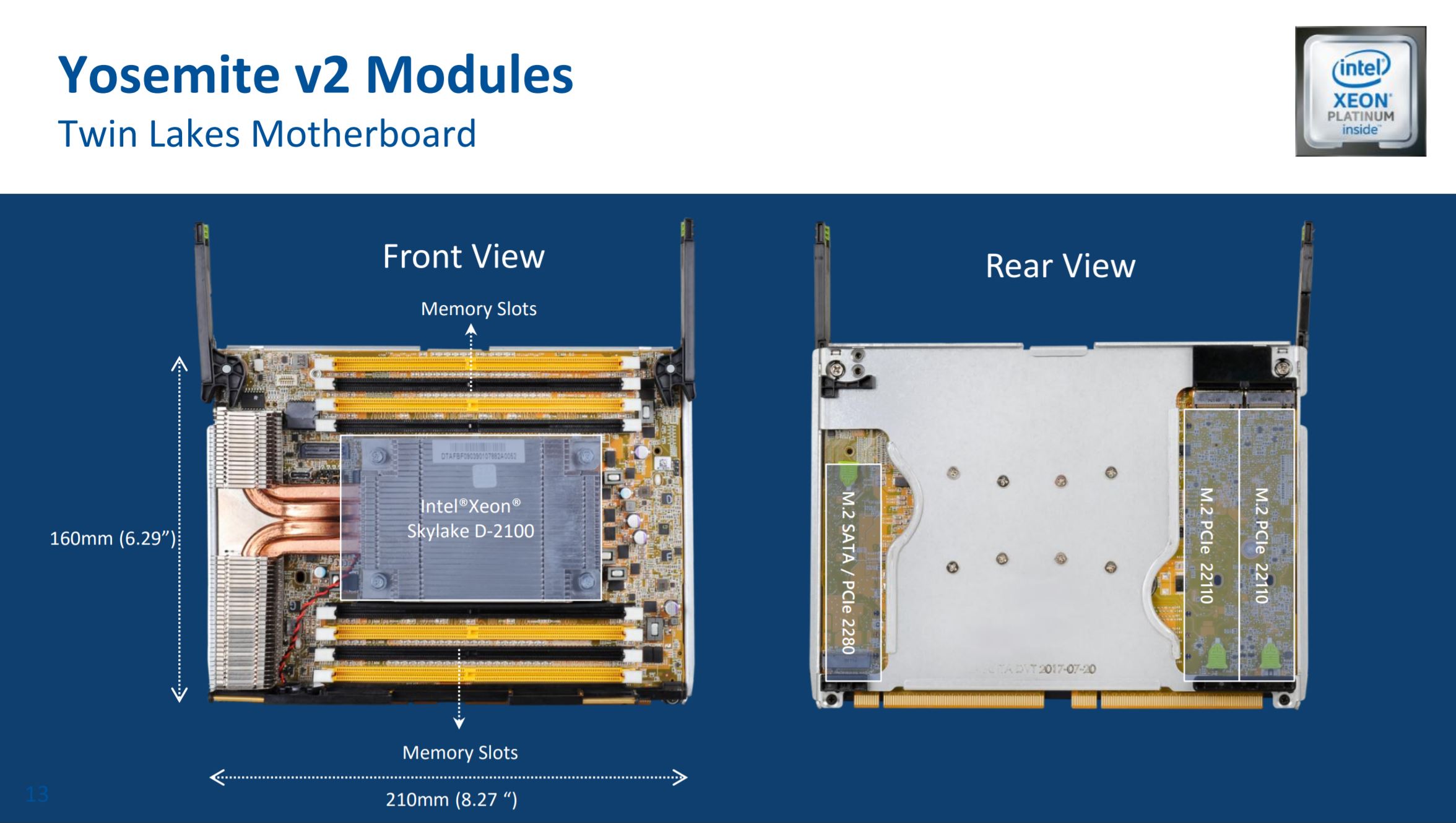

At first, one may assume that the Intel Nervana NNP I-1000 M.2 module will be a direct addition to the Yosemite V2. If you look at the QCT slides on the Yosemite V2 Twin Lakes motherboard, you will see there are two M.2 22110 slots that may be a good fit for the NNP I-1000 modules, although the first use was likely local NVMe.

You will notice that there is an “Intel Xeon Platinum inside” logo on QCT’s slide. We saw in our Intel Xeon D-2183IT Benchmarks and Review that Skylake-D (Xeon D-2100 series), despite Intel’s specs, has dual port FMA AVX-512 like the Xeon Platinum series. Even so, we do not believe this is an intentional marketing leak by Quanta as we saw with the Next-Gen Intel Cascade Lake 28C Supporting 3.84TB Memory last year. Instead, this is likely just a copy-paste branding mistake. To our readers, do not read into that Xeon Platinum logo too deeply. Instead, look at the 2018 platform shown off at OCP Summit 2019.

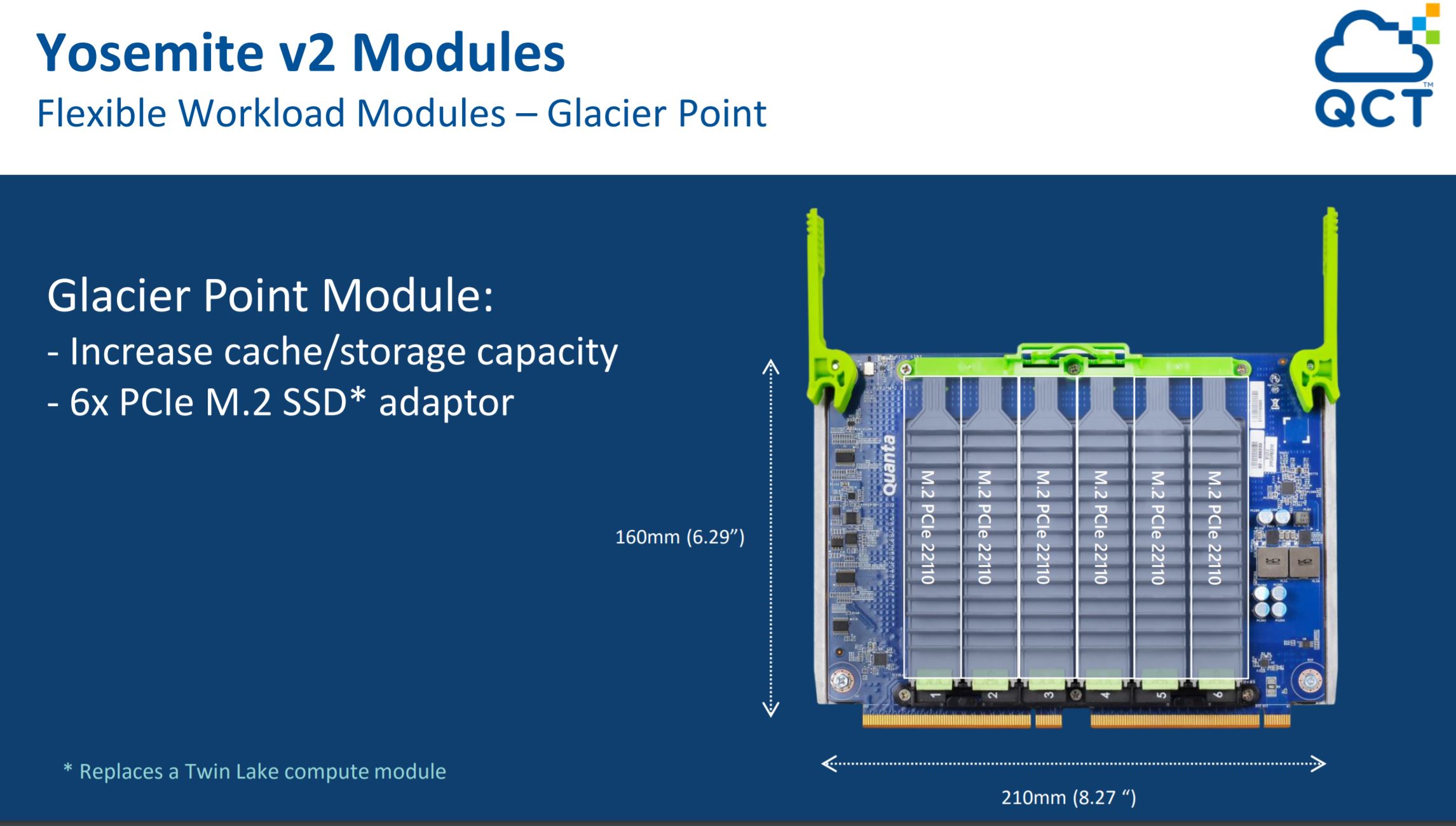

Beyond the compute module, we had Glacier Point modules for Yosemite V2. Glacier Point adds six NVMe drives into the same form factor that Twin Lakes fits in. Facebook can mix in more storage into sleds by simply changing modules. If you want a higher capacity storage Xeon D server, Glacier Point is what you would use.

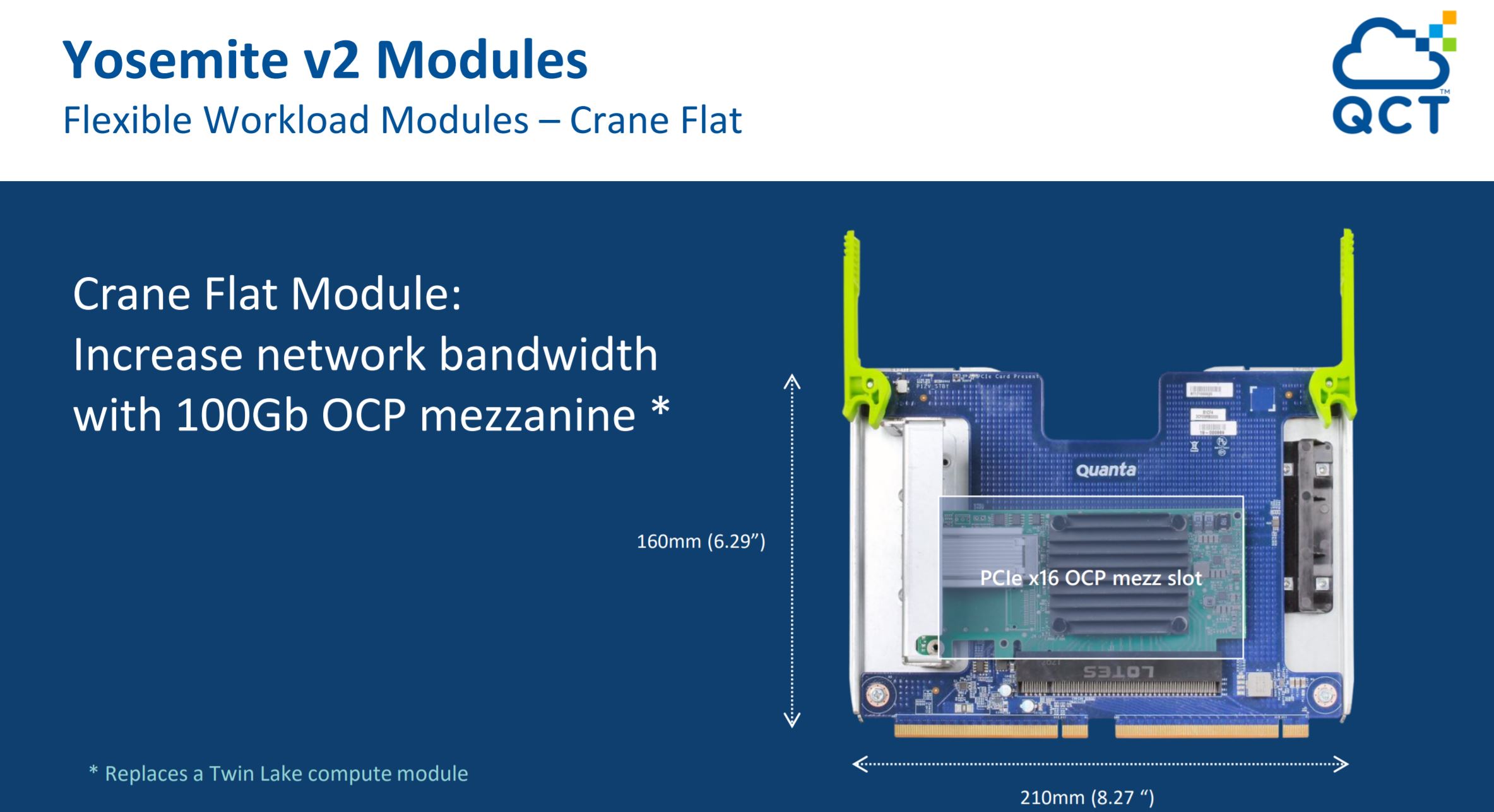

Quanta and Facebook also showed “Crane Flat” a year ago, a very interesting model. Crane Flat was set to add a PCIe x16 slot into a module to replace Twin Lakes. Quanta and Facebook appeared to be going down the path of adding PCIe x16 cards in the Yosemite V2 ecosystem.

Something about this module may seem strange. Quanta QCT is showing what appears to be a Mellanox PCIe x16 100Gbps NIC on a PCB. Here there appears to be a Lotes x16 slot mounted on the PCB. The NIC is labeled “PCIe x16 OCP mezz slot.” If you look at the OCP NIC 3.0, or the previous generation 1.0 / 2.0 OCP mezzanine cards, they do not use a standard x16 connector.

The physical layout with the QSFP28 port coming out of a module looks a bit strange since Facebook typically touts using multi-host adapters in Yosemite V2 as a major benefit of the architecture.

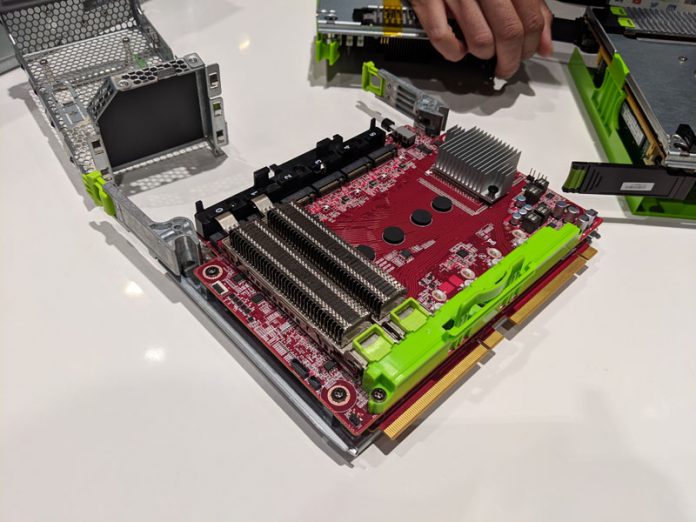

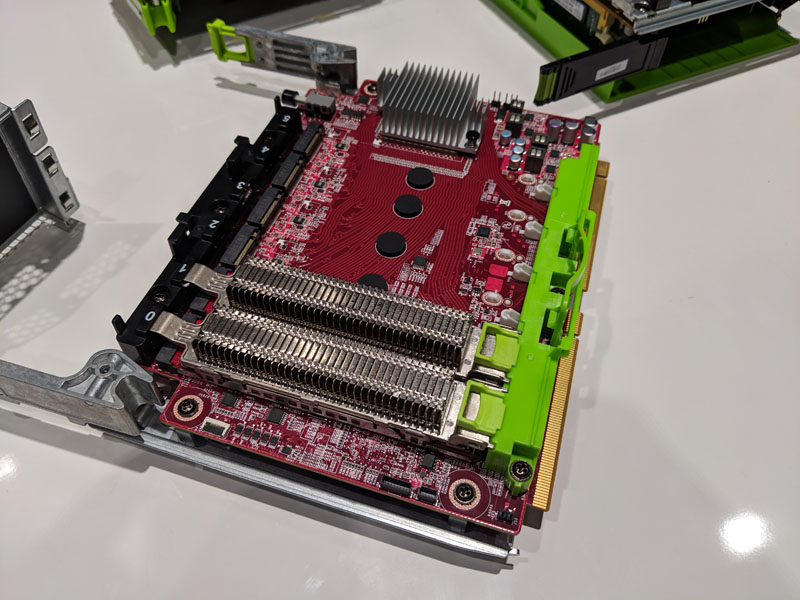

At OCP, we did not see this 100GbE module. Instead, we saw a different and significantly more interesting module. We are going to call this Glacier Point V2 although we were told in the Facebook booth that the name has not been announced. At first, it seemed like an upgraded version of Glacier Point.

What you are looking at is a Twin Lakes replacement module that has what we were told is a PCIe switch under the heatsink. There are six M.2 slots labeled 0-5. In the M.2 slots, there are relatively large heatsinks. Heatsinks of this size are unlikely to be required for NVMe SSDs. However, for Intel Nervana NNP I-1000 modules, they might be more appropriate.

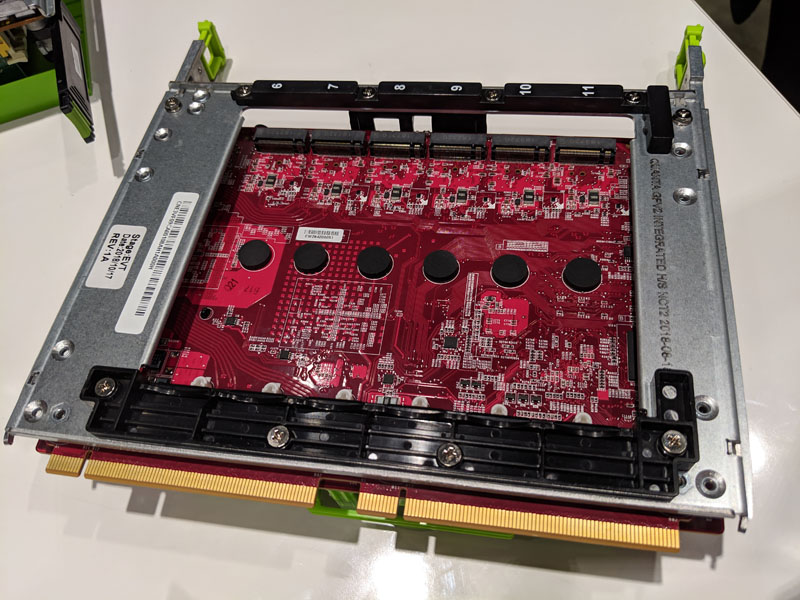

On the rear of the module, we find the key differentiator: six additional M.2 slots labeled 6-11. This is a module that can potentially house twelve M.2 modules, doubling the capacity of Glacier Point. We, therefore, are calling it Glacier Point V2 in captions.
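To make the slot math concrete, here is a minimal sketch of the two carrier modules as we understand them. The class name, field names, and the idea of numbering slots side by side are our own illustrative assumptions, not anything from Facebook or QCT. Only the slot counts (six for Glacier Point, six front plus six rear on the new module) and the 0-5 / 6-11 labels come from what we saw.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class M2Carrier:
    """Hypothetical model of a Yosemite V2 M.2 carrier module (illustrative only)."""
    name: str
    slots_per_side: tuple  # number of M.2 slots on each populated PCB side

    @property
    def total_slots(self) -> int:
        return sum(self.slots_per_side)

    def slot_labels(self) -> list:
        """Number slots consecutively, side by side (assumed labeling scheme)."""
        labels, start = [], 0
        for count in self.slots_per_side:
            labels.append(list(range(start, start + count)))
            start += count
        return labels


# Glacier Point: six NVMe M.2 slots in total (how they are laid out is not modeled here).
glacier_point = M2Carrier("Glacier Point", (6,))
# The new module, which we are calling Glacier Point V2: six front, six rear.
glacier_point_v2 = M2Carrier("Glacier Point V2 (our name)", (6, 6))

front, rear = glacier_point_v2.slot_labels()
ratio = glacier_point_v2.total_slots / glacier_point.total_slots
```

Under these assumptions, `front` covers slots 0-5, `rear` covers slots 6-11, and the new module holds twice as many M.2 devices as Glacier Point.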

The label on the left side of this picture says:

Stage: EVT

Date 2018/10/17

REV: 1A

This seems to indicate that Facebook was not in full production of this module in mid-October 2018, over two quarters after the Crane Flat module was shown by QCT.

Although one may assume this is only to add more NVMe storage to a Yosemite V2 module, the large heatsinks seem to indicate higher power parts are being prepared. If that is the case, and Facebook is looking at this as being its inferencing solution, Facebook could be looking to put up to twelve Intel Nervana NNP I-1000 M.2 cards into Yosemite V2 module slots mixed with Xeon D.

Final Words

At OCP Summit 2019, the PCIe x16 Crane Flat module QCT showed off about a year ago was not present. Instead, we have this new module we are calling Glacier Point V2. The Crane Flat design would have made for an interesting GPU or FPGA platform, as those typically have PCIe x16 host interfaces. Perhaps Intel and Facebook pivoted to an M.2 inferencing architecture. Yosemite V2 Xeon D nodes are often used for web front end nodes at Facebook, so having directly attached inferencing modules may be the way to build that architecture while Zion handles training.

The implications are far broader than just this 12x M.2 module as a potential home for a dozen Intel NNP I-1000 devices. Like the OAM, this is an industry standard modular form factor that aggregates many devices. The QCT Crane Flat module is the opposite, looking to deliver a single, less modular device to the platform. For NVIDIA, the lack of a Crane Flat PCIe x16 slot module for Tesla T4 cards at OCP Summit 2019 is not a good sign. Likewise, for other vendors hoping their PCIe x16 solution for inferencing is going to find its way to Facebook, this seems to indicate the company is investing in M.2 but has the design capability to use a PCIe x16 card.

We look forward to OCP Summit 2020 when we can find out if reading between the lines here was correct. By then, the NNP I-1000 should be in production per Intel.