Supermicro’s AOC-STGN-i2S provides 10-gigabit Ethernet using an Intel 82599 controller. With dual SFP+ connectors and a PCIe 2.0 x8 interface, the card packs a lot of bandwidth into a relatively small, low-profile package that can fit in 2U enclosures. One issue with today’s ubiquitous gigabit Ethernet (GigE) connections is that they deliver only about 100 to 125MB/s of real-world performance. That is simply too low for most modern storage servers. With previous generations of 10GbE controllers (e.g. the Intel 82598), vendors had to deal with the very high power consumption that accompanied high throughput. The Intel 82599 controller, on which the AOC-STGN-i2S is based, reined in power consumption and made the cards much more practical. The AOC-STGN-i2S’s dual SFP+ ports can be used with appropriate copper or short-range fibre SFP+ cabling to span 30m (copper) to 300m (fibre).
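A quick back-of-the-envelope calculation shows why gigabit links top out just under 125MB/s. The following is a rough sketch and makes several assumptions. It uses a standard 1500-byte MTU, IPv4 and TCP headers without options, and the usual Ethernet wire overhead: preamble, header, FCS and inter-frame gap. It also ignores higher-level protocols such as SMB, which pull real-world numbers down toward the 100MB/s end.

```python
# Rough TCP payload ("goodput") estimate for an Ethernet link.
# Assumptions: 1500-byte MTU, IPv4 + TCP headers with no options,
# and no application-protocol overhead on top of TCP.
MTU = 1500                          # bytes of IP packet carried per frame
IP_TCP_HEADERS = 20 + 20            # IPv4 header + TCP header
# Preamble/SFD (8) + Ethernet header (14) + FCS (4) + inter-frame gap (12)
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12


def tcp_goodput_mb_per_s(link_bits_per_s: float) -> float:
    """Approximate TCP payload rate in MB/s (1 MB = 10**6 bytes)."""
    raw_bytes_per_s = link_bits_per_s / 8
    payload_per_frame = MTU - IP_TCP_HEADERS            # 1460 bytes
    bytes_on_wire_per_frame = MTU + ETHERNET_OVERHEAD   # 1538 bytes
    return raw_bytes_per_s * payload_per_frame / bytes_on_wire_per_frame / 1e6


for name, rate in [("1GbE", 1e9), ("10GbE", 10e9)]:
    print(f"{name}: {rate / 8 / 1e6:.0f} MB/s raw, "
          f"~{tcp_goodput_mb_per_s(rate):.0f} MB/s TCP payload")
```

Under these assumptions, 1GbE works out to roughly 119MB/s of payload and 10GbE to roughly 1187MB/s. That tenfold gap is the headroom this card is selling.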

Test Configuration

The test platform was the same one used in the dual Intel Xeon E5606 and dual Intel Xeon L5640 reviews. I detailed the dual Xeon processor build in an earlier dual processor test bed build log piece. The platform has dual IOH36 controllers and an onboard LSI SAS2008 controller. With all of those PCIe lanes, the board offers a TON of expandability beyond the dual CPUs, including enough PCIe 2.0 x8 slots to run cards like the AOC-STGN-i2S.

- CPU: Dual Intel Xeon L5640

- Motherboard: Supermicro X8DTH-6F

- Memory: 24GB ECC DDR3 1066MHz DRAM (6x 4GB UDIMMs)

- OS Drive: OCZ Agility 2 120GB

- Additional NICs: Supermicro AOC-STGN-i2S, Intel EXPX9501AT

- Additional RAID Controllers/ HBAs: LSI 9211-8i

- Enclosure: Supermicro SC842TQ-665B

- Power Supply: Supermicro 665W (included in the SC842TQ-665B)

Overall, this is a fairly realistic test bed for this card. Moving to 10GbE carries a price premium over standard gigabit networking, so most deployments are probably going to happen on dual-processor (DP) platforms.

Features

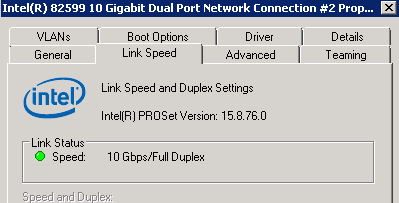

The Supermicro AOC-STGN-i2S may look like a fairly mundane card, but it has a very complete feature set. As anyone who has set up difficult Fibre Channel, iSCSI, or InfiniBand controllers can attest, ease of installation is not always on the feature list for high-speed data networking equipment. Setting up the Supermicro AOC-STGN-i2S was very simple. One can use the Intel Network Connections software/PROSet drivers (in Windows Server 2008 R2, for example) and have everything set up within a matter of minutes. After the Intel driver installation, the card worked like any other Ethernet adapter.

After installation, the Supermicro AOC-STGN-i2S was configured for a virtual machine server (the Windows Server 2008 R2 SP1 machine had the Hyper-V role added). Intel makes this very simple with some common pre-defined profiles. Because it is based on the Intel 82599, the AOC-STGN-i2S includes PCI-SIG SR-IOV and VMDq support, among other features for virtualization servers.
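VMDq (Virtual Machine Device Queues) moves the job of sorting received packets into per-VM queues from the hypervisor onto the NIC. The sorting is done by destination MAC address and VLAN tag. The toy model below illustrates that idea only. The class and method names are hypothetical and do not reflect Intel's driver or hardware interface. Frames that match no filter fall back to a default queue.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple

# (destination MAC, optional VLAN ID, payload)
Frame = Tuple[str, Optional[int], bytes]


class VmdqSorter:
    """Hypothetical model of VMDq-style receive-side sorting."""

    def __init__(self) -> None:
        # Filter table: (MAC, VLAN) -> queue number owned by one VM
        self.filters: Dict[Tuple[str, Optional[int]], int] = {}
        self.queues: Dict[int, Deque[bytes]] = defaultdict(deque)
        self.default_queue = 0  # unmatched traffic goes here

    def assign(self, mac: str, vlan: Optional[int], queue: int) -> None:
        """Route frames for this MAC/VLAN pair to the given queue."""
        self.filters[(mac.lower(), vlan)] = queue

    def receive(self, frame: Frame) -> int:
        """Place a frame in its VM's queue and return the queue number."""
        dst_mac, vlan, payload = frame
        queue = self.filters.get((dst_mac.lower(), vlan), self.default_queue)
        self.queues[queue].append(payload)
        return queue


sorter = VmdqSorter()
sorter.assign("00:15:5d:00:00:01", None, 1)   # VM 1, untagged
sorter.assign("00:15:5d:00:00:02", 10, 2)     # VM 2, VLAN 10
print(sorter.receive(("00:15:5D:00:00:01", None, b"hello")))  # queue 1
```

SR-IOV goes a step further than this. It exposes virtual functions that a VM can drive directly, but the sketch covers only the VMDq queue-sorting idea.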

As one can see from the spec sheet, the AOC-STGN-i2S provides a bevy of features aimed at two main goals: reducing CPU load and providing better support for virtualization and storage platforms. On the storage front, Intel is pushing Fibre Channel over Ethernet (FCoE) fairly heavily. Intel’s goal is to simplify datacenter infrastructure by encapsulating Fibre Channel traffic in Ethernet frames carried over its 10 Gigabit Ethernet products. To push this, Intel provides its FCoE software free with 82599-based cards, including the Supermicro AOC-STGN-i2S.
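To make "encapsulating Fibre Channel traffic" concrete, here is a minimal sketch of how an FC frame is wrapped in Ethernet. The layout follows our reading of the FC-BB-5 FCoE format:

- EtherType 0x8906
- a 14-byte FCoE header: a 4-bit version plus reserved bits, then a 1-byte start-of-frame (SOF) code
- the unmodified FC frame
- a 1-byte end-of-frame (EOF) code and 3 reserved bytes

The SOF/EOF values in the usage line are placeholders, not real code points, and the Ethernet FCS is left out.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # EtherType used for FCoE frames


def encapsulate_fcoe(dst_mac: bytes, src_mac: bytes, fc_frame: bytes,
                     sof: int, eof: int) -> bytes:
    """Wrap a Fibre Channel frame in an Ethernet frame (FCS omitted).

    Sketch of the FC-BB-5 layout as we understand it; sof/eof are the
    caller-supplied start/end-of-frame codes.
    """
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    eth_header = dst_mac + src_mac + struct.pack("!H", FCOE_ETHERTYPE)
    # Version 0 and reserved bits (13 bytes of zeros), then the SOF byte
    fcoe_header = bytes(13) + struct.pack("!B", sof)
    # EOF byte followed by 3 reserved bytes
    trailer = struct.pack("!B", eof) + bytes(3)
    return eth_header + fcoe_header + fc_frame + trailer


# Placeholder MACs, a dummy 36-byte FC frame and placeholder SOF/EOF codes
frame = encapsulate_fcoe(b"\x0e\xfc\x00\x00\x00\x01", b"\x00\x1b\x21\x00\x00\x02",
                         bytes(36), sof=0x01, eof=0x02)
print(len(frame))
```

Because full-size FC frames are larger than a standard 1500-byte Ethernet payload, FCoE networks run with "baby jumbo" frames and lossless Ethernet features. That requirement is part of why FCoE support is tied to specific 10GbE hardware and switches.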

One can see in the picture above that the FCoE protocol is installed with the Supermicro AOC-STGN-i2S. In the interest of not rewriting a spec sheet, I will stop here, but anyone looking for support for a specific feature should do the appropriate research.

Performance

With SSDs commonly deployed as cache drives, and these SSDs now pushing over 400MB/s (such as the Corsair Performance 3 series P3-128), a 125MB/s gigabit link represents a clear bottleneck. Add to this the fact that SSDs offer a ton of performance in RAID 0 and RAID 5 configurations, and one can see that these cache setups can now easily surpass 1GB/s. Every new storage generation and every new server CPU generation brings a need for greater network performance, which in today’s terms means 10 Gigabit Ethernet.
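The bottleneck argument reduces to a simple minimum. A client sees whichever is slower: the SSD array or the network link. The sketch below makes two optimistic assumptions. RAID 0 is taken to scale perfectly with drive count, and the link is counted at its raw line rate with no protocol overhead. The three-drive array is an illustrative example, not one of the tested configurations.

```python
def effective_mb_per_s(ssd_count: int, per_ssd_mb_s: float, link_gbps: float) -> float:
    """Throughput a single client sees: the slower of the array and the link.

    Assumes ideal RAID 0 scaling and ignores protocol overhead (both optimistic).
    """
    array_mb_s = ssd_count * per_ssd_mb_s
    link_mb_s = link_gbps * 1000 / 8   # raw line rate in MB/s
    return min(array_mb_s, link_mb_s)


for link in (1, 10):
    rate = effective_mb_per_s(ssd_count=3, per_ssd_mb_s=400.0, link_gbps=link)
    print(f"3x 400MB/s SSDs over {link}GbE: {rate:.0f} MB/s")
```

With three 400MB/s SSDs, gigabit caps the transfer at 125MB/s and wastes roughly 90% of the array's capability. A 10GbE link, by contrast, lets nearly all 1200MB/s through.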

Some users may be asking why not just use NIC teaming, which is a common feature of most modern NICs. NIC teaming can speed up access to the server from multiple PCs, but it does little to help a single user accessing large files on a storage server. Teaming typically keeps each connection on a single member link, so one transfer is still capped at 1 Gigabit. Furthermore, to achieve 10 Gigabit performance, one can replace ten cables carrying 1 Gigabit links with one cable carrying a 10 Gigabit link. For datacenters and heavily occupied server racks, cutting common cabling by 90% provides clear advantages.
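Teaming usually keeps each connection on one link to avoid out-of-order packets. Many teaming modes pick the member link by hashing fields of each flow, such as addresses and ports. A given flow therefore always lands on the same link. The sketch uses CRC32 as an illustrative stand-in; real teaming modes use their own hash inputs and functions. The IP addresses and ports in the usage lines are made-up examples.

```python
import zlib


def pick_member_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                     member_links: int) -> int:
    """Choose a team member link for a flow by hashing its addresses and ports.

    CRC32 is an illustrative stand-in for whatever hash a real teaming mode uses.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % member_links


# One client copying one big file: every packet of the flow maps to the same link.
print(pick_member_link("10.0.0.2", "10.0.0.10", 50000, 445, member_links=4))

# Many clients: flows spread across the four links.
links = {pick_member_link(f"10.0.0.{i}", "10.0.0.10", 50000, 445, 4) for i in range(2, 102)}
print(sorted(links))
```

This is why a quad-port team helps a busy server with many clients but leaves a single large file copy at gigabit speed.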

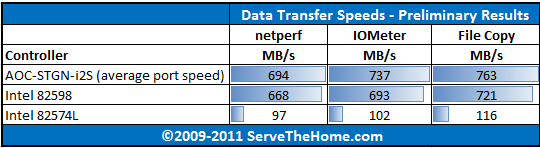

I did not spend copious amounts of time configuring the networking. Since hard drives cannot keep up at 10 Gigabit speeds, I set up both the Xeon E3 series test beds and the dual Xeon L5640 test bed with RAM drives and direct connect cables to handle the I/O demands. I am still working on the network testing methodology, so these results are probably directionally correct, but real-world usage will vary.
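The RAM-drive approach keeps disks out of the measurement. A memory-to-memory TCP transfer does the same thing, and a minimal sketch of one follows. It sends zero-filled buffers from RAM and counts the bytes received in RAM. As written, it runs both ends in one process over loopback, so it only measures the host itself. Testing the 10GbE link would mean running the sender and receiver on the two direct-connected machines. The function name and defaults are illustrative choices.

```python
import socket
import threading
import time


def measure_tcp_throughput(host: str = "127.0.0.1", total_bytes: int = 256 * 2**20,
                           chunk_size: int = 2**20) -> float:
    """Send total_bytes from an in-memory buffer over TCP and return MB/s.

    Data comes from and goes to RAM only, so no disk is in the path.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))          # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    received = 0

    def sink() -> None:
        # Receiver: read until the sender closes, counting bytes only.
        nonlocal received
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(chunk_size)
                if not data:
                    break
                received += len(data)

    receiver = threading.Thread(target=sink)
    receiver.start()
    payload = memoryview(bytes(chunk_size))
    start = time.perf_counter()
    with socket.create_connection((host, port)) as client:
        sent = 0
        while sent < total_bytes:
            n = min(chunk_size, total_bytes - sent)
            client.sendall(payload[:n])
            sent += n
    receiver.join()                 # wait until the receiver has drained everything
    elapsed = time.perf_counter() - start
    server.close()
    return received / elapsed / 1e6


print(f"{measure_tcp_throughput():.0f} MB/s")
```

A single TCP stream from one Python thread may not saturate a 10GbE link on its own. Treat the number as a floor, not a ceiling.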

The big thing to note here is that both 10 Gigabit controllers provide significantly more throughput than the gigabit connections. I think the decision to go 10 Gigabit really comes down to whether a traditional quad-port gigabit NIC can meet the speed requirements of an environment.

One other consideration with 10 Gigabit Ethernet is power consumption. My older single-port Intel EXPX9501AT 10GbE cards, which use the Intel 82598EB chipset, consume 24W under load. This demands good enclosure airflow, and it also made an onboard fan necessary to actively cool the controller. It is difficult to get an exact power consumption figure for the AOC-STGN-i2S. Pushing 40 Gigabits worth of traffic through one card (two ports at 10 Gigabits in each direction) necessarily increases CPU utilization to some degree and also stresses the chipset. I observed about a 12W increase, and I have read that Intel specs the 82599 at 5-6W. From those figures, my guess is that the card uses 7-10W when fully utilized. That is a vast improvement over previous generation technology.
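Normalizing those power figures by port bandwidth makes the generational gap easier to see. The inputs below are the numbers from the paragraph above: 24W for one 10GbE port on the 82598EB card, and my 7-10W estimate for the two-port AOC-STGN-i2S. Both ends of the estimate are shown. The output is therefore only as good as that estimate.

```python
def watts_per_gbps(watts: float, ports: int, gbps_per_port: float = 10.0) -> float:
    """Power per Gbps of port bandwidth (one direction)."""
    return watts / (ports * gbps_per_port)


# (low estimate W, high estimate W, port count), taken from the text above
cards = {
    "EXPX9501AT (82598EB, 1 port)": (24.0, 24.0, 1),
    "AOC-STGN-i2S (82599, 2 ports)": (7.0, 10.0, 2),
}

for name, (low_w, high_w, ports) in cards.items():
    low, high = watts_per_gbps(low_w, ports), watts_per_gbps(high_w, ports)
    print(f"{name}: {low:.2f}-{high:.2f} W per Gbps")
```

That works out to about 2.4W per Gbps for the older card versus roughly 0.35-0.5W per Gbps for the AOC-STGN-i2S, around a five- to sevenfold improvement.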

Conclusion

Overall, the Supermicro AOC-STGN-i2S is a fairly attractive value at approximately $499 retail. One can easily spend $400 on a popular Intel Pro/1000 PT Quad controller that offers four 1Gbps ports. Compared to last generation technology, the AOC-STGN-i2S’s Intel 82599 controller is a significant step forward. Installation was very simple: Intel’s software recognized the card and installed the proper drivers, even with the FCoE software. With a lower cost than the Intel X520 and similar features, the AOC-STGN-i2S is a strong contender. I would go as far as to say that older generation controllers like the Intel EXPX9501AT are not worth purchasing unless they are needed for a validated environment, or one is buying refurbished single-port cards to lower costs.
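The value comparison becomes clearer as cost per Gbps of port bandwidth. The inputs are the approximate street prices quoted above: $499 for two 10Gbps ports and $400 for four 1Gbps ports. Prices will vary, and the calculation ignores the cost of SFP+ cables and switch ports.

```python
def dollars_per_gbps(price: float, ports: int, gbps_per_port: float) -> float:
    """Card price divided by total port bandwidth."""
    return price / (ports * gbps_per_port)


aoc = dollars_per_gbps(499.0, ports=2, gbps_per_port=10.0)   # AOC-STGN-i2S
quad = dollars_per_gbps(400.0, ports=4, gbps_per_port=1.0)   # Pro/1000 PT Quad
print(f"AOC-STGN-i2S: ${aoc:.2f}/Gbps, Pro/1000 PT Quad: ${quad:.2f}/Gbps")
```

At these prices the AOC-STGN-i2S comes in at about $25 per Gbps, versus $100 per Gbps for the quad-port gigabit card.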

Feel free to discuss this article on the ServeTheHome.com Forums!

Patrick,

Good review. What did you use to connect the systems? I’m assuming you used direct attach copper cables (SFP+ DAC) and not short-range optics, right?

Yes, I used standard HP SFP+ direct attach copper cables that I had lying around. The boxes were about 3m from each other, so going with fibre didn’t make sense.

Good results. Much better than 1 gigabit speeds. Also looks like this adapter is inexpensive. Any word if this is getting updated to the X540 version?