After a quick hop out to Las Vegas, Nevada, the STH colocation architecture received a significant upgrade in May 2014. We originally posted our plans almost two months ago. A few iterations later, everything is now up and running. In total, the node count has gone from 6 to 9 while adding only 1 amp to the setup (it is running at 6 amps right now, with most of the architecture idle). This is a very impressive result given the major upgrades in potential CPU power, drive speed and capacity, memory capacity, and even interconnect capabilities. In keeping with tradition, we take a look at some of the hardware that went into the colocation facility.

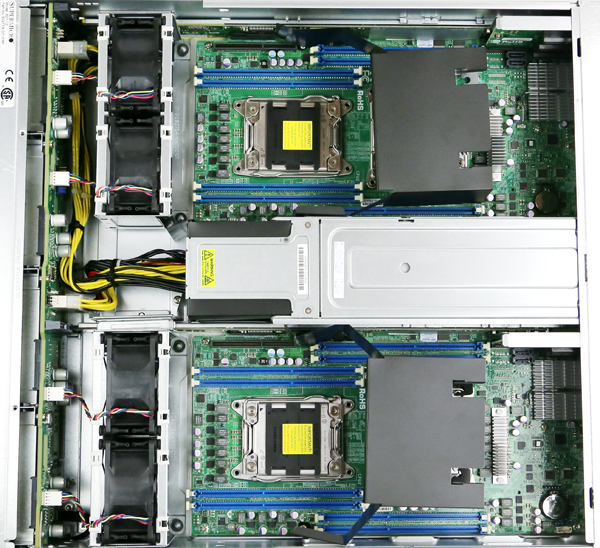

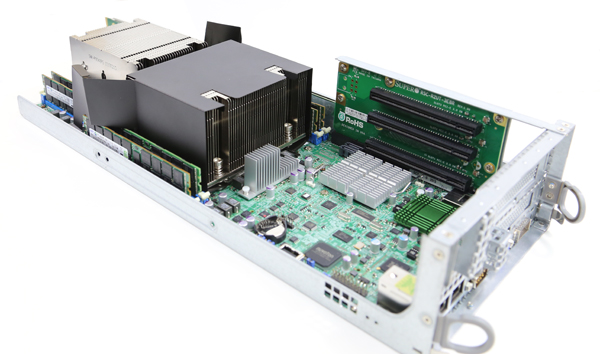

For those who did not read the forum thread, we got a great deal on a Supermicro Twin SYS-6027TR-D71FRF, which is a monstrous system. It packs two dual-socket Intel Xeon E5 systems into a single 2U enclosure, and both systems are fed by a pair of redundant power supplies. Each node comes standard with an LSI SAS 2108 RAID controller and onboard Mellanox ConnectX-3 56Gbps FDR InfiniBand/40GbE. Compared to the previous Dell PowerEdge C6100s, these nodes are significantly more powerful. Here is how the internals are configured.

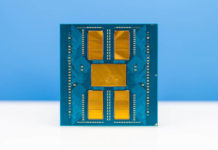

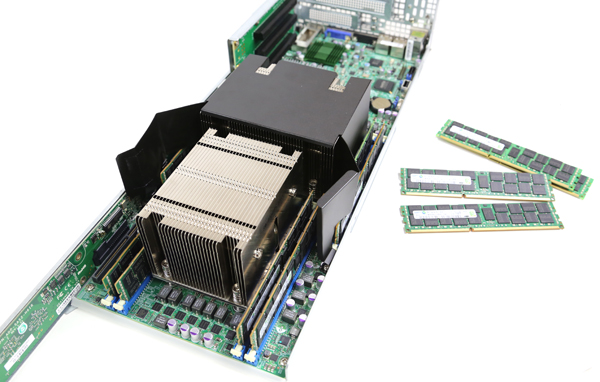

We did an initial test of the storage and networking subsystems with only one processor installed. The next step was adding RAM. This was somewhat tricky because there are only 4 DIMM slots per CPU, for a total of 8 slots per node and 16 per chassis. Using dual Intel Xeon E5-2665s in each node and filling every DIMM slot with 16GB Samsung ECC RDIMMs yielded 16 cores, 32 threads and 128GB of RAM per node.
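As a quick sanity check, the per-node and per-chassis figures follow directly from the CPU and DIMM counts. The sketch below is a minimal Python illustration; the `TwinNode` class and its field names are hypothetical and only encode numbers quoted in this article (two 8-core/16-thread E5-2665s per node, 4 DIMM slots per CPU, 16GB DIMMs, two nodes per chassis).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwinNode:
    """Hypothetical model of one 2U Twin node, using figures from the article."""
    sockets: int = 2             # dual Intel Xeon E5-2665
    cores_per_cpu: int = 8       # E5-2665: 8 cores
    threads_per_core: int = 2    # Hyper-Threading: 8C / 16 threads per CPU
    dimm_slots_per_cpu: int = 4  # only 4 DIMM slots per CPU on this board
    dimm_gb: int = 16            # 16GB Samsung ECC RDIMMs

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_cpu

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def dimm_slots(self) -> int:
        return self.sockets * self.dimm_slots_per_cpu

    @property
    def ram_gb(self) -> int:
        # Every slot is populated, so total RAM = slot count x DIMM size
        return self.dimm_slots * self.dimm_gb


NODES_PER_CHASSIS = 2  # the SYS-6027TR-D71FRF holds two nodes

node = TwinNode()
print(f"per node:    {node.cores} cores, {node.threads} threads, "
      f"{node.dimm_slots} DIMM slots, {node.ram_gb}GB RAM")
print(f"per chassis: {node.cores * NODES_PER_CHASSIS} cores, "
      f"{node.dimm_slots * NODES_PER_CHASSIS} DIMM slots, "
      f"{node.ram_gb * NODES_PER_CHASSIS}GB RAM")
```

The per-chassis line (32 cores, 256GB) lines up with the totals for the two new Supermicro nodes given later in the article.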

One other excellent feature of the Supermicro SYS-6027TR-D71FRF is that it has three PCIe expansion slots to go along with its onboard InfiniBand and SAS RAID controllers. Two are full height and one is half height, but there is enough room for many types of cards.

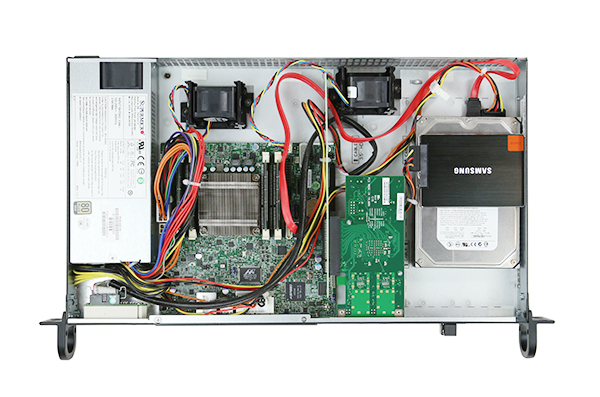

In terms of drives, we ended up using a stack of Crucial M500s. With lots of RAM in the colocation cabinet, there is simply not much disk writing going on for a website like STH. Each of these Supermicro nodes got 2x Seagate 600 Pro 240GB “OS” drives in RAID 1, 2x Crucial M500 480GB “storage” drives in RAID 1, and 2x Hitachi CoolSpin 4TB drives in RAID 1 that simply act as backup targets. Crucial M500 240GB drives also found their way into the pfsense nodes.
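Because every array on these nodes is a two-drive RAID 1 mirror, usable capacity per array equals the smaller member drive, not the sum of both. The minimal Python sketch below works through the per-node layout described above; the `raid1_usable_gb` helper and the array labels are hypothetical, and sizes are the nominal decimal GB figures from the drive names.

```python
# RAID 1 mirrors data across its members, so usable capacity equals the
# smallest member drive rather than the sum of the pair.
def raid1_usable_gb(*drive_sizes_gb: int) -> int:
    if len(drive_sizes_gb) < 2:
        raise ValueError("RAID 1 needs at least two drives")
    return min(drive_sizes_gb)


# Hypothetical per-node array layout using the drive sizes quoted above.
per_node_arrays = {
    "os (Seagate 600 Pro)": (240, 240),
    "storage (Crucial M500)": (480, 480),
    "backup (Hitachi 4TB)": (4000, 4000),
}

NODES = 2  # two nodes in the Supermicro 2U Twin

for name, drives in per_node_arrays.items():
    raw = sum(drives)
    usable = raid1_usable_gb(*drives)
    print(f"{name:24} raw {raw:>5}GB  usable {usable:>5}GB")

backup_usable_tb = raid1_usable_gb(4000, 4000) * NODES / 1000
print(f"backup capacity across both nodes: {backup_usable_tb:.0f}TB usable")
```

Across both nodes, the mirrored 4TB backup arrays work out to 8TB usable, which matches the 8TB disk capacity figure quoted later in the article.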

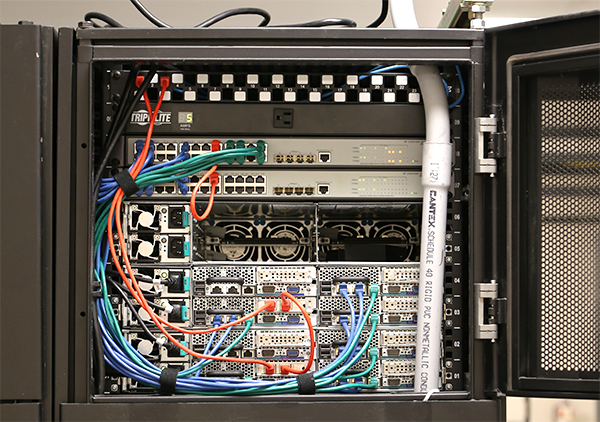

Colocation cabinet redo

- Prep everything ahead of time, including backing up and restoring the pfsense configuration on the new Atom C2758-based pfsense node

- Head to Las Vegas and the colocation facility

- Remove the spare Dell C6100 chassis and its rails from the middle of the cabinet

- Install the pfsense nodes and verify they were working

- Cut over to the new pfsense nodes

- Remove the top active Dell C6100

- Install the Supermicro 2U Twin with one node installed

- While allowing the new 2U Twin nodes to boot, replace two L5520s in the Dell C6100 nodes with Xeon X5650s and add RAM

- Consolidate all Dell C6100 compute nodes to the bottom chassis

- Install the Atom based monitoring node in the front of the rack (not pictured)

- Pack up all of the remaining parts

- Head back to the airport

The net result is a significant increase in density. We replaced 24 Nehalem-EP cores (L5520) with 24 Westmere-EP cores (X5650 and L5639) while keeping the same 216GB of total RAM we previously had across the two Dell C6100 chassis. We also added 32 Xeon E5-2665 cores, 256GB of RAM, over 4TB of SSD capacity, 8TB of disk capacity, and a fast Mellanox FDR link for Hyper-V replication between the two main Hyper-V nodes:

Supermicro Twin SYS-6027TR-D71FRF with dual compute nodes:

- 2x Intel Xeon E5-2665: 2.4GHz base / 3.1GHz Turbo, 20MB L3, 8 cores / 16 threads

- 128GB DDR3 (8x 16GB Samsung per node)

- 2x Seagate 600 Pro 240GB

- 2x Crucial M500 480GB

- 2x 4TB Hitachi CoolSpin drives (backup)

- 1x gigabit port to each switch

- 1x FDR InfiniBand port between the two nodes for replication

New pfsense node 1:

- Rangeley C2758

- 8GB ECC memory

- Crucial M500 240GB

New pfsense node 2:

- Avoton C2750

- 8GB ECC memory

- Crucial M500 240GB

Dell C6100 compute nodes (consolidated in the bottom chassis):

- 2x dual L5520 nodes, each with 48GB of RAM, an Intel 320 160GB SSD and a Kingston E100 400GB

- 1x dual L5639 node with 48GB of RAM, an Intel 320 160GB SSD and 2x WD Red 3TB

- 1x dual X5650 node with 72GB of RAM, an Intel 320 160GB SSD and 2x Crucial M500 480GB
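The C6100 node list can be cross-checked against the earlier claims of 24 Westmere-EP cores and an unchanged 216GB of RAM. This is a minimal Python sketch; the node labels are hypothetical, the RAM figures come from the list above, and the per-CPU core counts are Intel's published specs (L5520: 4 cores; L5639 and X5650: 6 cores).

```python
from collections import Counter

# (microarchitecture, cores per CPU, CPUs per node, RAM in GB) for each
# Dell C6100 node in the list above. Node labels are illustrative only.
c6100_nodes = {
    "L5520 node 1": ("Nehalem-EP", 4, 2, 48),
    "L5520 node 2": ("Nehalem-EP", 4, 2, 48),
    "L5639 node": ("Westmere-EP", 6, 2, 48),
    "X5650 node": ("Westmere-EP", 6, 2, 72),
}

cores_by_arch = Counter()
total_ram_gb = 0
for arch, cores_per_cpu, cpus, ram_gb in c6100_nodes.values():
    cores_by_arch[arch] += cores_per_cpu * cpus
    total_ram_gb += ram_gb

print(dict(cores_by_arch))
print(f"total RAM: {total_ram_gb}GB")
```

The Westmere-EP total comes to 24 cores and the RAM sums to 216GB, consistent with the figures given earlier for the consolidated C6100 chassis.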

The mini node (just in case / for monitoring):

- Supermicro X9SBAA-F

- Intel Atom S1260

- 4GB RAM