Recently I had a chance to work with the newly released Intel DC P3500 1.2TB NVMe SSD in PCIe add-in card (AIC) form. After the guide to installing Fusion-io on ESXi was published, NVMe was the next technology to evaluate. We used an NVMe SSD to evaluate performance under VMware ESXi 6.0 and found some interesting results. I used the standard IOmeter tests for read throughput, write throughput, 4K read IOPS and 4K write IOPS. The results were certainly lower than we expected.

Setting up the Environment

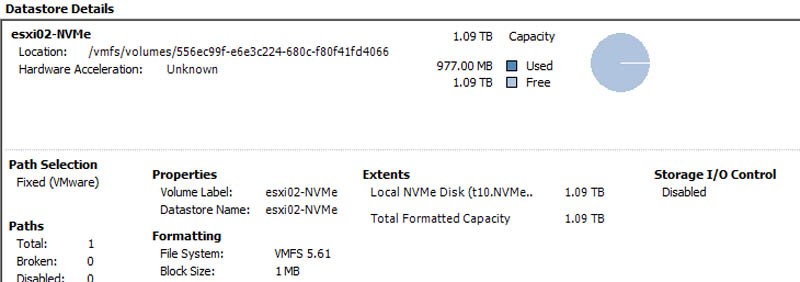

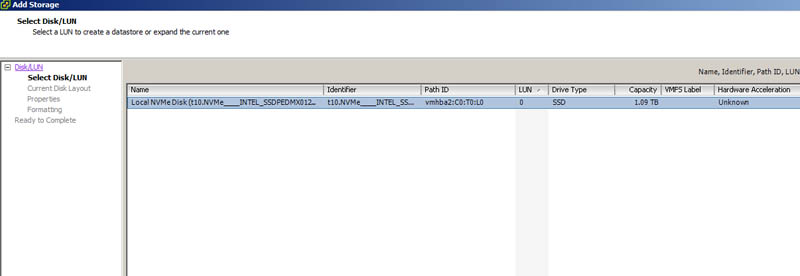

The test server was a Lenovo TD340, a fairly typical dual Intel Xeon E5-2600 V2 tower server. The P3500 1.2TB NVMe SSD was plugged into the PCIe 3.0 x16 slot. VMware ESXi 6.0 detected the drive without any issues using built-in drivers.

After formatting the drive and attaching it to the ESXi host, I created a Windows Server 2012 R2 VM (on a different drive) then assigned the P3500 LUN drive to the VM.

Everything worked using the stock VMware NVMe drivers. We will get to the performance shortly, but for comparison purposes we also installed the Intel ESXi drivers.

Installing Intel NVMe Drivers on VMware ESXi 6.0

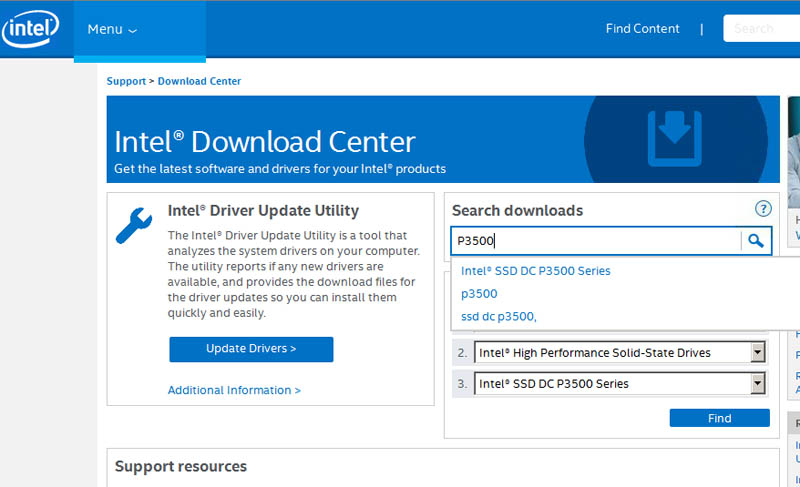

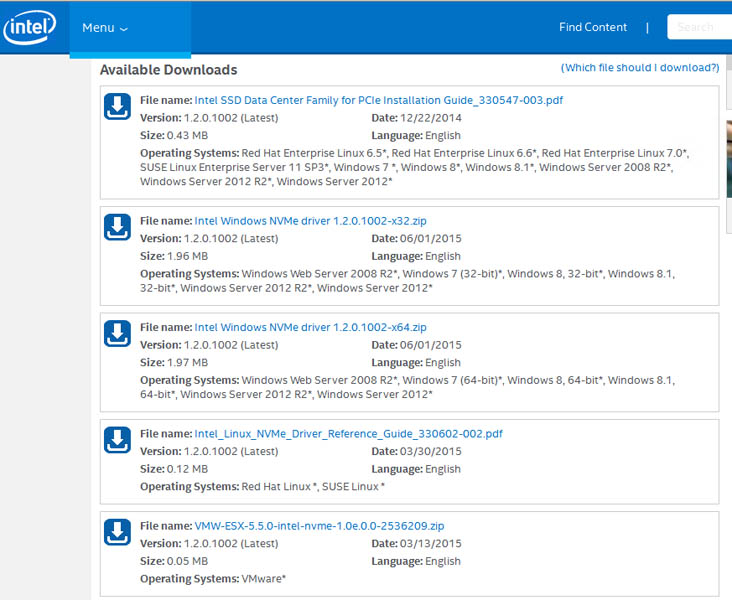

On Windows, performance differs considerably between the Microsoft and Intel NVMe drivers. Therefore we decided to test with the Intel VMware ESXi drivers as well. One can search the Intel site for the P3500 drivers.

Both the Windows and ESXi drivers are version 1.2.0.1002 and were the latest version when we did this testing.

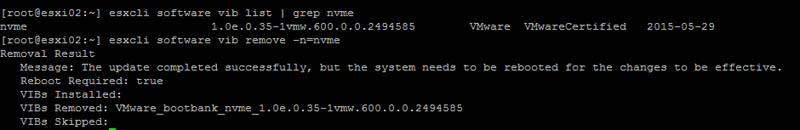

Once downloaded, run the following on the VMware ESXi 6.0 server to check the installed driver version before removing the stock driver:

esxcli software vib list | grep nvme

It shows 1.0e.0.35 is installed. I removed the driver by running the following command:

esxcli software vib remove -n nvme

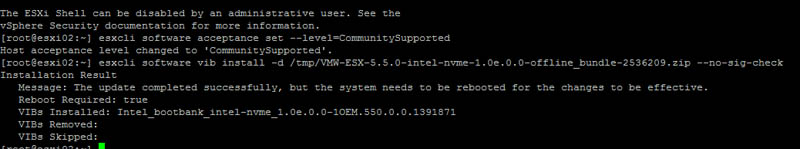

After rebooting the server, run the following to install the 1.2.0.1002 driver.

esxcli software acceptance set --level=CommunitySupported

esxcli software vib install -d /tmp/VMW-ESX-5.5.0-intel-nvme-1.0e.0.0-offline_bundle-2536209.zip --no-sig-check

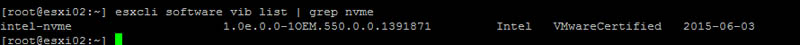

After the reboot, you can verify the driver version.

esxcli software vib list | grep nvme
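When comparing the driver before and after the swap, it can help to print only the VIB name and version instead of the full `grep` line. The following is a minimal sketch, assuming `awk` is available in the shell where the output is processed and that the first two columns of the `esxcli software vib list` output are the name and the version. The function name `nvme_vib_version` is a hypothetical helper, not part of esxcli.

```shell
# Print "name version" for every VIB whose name contains "nvme".
# Reads `esxcli software vib list` output on stdin.
# Hypothetical helper; assumes column 1 is the VIB name and column 2 the version.
nvme_vib_version() {
    awk '$1 ~ /nvme/ { print $1, $2 }'
}

# Usage on the ESXi host (shown as a comment, not executed here):
#   esxcli software vib list | nvme_vib_version
```

Matching on the name column rather than the whole line avoids picking up unrelated VIBs that merely mention "nvme" elsewhere in the row.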

You can now reassign the drive to the same VM we used with the stock driver to test head to head performance.

VMware NVMe driver vs. Intel NVMe driver: Intel DC P3500 1.2TB Results

Here are some of the head-to-head tests that were run on the Intel DC P3500 1.2TB in VMware ESXi.

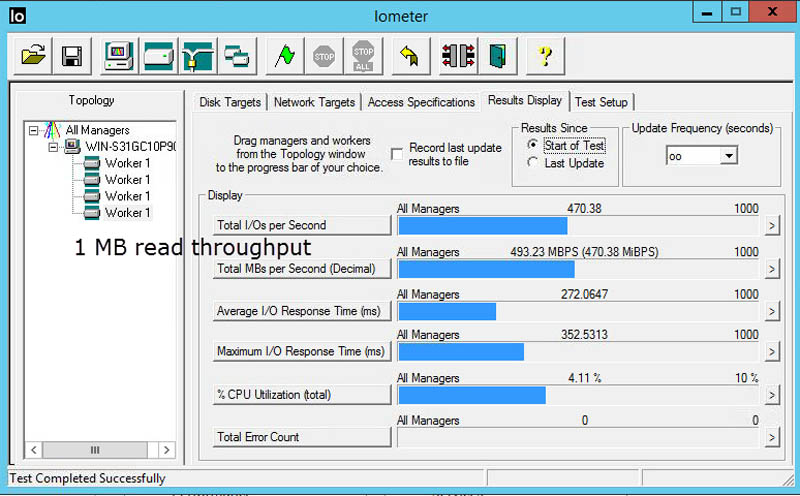

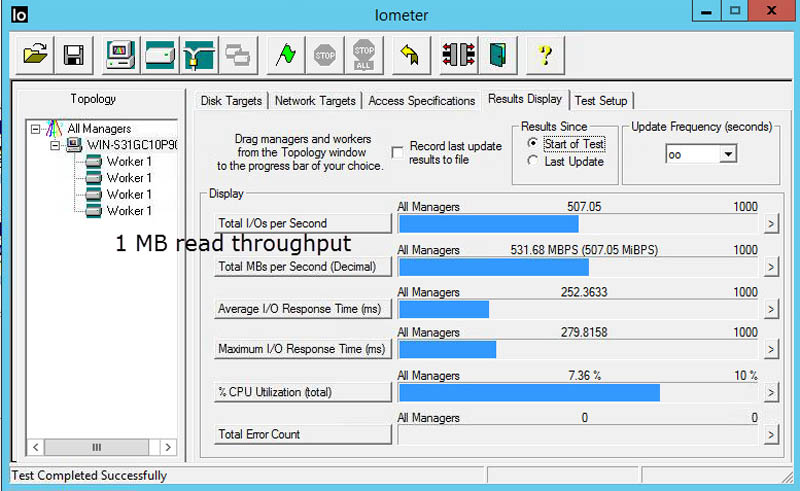

1MB read throughput

With the VMware driver I saw 1 MB read throughput of 493 MB/s, which is lower than I expected.

The Intel driver showed similar results.

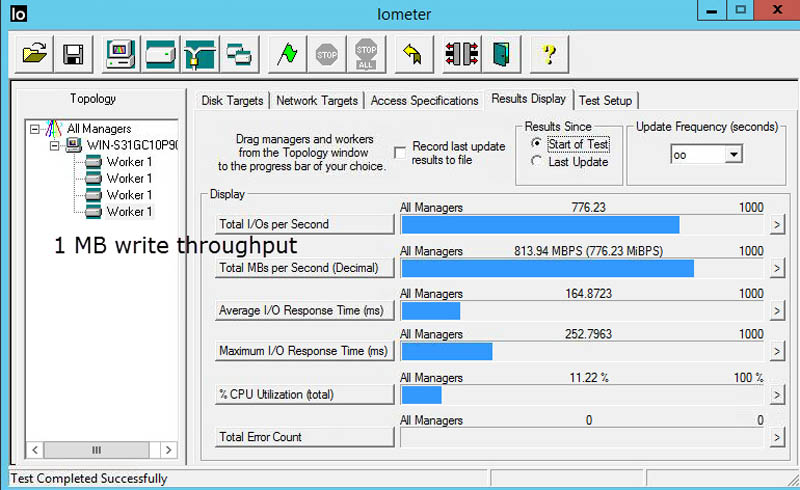

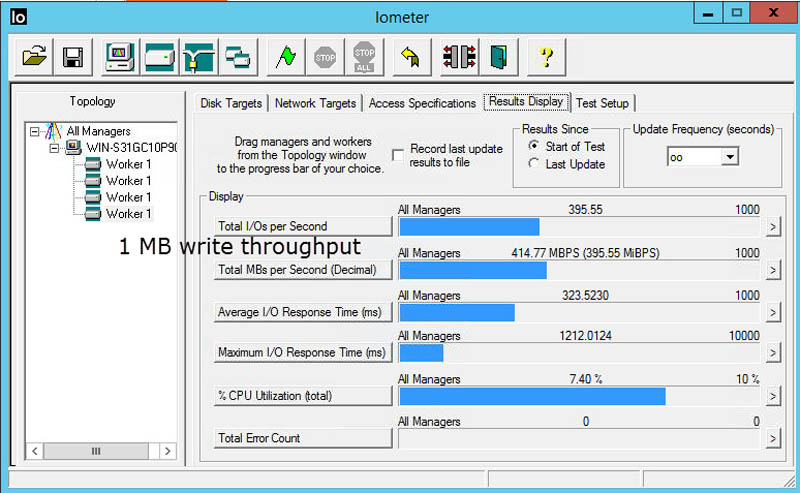

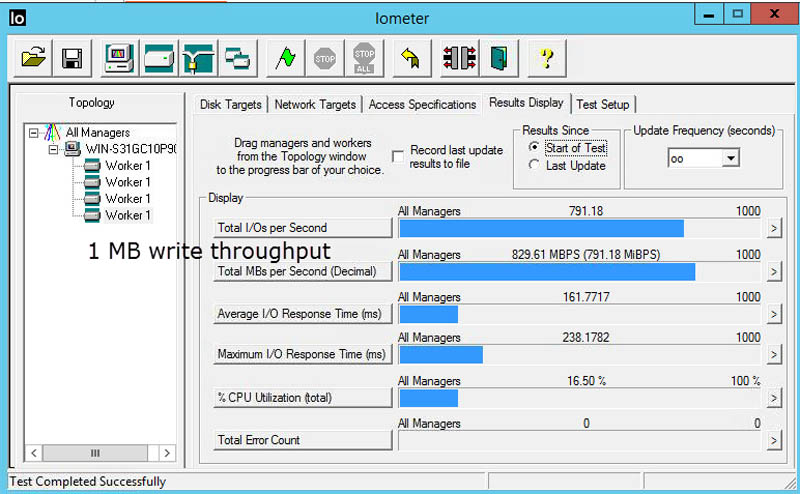

1MB write throughput

As for writes, with the stock driver my 1MB write throughput was 813 MB/s, which is much better than the read result:

The Intel results were lower at 414 MB/s:

It was interesting that the Intel driver was slower on writes when it was about even on reads.

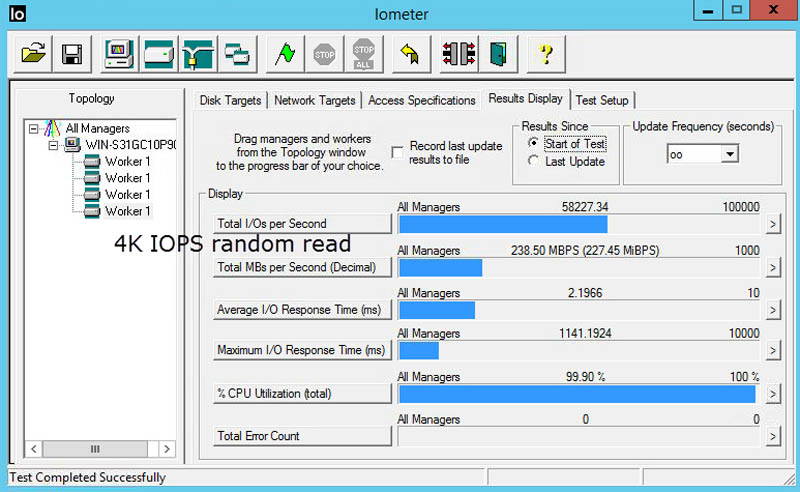

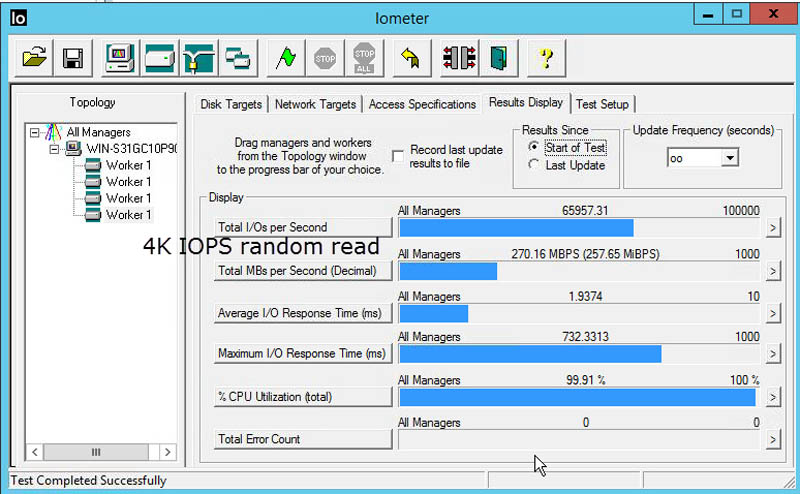

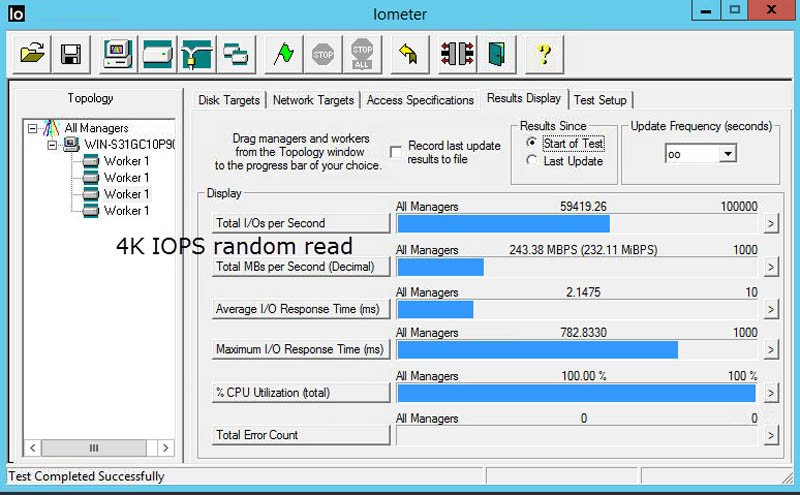

4K random read IOPS

The VMware stock NVMe driver yielded 58227 4K random read IOPS:

The Intel NVMe driver yielded 65957 4K random read IOPS:

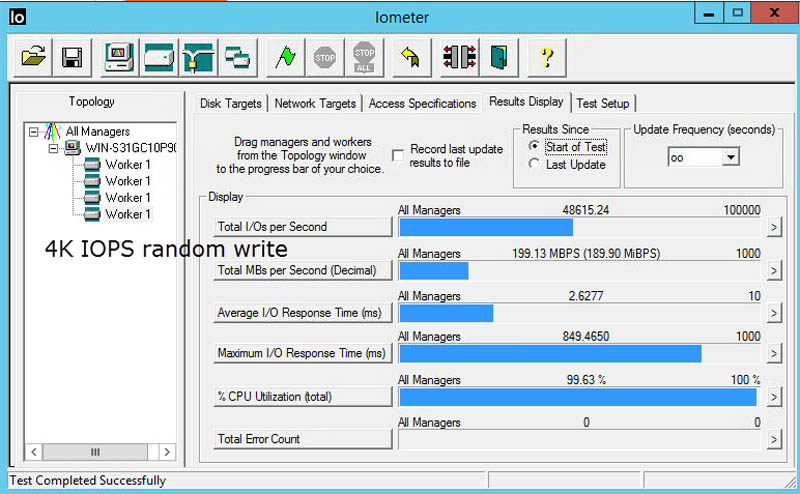

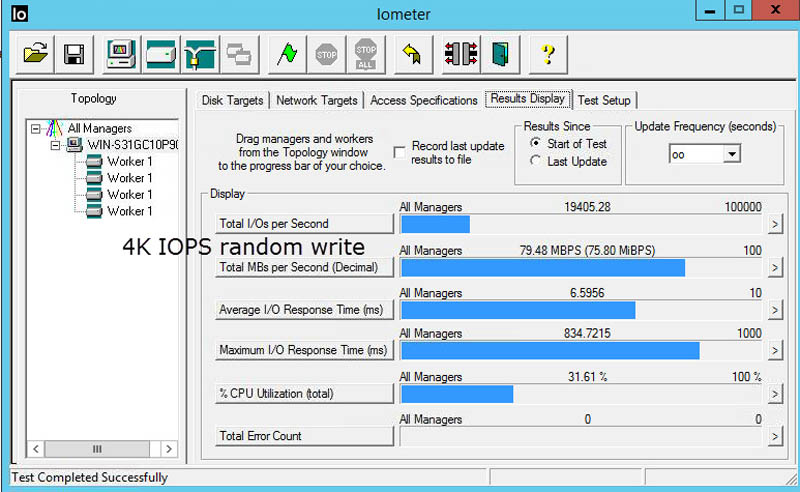

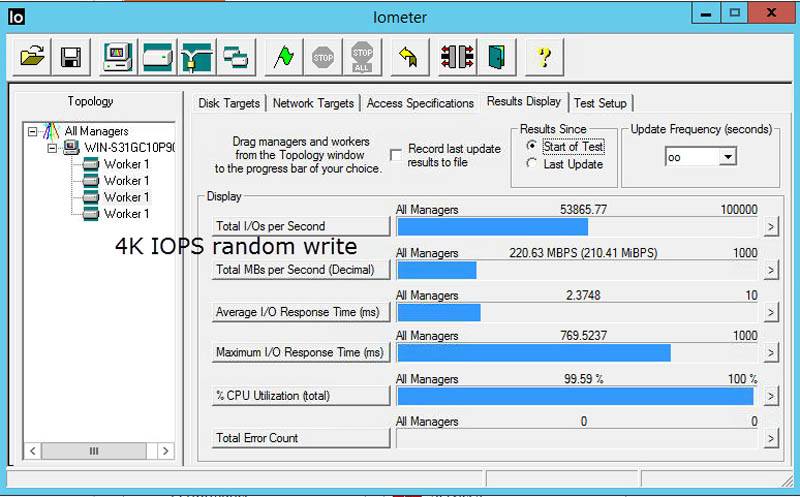

4K random write IOPS

The VMware stock NVMe driver showed 48615 4K random write IOPS:

The Intel NVMe driver yielded a much lower 19405 4K random write IOPS:

The results are mixed; in some cases the Intel driver is worse than the out-of-box driver. So I decided to attach the P3500 directly to the VM.
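To put the datastore results side by side, the relative change from the stock VMware driver to the Intel driver can be worked out from the figures quoted above. This is a minimal sketch using `awk`; `pct_change` is a hypothetical helper name, and the 1MB read test is left out because only the stock driver figure (493 MB/s) is given as a number.

```shell
# pct_change BASE NEW: percentage change from BASE to NEW, one decimal place.
# Hypothetical helper for illustration; inputs are the figures quoted in the text.
pct_change() {
    awk -v base="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (new - base) / base * 100 }'
}

# Stock VMware driver -> Intel driver, datastore tests
echo "1MB write throughput: $(pct_change 813 414)%"      # 813 MB/s -> 414 MB/s
echo "4K random read IOPS:  $(pct_change 58227 65957)%"  # 58227 -> 65957 IOPS
echo "4K random write IOPS: $(pct_change 48615 19405)%"  # 48615 -> 19405 IOPS
```

With these inputs the Intel driver comes out roughly 49% lower on 1MB writes, about 13% higher on 4K random reads and about 60% lower on 4K random writes.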

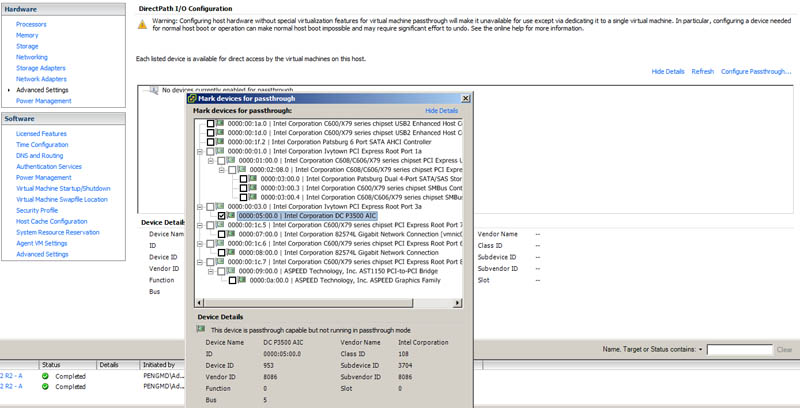

Enable PCI passthrough

Like most PCIe devices, the drive can be passed through directly to a guest VM on platforms that support VT-d. STH had a VT-d ESXi PCIe passthrough guide some time ago.

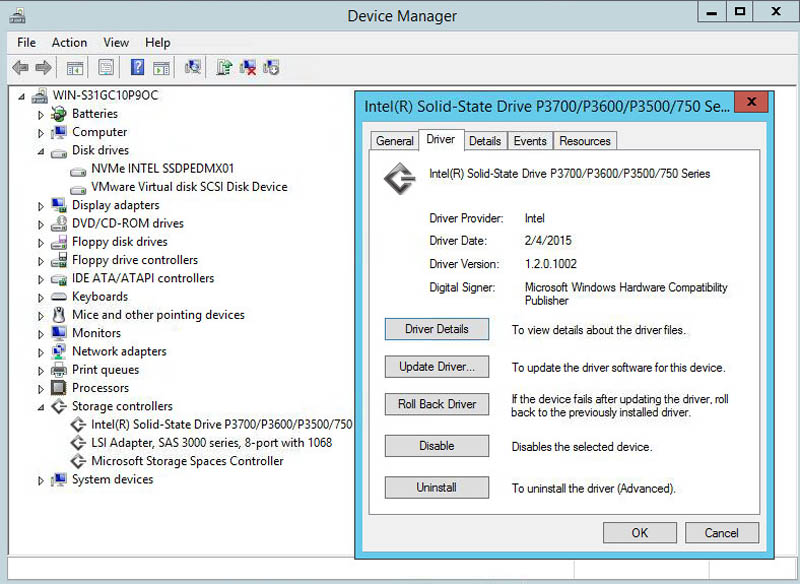

After I assigned it to the VM, Windows Server 2012 R2 recognized the drive.

Here is the Intel 1.2.0.1002 driver installed in the VM:

We ran tests only with the Intel drivers as a point of comparison.

1 MB read throughput: 531 MB/s

1 MB write throughput: 829 MB/s

4K random read IOPS: 59419

4K random write IOPS: 53865
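For a rough sense of scale, the 4K IOPS figures can be converted to throughput by multiplying by the transfer size. The sketch below assumes 4K means 4096 bytes and reports decimal megabytes per second (1 MB = 1,000,000 bytes); `iops_to_mbps` is a hypothetical helper name.

```shell
# iops_to_mbps IOPS BLOCK_BYTES: throughput in decimal MB/s, one decimal place.
# Hypothetical helper; assumes 1 MB = 1,000,000 bytes.
iops_to_mbps() {
    awk -v iops="$1" -v bs="$2" 'BEGIN { printf "%.1f\n", iops * bs / 1000000 }'
}

# Passthrough results from the text, assuming 4096-byte transfers
echo "4K random read:  $(iops_to_mbps 59419 4096) MB/s"
echo "4K random write: $(iops_to_mbps 53865 4096) MB/s"
```

Under those assumptions the 4K random results correspond to roughly 243 MB/s for reads and 221 MB/s for writes, below the 531 MB/s and 829 MB/s measured with 1 MB transfers.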

The numbers look much better than ESXi local storage but are still nowhere near Intel’s published numbers. Unfortunately the results are worse than SanDisk/Fusion-io’s five-year-old ioDrive cards. Given that the P3500 could just be an enhanced version of the 750, we may need to wait for numbers on the P3600/P3700 to see the real difference. Since the product is just being released, we could see better drivers down the road that provide better performance. With the 1.2TB version, it is still a decent platform for general VM usage, but I am not seeing the promised night-and-day performance difference compared to SAS/SATA-based SSDs, even in this very straightforward test.

Couple questions on your testing.

Did you use a single VM, with a single worker, on a single SCSI controller?

Did you use the default LSI controller, or use the paravirtual?

NVMe drives (much like storage arrays) test best when you have multiple parallel actions against them and having a lot of choke points (like a single command queue) will generally leave you disappointed.
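The point about parallelism can be illustrated with Little's law: sustained IOPS is roughly the number of outstanding I/Os divided by the average latency per I/O. A rough sketch follows. The 100 microsecond latency is a made-up round number for illustration, not a measured value for the P3500, and `iops_ceiling` is a hypothetical helper name.

```shell
# iops_ceiling OUTSTANDING_IOS LATENCY_US
# Upper bound on IOPS from Little's law: outstanding I/Os / latency.
# Hypothetical helper; latency is given in microseconds.
iops_ceiling() {
    awk -v qd="$1" -v lat_us="$2" 'BEGIN { printf "%.0f\n", qd * 1000000 / lat_us }'
}

# Assumed (not measured) 100 us per I/O at several levels of parallelism
for qd in 1 4 32; do
    echo "outstanding I/Os: $qd -> at most $(iops_ceiling "$qd" 100) IOPS"
done
```

Under that assumed latency, a single outstanding I/O tops out at 10,000 IOPS no matter how fast the drive is, while 32 outstanding I/Os raise the ceiling to 320,000. In practice latency also rises with load, so real gains are smaller than this simple bound suggests.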