Today we are looking at the Dell PowerEdge C6100 XS23-TY3 cloud server. Much like the Supermicro 2U Twin2 series, the Dell C6100 manages to fit four dual socket nodes into a 2U chassis. This is one of the most exciting value platforms in recent memory for those looking to install multiple servers into a tight space, or for those looking to put together mini-clusters. The basic idea of these designs is that the four nodes share redundant PSUs and a total of either 12x 3.5″ disks or 24x 2.5″ disks. The net result is either three or six drives per node. Processors are dual Intel Xeon 5500 or 5600 series up to 95w TDP, so each node can have anywhere from 4 physical cores up to 12 cores/ 24 threads, with either 96GB or 192GB of memory (5500 or 5600 series) per node. Read on for the very exciting platform that is at the heart of ServeTheHome’s 2013 colocation architecture.

The Dell PowerEdge C6100 Family – Which one is which?

This list was first compiled in the forums but it is very important as there are several options available. Let’s break down the 6xxx cloud computing family from Dell.

Dell C6100 XS23-SB versus XS23-TY3

The Dell C6100 XS23-SB was the first generation part that can be found easily today. The XS23-SB had four nodes but with dual Core 2 generation LGA 771 Xeons. The processor of choice was the 50w TDP Intel Xeon L5420. Today these are generally found for $100-200 less than the XS23-TY3 version. We are still confirming whether these have compatible drive trays. Power supplies tended to be much smaller than later versions. Unless there is a burning reason to get the XS23-SB – skip it.[pullquote_right]Bottom line: Get the Dell C6100 XS23-TY3[/pullquote_right]

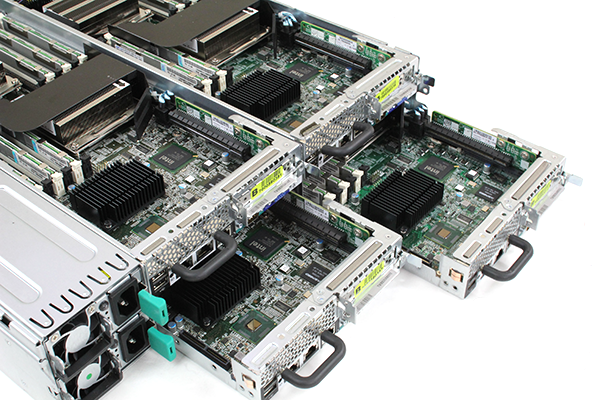

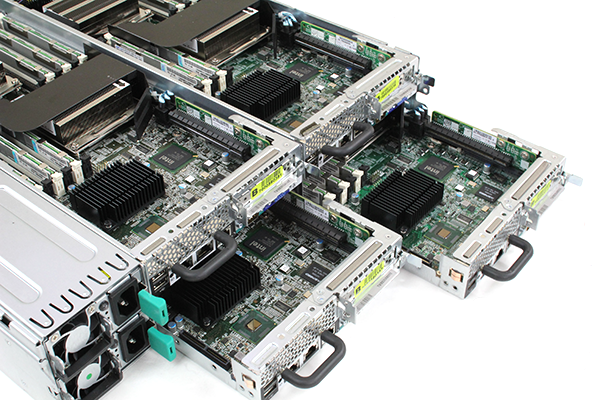

The focus of the piece today is the Dell C6100 XS23-TY3. The most common configuration seems to have been dual L5520s with 24GB DDR3 per node (6x 4GB DDR3 DIMMs). These can accept six DIMMs per socket, and with two sockets per node and four nodes that means 48 DIMM slots per chassis. As mentioned previously the Dell C6100 XS23-TY3 came in both 2.5″ and 3.5″ drive options, with the 3.5″ drives being much more widely used. Here is an overview of what these look like.

Only the top two nodes are visible but there are two identical nodes in-between. One can see four fans in the midplane. Quite amazing that there is one fan allocated for each dual socket node.
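The slot and drive counts above are simple multiplication, and a minimal sketch makes it easy to re-run for other configurations. The function names are hypothetical, and the 8GB and 16GB DIMM sizes are an assumption chosen only because they reproduce the 96GB and 192GB per-node figures quoted earlier with all 12 slots filled.

```python
# Chassis-level totals for a C6100 XS23-TY3, using the counts quoted in the article.
NODES_PER_CHASSIS = 4
SOCKETS_PER_NODE = 2
DIMMS_PER_SOCKET = 6


def dimm_slots_per_chassis():
    """Total DIMM slots across all four nodes."""
    return NODES_PER_CHASSIS * SOCKETS_PER_NODE * DIMMS_PER_SOCKET


def drives_per_node(total_bays):
    """Drive bays are split evenly across the four nodes."""
    return total_bays // NODES_PER_CHASSIS


def node_memory_gb(dimm_size_gb, populated_slots=SOCKETS_PER_NODE * DIMMS_PER_SOCKET):
    """Memory per node for a given DIMM size (assumes identical DIMMs)."""
    return dimm_size_gb * populated_slots


print(dimm_slots_per_chassis())                # 48
print(drives_per_node(12))                     # 3  (3.5" chassis)
print(drives_per_node(24))                     # 6  (2.5" chassis)
print(node_memory_gb(4, populated_slots=6))    # 24 (the common 6x 4GB configuration)
print(node_memory_gb(8), node_memory_gb(16))   # 96 192 (assumed DIMM sizes)
```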

Other Dell PowerEdge C6000 Family Members

- The Dell C6105 is a 4-node dual Opteron 4000 series version of the C6100.

- Dell C6145 – Only two nodes here, but each has quad Opteron 6000 series chips, so still 8 CPUs per chassis. The major benefit of this is that one can take advantage of high density with shared fans and power supplies.

- Dell C6220 – I look at this as rev2 for the C6100 series. These can handle higher TDP CPUs, have hot swap fans, etc. They utilize Intel Xeon E5-2600 based nodes and have 1200w power supplies.

These are either too new or too rare to be found easily second hand. The Dell PowerEdge C6220 looks great but is harder to come by unless you are buying thousands of units directly from Dell.

Why is the Dell C6100 XS23-TY3 Exciting? They are INEXPENSIVE!

At this point we have three Dell C6100 XS23-TY3’s at Fiberhub in Las Vegas, our colocation site and one inbound for the lab. Although we have access to higher-end and newer hardware, these were chosen because they are amazingly inexpensive.

I purchased the diskless unit for $1000 + $65 shipping. Here is a link to the “deal” thread. Here is an ebay Dell XS23-TY3 search where several diskless units have popped up for $975 + $45 shipping. The common configuration at this price is:

One 1.1kW power supply in a 3.5″ drive chassis with rackmount rails and four nodes. Each node has:

- Dual Intel Xeon L5520 (4C/8T 2.26GHz) 60w TDP

- 24GB DDR3 Memory (6x 4GB RDIMMs in each node)

- 1x 3.5″ drive sled, wired for up to 3x drives/ node

- 2x Intel Gigabit LAN and 1x IPMI LAN port

Generally speaking, at around $1,000 these units come with neither redundant power supplies nor full 12-drive complements. One may need to add those as necessary and budget for the extra cost.

Quick math tells us that is roughly $266/ node including rackmount rails. Performance wise these are faster in heavily threaded workloads than the Intel Xeon E3-1290 v2 and have the advantage of accepting up to 96GB of inexpensive DDR3 RDIMMs in each node compared to the Intel Xeon E3-1200 series at 32GB per node. The XS23-TY3 also uses triple channel memory versus dual. At roughly $266/ node that’s about the price of an Intel Xeon E3-1240 V2 before RAM, motherboard, chassis, etc.
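For anyone comparing listings, the per-node math is easy to reproduce. This is a minimal sketch using the two price points mentioned above; `cost_per_node` is a hypothetical helper name, and the result lands within a dollar of the per-node figure quoted in the text.

```python
def cost_per_node(unit_price, shipping, nodes=4):
    """Delivered price of one chassis divided across its nodes."""
    return (unit_price + shipping) / nodes


# The unit purchased for this article: $1000 + $65 shipping
print(cost_per_node(1000, 65))   # 266.25

# The ebay listings mentioned above: $975 + $45 shipping
print(cost_per_node(975, 45))    # 255.0
```

Adding a second power supply or extra drive sleds raises the chassis price, so pass the all-in figure as `unit_price` when comparing against a single-socket build.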

Why they are so inexpensive… thanks big cloud players!

How can the prices be this low? Where do the Dell C6100 XS23-TY3 units on ebay come from? Rumor has it they are mostly off-lease equipment that large cloud providers purchased through Dell Financial Services. The Dell C6100 XS23-TY3 3.5″ option with 8x L5520s and 96GB is the most common version being dumped on the secondary market. Allegedly they are being sold for $700-800 each, but to buy the off-lease equipment one must purchase in 500 unit quantities. It also seems like some came with LSI SAS controllers and Mellanox dual port Infiniband QDR ConnectX-2 controllers. Usually these are not in the inexpensive versions.

Surely they use a lot of power and are loud right?

With redundant 1.1kW power supplies and a very dense configuration, one might expect these to suck down power and be very loud. Since most come with L5520’s rated at 60W TDP and the maximum per CPU is 95w TDP, using lower power CPUs does a lot to lower the overall power consumption (35w * 8 CPUs = 280w of head room right there.) It should also be noted that in addition to the power button in the rear of the chassis, the front chassis ears also have power and reset buttons.

Using a sample test configuration with 8x L5520, 24x 4GB DDR3 RDIMM, redundant 1100w PSUs:

Idle: 174w at 66dba

Folding@Home GROMACS 100% CPU load on all 8 CPUs: 489w at 77.4dba (power saving enabled)

Overall not bad. At maximum load that is around 1A @ 120v per node. One forum member, PigLover is actively pursuing a project to bring these to no more than 50dba for home-lab use. See the forums for his Taming the 6100 project.
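The per-node current and the TDP headroom quoted in this section can be checked with a few lines. This is a rough sketch that treats watts divided by volts as amps, which ignores power factor and PSU efficiency, so read the results as approximations. The helper names are hypothetical.

```python
def per_node_watts(chassis_watts, nodes=4):
    """Split measured chassis power evenly across nodes."""
    return chassis_watts / nodes


def amps(watts, volts):
    """Approximate current draw; ignores power factor."""
    return watts / volts


def tdp_headroom(installed_tdp_w, max_tdp_w, cpu_count):
    """Watts of CPU TDP left unused by running lower-power parts."""
    return (max_tdp_w - installed_tdp_w) * cpu_count


load_w = 489  # Folding@Home load measurement from the article
idle_w = 174  # idle measurement from the article

print(per_node_watts(load_w))                        # 122.25
print(round(amps(per_node_watts(load_w), 120), 2))   # 1.02
print(round(amps(load_w, 120), 2))                   # 4.08 for the whole chassis
print(per_node_watts(idle_w))                        # 43.5
print(tdp_headroom(60, 95, 8))                       # 280
```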

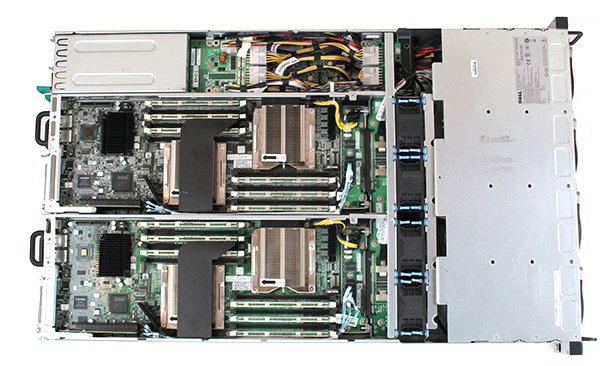

Servicing the Dell C6100

After installing three of these in a rack in the DC, I can say they are absolutely great to work on. Swapping motherboards is done by unscrewing a single screw, then pushing the latch while pulling the unit out.

Power supplies can also be swapped out from the rear of the unit. The front is entirely hot swap as well:

As one can see from the colocation picture, there is a chassis not hooked up. These are so inexpensive that one can buy a barebones unit (no CPU/ RAM, or sell the CPUs and RAM) to stock as a cold spare in the event that anything that is not hot-swap fails. Swapping all drives, four motherboards and two power supplies takes under 5 minutes.

Conclusion and where to go for more information

Overall, these are very easy to work on and for the price, they make a very compelling case. There is an official Dell C6100 XS23-TY3 forum thread with a few thousand views already. PigLover’s Taming the 6100 project is another one to keep an eye on. If you are looking to purchase one, use this ebay search. Be careful. There are sellers selling two node units, single CPU per node units, way overpriced barebones and filled units, etc. They are worth a look but remember, most sellers are purchasing these in lots of several hundred because the Xeon L5520 CPUs sell for $45+ after transaction fees ($360 total) and the RAM for $15 ea x 24 ($360 total), meaning selling anything else is profit. Two key takeaways here. First, there are many of these available at low prices. Second, these may be the best value cloud server or cluster nodes out there at the moment.
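To see why resellers part these out, the CPU and RAM resale figures above can be totaled and set against the rumored $700-800 bulk price. This is a minimal sketch with hypothetical helper names; the prices are the ones quoted in this article and will drift over time.

```python
def part_out_value(cpu_count, cpu_price, dimm_count, dimm_price):
    """Resale value of the CPUs and RAM pulled from one chassis."""
    return cpu_count * cpu_price + dimm_count * dimm_price


def remaining_cost(bulk_price, parts_value):
    """What a reseller still has to recover from the barebones chassis."""
    return bulk_price - parts_value


parts = part_out_value(cpu_count=8, cpu_price=45, dimm_count=24, dimm_price=15)
print(parts)                        # 720
print(remaining_cost(700, parts))   # -20 (parts alone exceed the low-end bulk price)
print(remaining_cost(800, parts))   # 80
```

A negative result means the CPUs and RAM alone already cover the purchase, so the chassis, nodes and power supply are sold on top of that.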

Holy heck these are awesome if you need a lot of boxes. Shared fans and power supplies lower the number of failure points.

Amazing find. PL needs pictures of his project. The mezzanine cards are cheap too.

Wow this is a great find! (and this is coming from a cost critic…)

Wonder if it is possible to buy some of those Supermicro LGA1155/2011 half width twin motherboards and jam them into these; it’s worth the cost saving even if it means you have to saw off a few corners. ;)

I found the Dell C6100 drivers page:

http://ftp.dell.com/Browse_For_Drivers/Servers,%20Storage%20&%20Networking/Cloud%20Product/PowerEdge%20C6100/

Also, the PCIe ports look *really* short on the C6100. Surprisingly, they will fit a standard “short” low-profile PCIe card like an LSI adapter. You just need to remove four screws, lift out the riser card, and fold under the third SATA cable. When reinstalling, push the end of the PCIe card through the slit in the airflow baffle right next to the heat sink. OK so it’s a very tight fit, but it does work.

Already linked in the forum post dba :-)

Yes… awesome! These are the twin motherboard size, but I think they use different power connectors. I do want to give this a shot though as that could be a cool way to mix in new platforms over time. Also need to double-check the mounting points.

Very nice! I set up a private cloud on a batch of Supermicro 2U Twin2’s very similar to these 5 or so years ago and have been adding a few more per year since. These off lease dells are much cheaper than getting new supermicros. Will have to really look at getting some of these during the next expansion cycle.

What is the remote management like?

Cheap! So for $4000 I can have 16 dual processor cloud server nodes with remote management? Do these come with Intel network or others? If dedicated IPMI = 36 network ports tho. Costly switches.

Remote management is really basic MegaRAC based. Think SMC/ Tyan circa 2009-2010 IPMI. I have a fourth inbound for the lab so will show off more. Planning to use it as a test cluster for STH.

Good luck with that, it’d be great if those Supermicro twin power circuit boards can fit into these.

If it works then we get Intel performance and power consumption at AMD prices! :)

Seriously considering building a new all-in-one stack with this.

Did some digging around and everything seems perfect except for not having enough HDD slots per node.

One of the nodes will be used for storage, and all 3 HDD slots will be used by ZFS data (3 x 3TB). So I still need to find a way to give power to 2 SSDs for caching (and booting if I can fit 3 SSDs inside).

Buying the 24 x 2.5″ HDD slots version isn’t a viable option because 1TB/512GB SSDs are still too expensive.

Removing the cases from the SSDs (or just use mSATA) should fit 2 or 3 of them inside the node, there are 6 SATA connectors on each board but there will be no power source available for the SSDs.

If there is a way to draw SATA power from the board (PCI-e/mezzanine slot or even from the USB port at the back), this will be perfect.

Have spec’d out one of these comparable to what we have been using and it’s around $6K less.

Showed the CEO and he says buy two of those and max out the memory! Sweet! New hardware to play with soon :)

Have to be careful on these ebay auctions, there are a lot of these floating around with the old old Supermicro X7DWT-S5023 boards in them, I’m not sure why. Was this an option from Dell?

The X7DWT-S5023 boards also have 8 slots of DDR2 available vs 12 slots of DDR3

Patrick,

Thanks for sharing this information.

Do you have any recommendations for tray/adapter to mount 2.5″ SSDs into the 3.5″ bays?

Any pointers appreciated. Thanks.

Tim, these are the XS23-SB models noted above.

*sigh* these look like a dream, it’s such a shame that they’re all in the states. :(

I think some folks in the forums are having them shipped internationally. May want to check there.

Hello all, the review is great and I was thinking of getting a “Dell C6100 XS23-TY3” for my home VMware lab from ebay. Can someone help me out with the following queries….

1) Can I install esxi 5.1/vCloud on the nodes ? Any issues which I should be aware of ? Is vMotion/DRS/HA/Storage possible b/w different nodes on the same server ?

2) I have plenty of spare HDD – Cheetah T10 SAS 146G LFF 3.5” 10K – Model #ST3146755SS; Will it be possible to use those ?

3) Planning to use 2 nodes with esxi 5.1 (one with vCenter), and two nodes with Openfiler to use as iSCSI storage. Any potential issues with that configuration ?

Thanks !

Great forum questions. Each node acts as its own server. Think of it as 4x 1U servers in a common 2U box. The SAS drives will require either a PCIe or mezz SAS controller as the servers are generally wired to the SATA ports. Part numbers are on the forums.

WOW ! That was a really fast response -:). I understood that each node acts as a server, just wanted to gather some details on vmware compatibility.

So, in order to use my existing SAS drives, I will need to buy

“Dell TCK99 10GbE Daughtercard”, which is almost half the cost of the box itself ! I wonder whether going with SATA drives will make sense ?

Regarding installing esxi 5.1 and vCloud 5.1….has anyone tried that yet ? Don’t want to invest in the box and find out that I can’t use the box for what I had originally intended it for.

Shall I move/start this thread on the forums instead ?

Probably better on the forums. You’ll need the LSI card not the 10GbE card though.

Actually, shared fans increase risk of node failure, since the failure of one fan affects at best 2 and at worst 4 nodes. You’re quite right about the redundant PSUs though, especially if you can hook them up to separate power lines.

Hi, what are the benefits of a server? Can you send free mail, etc.? Please help.

Does anyone know how I can flash the lsi2008 based mezzanine HBA daughtercard for these (Dell Part# Y8Y69) to IT mode. If so can you pls list the site for firmware. Thank you.

The Dell Y8Y69 is not a LSI 2008 based card, it’s LSI 1068E based. I do believe that it can be flashed to IT mode using firmware from the LSI website. I just did that to one of my controllers, and it works like a champ.

I have one of these with the LSI 1068E cards – Apparently they are rated for 6gbps drives, including SSD – I have the 2.5″ drive enclosures, so I have packed them out with 512gb drives. I am finding maximum throughput of 300MBps – This is if I raid 0 4x SSD via one of the nodes. If I leave the drive as single JBOD, I get 200-230MBps throughput. I am running around in circles trying to figure out why the speed is so slow – Pulled the drives out and put them in a R610, speed was 2GBps throughput! Something isn’t right and I can’t find exactly where to make changes. The bios has very limited controls or options… thinking you might have any ideas? Thank you in advance…

We measured 6.2A on 110 or 120v (not sure which) with all 4 nodes under load. A bit more than reported here.

I had one shipped from the USA to New Zealand recently, no issues at all, brilliant piece of hardware!

I’ve got a few of these. Love ’em. I run them at 208V. Three of them (12 nodes) use between 12 and 15A at full load in the 2x L5520, 24GB of RAM per node, one hard drive per node configuration, so right around 1A per node at 208V. Not bad at all. And yeah, super-cheap. I feel like they’re the build-a-cluster-on-a-budget secret. I was replacing the internals of our old 2U nodes with off-the-shelf whitebox hardware, but buying these things on eBay is way more cost-effective!

Chris – did you ever solve this issue? I’m running these cards now and the Raid (1e) is painfully slow. I’m doing much better – MUCH better – on the NAS (qnap / iScsi) than I am on the local drives.

Did you find a solution to the SSD install on the Dell PowerEdge C6100 XS23-TY3? About to try that also :)

I want to setup 3 2tb sata disks and then have 1 256gb ssd caching

http://www.elitebytes.com/Benchmarks.aspx

But need to know the tricks to getting an SSD into the node and powering it etc.

I see the unit has 3 Sata ports from the back near the power supply, if you wanted to connect 3 more and use 2.5″ drives – do you need a new sled prebuilt to be 3 or 6 drives?

Was there ever a solution to mounting an SSD in the nodes?

I am even considering a PCI-e SSD like the OCZ RevoDrive PCI-E 230GB 4 x PCI Express but it is reported as being a full-sized card.

With the Dell C6100 allowing only short, low-profile PCIe cards, what PCI-e based SSD options will fit these nodes?

Just FYI, if you buy cable sets, you can re-arrange the hdds in a C6100 from 3/3/3/3 to 4/2/4/2 or even 6/0/6/0. You’ll have to PXE boot those diskless nodes, or get a pci-e ssd/fiberchannel/external SAS card, but it’ll take just fine. Besides some crazy expensive cables, no additional hardware is required.

What PCI-E NICs are shown in the picture??

Intel or Broadcom Chipset??

Those are Intel NICs in the colocation. Decided to keep everything on the same driver platform.

What cable sets are you referring to?

I couldn’t find anything that looked like what you might be talking about on ebay…

Has anyone been able to resolve the painfully slow I/O issue with the Mezzanine Cards ? I setup three nodes as Vsphere servers with Raid 1 and the I/O is so poor I cannot use them.

A fourth node has no Mez card and the drives are hooked directly to the SATA ports and performance is fine.

did anyone try using DELL PERC H200 in them?