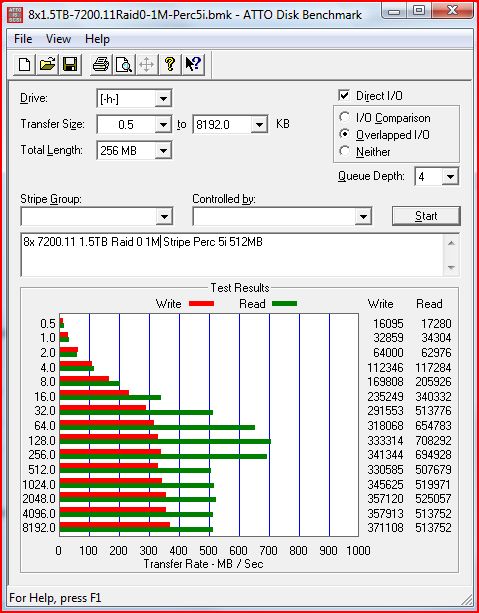

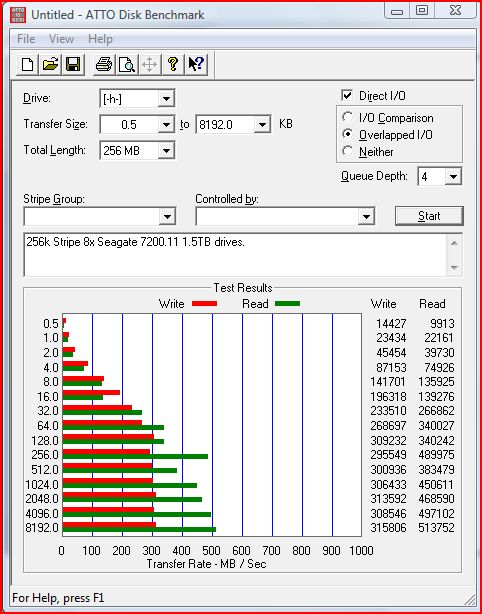

Although more will follow, here’s a quick glimpse of what a cheap but quality hardware RAID solution can do with cheap, large SATA drives in RAID 5. Keep in mind that the Perc 5/i uses the old IOP333 CPU clocked at 500MHz. Many current 3 series Adaptec products, for example, use the IOP333 at 800MHz. Also note that there are reports that the IOP348 has some issues with SATA drives, which makes the results below representative of very inexpensive ($1000) RAID arrays with huge capacities.

Tested on:

Core i7 920 (stock clocks/ watercooled)

12GB Patriot DDR3 1600

Gigabyte X58

EVGA GTX285 1GB SLI

Corsair 1kw power supply

Dell Perc 5/i 512MB with Battery Back-up installed

Vista Ultimate x64

This is a significant improvement over the onboard integrated RAID on consumer-level motherboards, and the cards sell for about $120 on eBay, with battery backup units.

These drives are now on an Adaptec 31605 in a WHS box. More information to follow.

The reason you are seeing such great results is that you are still operating almost exclusively in the cache on the card. Now try increasing the “Total Length” to something greater than the 512MB cache and you should see a significant difference. Don’t get me wrong, this card offers a lot of bang for the buck, which is why I just ordered one today. Try benchmarking with the cache off and then on. That will show you clearly what the cache is doing for you.
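To put rough numbers on the cache point, here is a minimal sketch of how much of a benchmark's working set could sit in the controller cache at once. It is a deliberately crude upper bound, not a model of how the PERC firmware actually manages its cache; the function name `max_cached_fraction` and the list of test lengths are made up for illustration, and only the 512MB figure comes from the post.

```python
CACHE_MB = 512  # cache size of the PERC 5/i described in the post


def max_cached_fraction(total_length_mb, cache_mb=CACHE_MB):
    """Upper bound on the share of the test data that fits in cache at once.

    Crude assumption: the whole cache is available to the benchmark and
    nothing else competes for it.
    """
    if total_length_mb <= 0:
        raise ValueError("total_length_mb must be positive")
    return min(1.0, cache_mb / total_length_mb)


# Hypothetical "Total Length" settings, in MB.
for length in (256, 512, 1024, 2048, 4096):
    share = max_cached_fraction(length)
    print(f"{length:>5} MB test: at most {share:.0%} can sit in cache")
```

Any test length at or below the cache size can be served entirely from cache, which is why raising it well past 512MB should expose the speed of the disks themselves.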

I have been quite impressed with these, and their brother the PERC6/i, for some time. The only real downsides I have found with the cards, for use in any of my home servers (3x Media Servers, 1x Home Theater PC/Server, 1x FTP Server, 1x Backup Server, 3x Game Servers, and a few others; yes, I am nuts), are the limited cache upgrade-ability, the somewhat finicky Dell firmware (solved, at least 99% solved, by the LSI F/W Flash), the lack of RAID6 support on the PERC5/i’s, and the lack of upgradable cache on the PERC6/i’s (you need a PERC6/e for cache upgrades, and even then it’s very limited).

On one of my game servers (the slowest one), for example, I am running 8x 1TB VelociRaptor 10krpm HDD’s in RAID0 off an Adaptec card (2GB cache, 24i-4e connectors, BBU), with 8x HGST Ultrastar 4TB in RAID10 off a PERC6/i flashed with LSI MR F/W… That gives me 8TB of IOP-friendly drive space and 16TB of decently-well-protected drive space.
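The capacity figures above follow from the standard RAID arithmetic, which can be sketched as below. This is only an illustration: the helper `usable_capacity_tb` is a hypothetical name, it assumes equal-sized drives, and it ignores formatting overhead and controller metadata.

```python
def usable_capacity_tb(level, drives, size_tb):
    """Usable capacity in TB for a few common RAID levels.

    Assumes all drives are the same size and ignores filesystem and
    controller overhead.
    """
    if level == "RAID0":
        data_drives = drives          # striping only, no redundancy
    elif level == "RAID5":
        data_drives = drives - 1      # one drive's worth of parity
    elif level == "RAID6":
        data_drives = drives - 2      # two drives' worth of parity
    elif level == "RAID10":
        if drives % 2:
            raise ValueError("RAID10 needs an even number of drives")
        data_drives = drives // 2     # every drive is mirrored
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if data_drives < 1:
        raise ValueError("not enough drives for this RAID level")
    return data_drives * size_tb


print(usable_capacity_tb("RAID0", 8, 1))   # the 8x 1TB VelociRaptor array
print(usable_capacity_tb("RAID10", 8, 4))  # the 8x 4TB Ultrastar array
```

The two calls correspond to the 8TB RAID0 and 16TB RAID10 arrays described in the comment.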

On a different server, I have 8x 500GB VelociRaptor HDD’s in RAID0 via PERC6/i with LSI F/W.

The Adaptec Card (PCI-E 2.0 x8, 24i-4e SAS, 2GB Cache, LSI CacheCade Backup) with 8x 1TB VR RAID0:

– Sequential Reads: 865-873MB/sec

– Sequential Writes: 842-887MB/sec

– Access Time/Latency: 5.7-6.1ms

The Dell PERC6/i (PCI-E x8, 8i SAS, 256MB Cache, Battery Backup) with 8x 500GB VR RAID0:

– Sequential Reads: 619-634MB/sec

– Sequential Writes: 389-412MB/sec

– Access Time/Latency: 6.5-6.8ms
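One rough way to compare the two sets of figures is to divide the sequential throughput by the number of drives. The sketch below does that using the midpoint of each quoted range; the helper name `per_drive_mb_s` is hypothetical, and since the two arrays use different drive models (1TB vs. 500GB), the result is only a ballpark comparison, not a controlled test.

```python
def per_drive_mb_s(low, high, drives):
    """Midpoint of a throughput range (MB/sec) divided evenly across drives."""
    if drives < 1:
        raise ValueError("drives must be at least 1")
    return (low + high) / 2 / drives


# Sequential figures quoted above; both arrays have 8 drives in RAID0.
results = {
    "Adaptec read": (865, 873),
    "Adaptec write": (842, 887),
    "PERC6/i read": (619, 634),
    "PERC6/i write": (389, 412),
}

for label, (low, high) in results.items():
    print(f"{label:<14} ~{per_drive_mb_s(low, high, 8):.1f} MB/sec per drive")
```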

I have run the 1TB VR’s off the PERC6/i; in fact, that was the initial setup, until I realized that the RAID card was holding back the performance of the drives. It doesn’t matter if I short-stroke the 300GB VR’s, which I have done (from 80GB per drive to 150GB per drive; 4K goes up, sequential average goes up a bit, and access times drop a bit; a 4.1ms access time with an 80GB short-stroke is nice, though!), and it doesn’t matter if I run them with write-through, write-back, or any other cache setting.

The PERCx/i RAID controllers simply limit maximum performance on BIG performance-oriented arrays. I realize that most people do not run 8-16 drive RAID0 arrays, but I assure you the data is NOT anything I wouldn’t happily delete myself. That’s why I have 8-20 drive RAID5, RAID5+HS, and RAID10/RAID01 arrays, as well as 10-28 drive RAID6+HS, RAID50, and RAID60 arrays (run off a mix of LSI, Areca, and Adaptec controllers), not to mention 8x 4TB external drives (USB3.0 and eSATA 6Gbps) for off-site storage and a dozen 256GB USB3.0 flash drives for critical file backup.

Within an array I use only one drive model, to minimize potential weak links. I always order half of the drives from Newegg and buy the other half locally at Micro Center, since they price-match Newegg and there is then almost NO chance the drives are all from the same batch.

The drives I use:

– WD VelociRaptor 1TB & 500GB (now-11x 1TB in RAID0 and 8x 500GB in RAID0)

– WD RE4 2TB (been using since release; 8-drive RAID10, 28drive RAID6+HS, 10-drive RAID6)

– WD RE 4TB (new 12-drive RAID5+HS array; 12 in array + 1xHS; very fast!)

– WD Blue 1TB (WD10EZEX Single-Platter: 16x in RAID0 for now)

– Hitachi Ultrastar 2TB & 3TB (17x RAID5+HS, 9x RAID5, 14x RAID6, 8x RAID10)

– Hitachi Deskstar 1TB, 2TB, & 4TB (24x RAID10, 16x RAID50, 11x RAID5)

– Seagate Momentus XT 750GB/8GB (16x RAID0 for fun)

– Seagate Barracuda 3TB (16x RAID6, 23x RAID6+HS, 9x RAID0)

I also have a few older PC’s setup as NAS boxes running either SATA150 or E-IDE arrays with drives ranging from 40GB to 400GB in size (mostly 80GB, 120GB, 160GB, 200GB though), mostly RAID10.

In total, I have 392TB of storage space, 24 arrays, 31 Intel Hardware PCI-E x4 NIC cards (running Teamed GbEth connections up to 3x cards per Server/PC for ~6Gbps Ethernet minus overhead, and MB Eth ports used for true duplex data streams), and all spare PCI-Express slots are filled with GPU’s for Folding@Home*.

*Running 18x GTX480’s, 13x GTX295’s, 9x GTX470’s, 16x GTX570 2.5GB’s, 6x GTX560Ti 448cores, 21x GTX580 3GB’s, 7x 9800GTX+’s, and a random assortment of other cards; plus there are the 3x GTX670 FTW 2GB cards in 3-Way SLI and 1x GTX650Ti SSC PhysX card in my main machine